-

Pros

- Easy to carry out

- Intuitive

-

Cons

- Crude

- Only applies to on-balance sheet IR risk

- Looks at impact on income, not asset / liability values

- Results sensitive to choice of horizon

PV01 Analysis

-

Also applies to fixed income positions

-

Addresses what will happen to bond price if interest rates rise by 1 basis point

-

Price bond at current interest rates

-

Price bond assuming rates rise by 1 bp

-

Calculate loss as current minus prospective bond prices
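The three PV01 steps above can be sketched in a few lines. This is a minimal illustration that assumes a plain annual-coupon bond priced off a single flat yield; the function names `bond_price` and `pv01` are hypothetical.

```python
def bond_price(face, coupon_rate, years, y):
    """Price an annual-coupon bond by discounting every cashflow at flat yield y."""
    coupon = face * coupon_rate
    price = 0.0
    for t in range(1, years + 1):
        cashflow = coupon + (face if t == years else 0.0)
        price += cashflow / (1 + y) ** t
    return price


def pv01(face, coupon_rate, years, y):
    """Loss from a 1bp rise in yield: current price minus prospective price."""
    p_now = bond_price(face, coupon_rate, years, y)          # price at current rates
    p_up = bond_price(face, coupon_rate, years, y + 0.0001)  # price after a 1bp rise
    return p_now - p_up                                      # loss = current - prospective


# 10-year 5% bond at a 5% yield, so it prices at par
print(round(bond_price(100.0, 0.05, 10, 0.05), 6))
print(round(pv01(100.0, 0.05, 10, 0.05), 4))
```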

Duration Analysis

-

Another traditional approach to IR risk assessment

-

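Duration is the weighted average time to each cashflow, $D = \sum_{t=1}^{T} t\,w_t$, where

- weights are present values of each cashflow, divided by PV of all cashflows: $w_t = \dfrac{CF_t/(1+y)^t}{P}$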

-

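Duration indicates sensitivity of bond price to change in yield: $\dfrac{\Delta P}{P} \approx -D\,\dfrac{\Delta y}{1+y} = -D_m\,\Delta y$, where $D_m = D/(1+y)$ is modified duration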

-

Can approximate (modified) duration using simple algorithm as $D_m \approx \dfrac{P(y-\Delta y) - P(y+\Delta y)}{2\,P(y)\,\Delta y}$

-

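Duration is linear in holdings: the dollar duration of a portfolio is the sum of the dollar durations of its bonds, so (at a common yield) the portfolio's duration is the value-weighted average of the bonds' durations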

-

Duration assumes a linear relationship between bond price and yield, so it is only accurate for small yield changes

-

Duration ignores embedded options

-

Duration analysis supposes that yield curve shifts in parallel
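The duration definitions above can be checked numerically. The sketch below assumes annual coupons and a single flat yield (so a parallel shift); it computes Macaulay duration from the PV weights, compares modified duration with the bump-and-reprice approximation, and confirms that pooling two bonds gives the value-weighted average duration. All names are illustrative.

```python
def cashflows(face, coupon_rate, years):
    """(time, cashflow) pairs for an annual-coupon bond."""
    return [(t, face * coupon_rate + (face if t == years else 0.0))
            for t in range(1, years + 1)]


def price(cfs, y):
    return sum(cf / (1 + y) ** t for t, cf in cfs)


def macaulay_duration(cfs, y):
    """Sum of t * w_t, with w_t = PV of cashflow t divided by PV of all cashflows."""
    p = price(cfs, y)
    return sum(t * (cf / (1 + y) ** t) / p for t, cf in cfs)


def approx_modified_duration(cfs, y, dy=1e-4):
    """Bump-and-reprice approximation: (P(y - dy) - P(y + dy)) / (2 P dy)."""
    return (price(cfs, y - dy) - price(cfs, y + dy)) / (2 * price(cfs, y) * dy)


y = 0.05
bond_a = cashflows(100.0, 0.05, 10)
bond_b = cashflows(100.0, 0.08, 3)

d_mac = macaulay_duration(bond_a, y)
d_mod = d_mac / (1 + y)
print(round(d_mac, 4), round(d_mod, 4), round(approx_modified_duration(bond_a, y), 4))

# Portfolio: pooling both bonds' cashflows gives the value-weighted average duration
p_a, p_b = price(bond_a, y), price(bond_b, y)
d_port = macaulay_duration(bond_a + bond_b, y)
d_avg = (p_a * macaulay_duration(bond_a, y) + p_b * macaulay_duration(bond_b, y)) / (p_a + p_b)
print(round(d_port, 6), round(d_avg, 6))
```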

Convexity

-

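If duration takes a first-order (linear) approximation to the bond price-yield relationship, convexity adds the second-order (curvature) term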

-

Convexity is the second order term in a Taylor series approximation

- Convexity is defined as $C = \dfrac{1}{P}\,\dfrac{d^2 P}{dy^2}$

-

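Convexity term gives a refinement to the basic duration approximation: $\dfrac{\Delta P}{P} \approx -D_m\,\Delta y + \tfrac{1}{2}\,C\,(\Delta y)^2$, with $D_m$ the modified duration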

-

In practice, convexity adjustment often small

-

Convexity a valuable property

-

Makes losses smaller, gains bigger

-

Can approximate convexity as $C \approx \dfrac{P(y+\Delta y) + P(y-\Delta y) - 2P(y)}{P(y)\,(\Delta y)^2}$
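To see how much the convexity term refines the duration approximation, the sketch below (assuming an annual-coupon bond and a flat yield, with hypothetical helper names) estimates modified duration and convexity by finite differences and compares both approximations against exact repricing after a 200bp rise.

```python
def cashflows(face, coupon_rate, years):
    """(time, cashflow) pairs for an annual-coupon bond."""
    return [(t, face * coupon_rate + (face if t == years else 0.0))
            for t in range(1, years + 1)]


def price(cfs, y):
    return sum(cf / (1 + y) ** t for t, cf in cfs)


def fd_duration_convexity(cfs, y, dy=1e-3):
    """Finite-difference modified duration and convexity."""
    p0, p_up, p_dn = price(cfs, y), price(cfs, y + dy), price(cfs, y - dy)
    d_mod = (p_dn - p_up) / (2 * p0 * dy)
    conv = (p_up + p_dn - 2 * p0) / (p0 * dy ** 2)
    return d_mod, conv


cfs = cashflows(100.0, 0.05, 10)
y, shock = 0.05, 0.02                      # a 200bp rise in yield
d_mod, conv = fd_duration_convexity(cfs, y)

exact = price(cfs, y + shock) / price(cfs, y) - 1
duration_only = -d_mod * shock
with_convexity = duration_only + 0.5 * conv * shock ** 2
print(round(exact, 4), round(duration_only, 4), round(with_convexity, 4))
```

For a rate rise, duration alone overstates the loss; adding the (positive) convexity term brings the estimate closer, which is the "losses smaller, gains bigger" point above.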

Pros and cons of duration-convexity

-

Pros

- Easy to calculate

- Intuitive

- Looks at values, not income

-

Cons

- Crude (only first-order approx, problems with non-parallel moves in spot rate curve, etc.)

- Often inaccurate even with refinements (e.g., convexity)

- Applies only to IR risks

Scenario Analysis

-

'What if' analysis – set out scenarios and work out what we gain/lose

-

Select a set of scenarios, postulate cashflows under each scenario, use results to come to a view about exposure

-

SA not easy to carry out

- Much hinges on good choice of scenario

- Need to ensure that scenarios do not involve contradictory or implausible assumptions

- Need to think about interrelationships involved

- Want to ensure that all important scenarios covered

-

SA tells us nothing about probabilities

- Need to use judgement to determine significance of results

-

SA very subjective

-

Much depends on skill and intuition of analyst

Portfolio Theory

-

Starts from premise that investors choose between expected return and risk

- Risk measured by std of portfolio return

-

Wants high expected return and low risk

-

Investor chooses portfolio based on strength of preferences for expected return and risk

- Investor determines efficient portfolios, and chooses the one that best fits preferences

-

Investor who is highly (slightly) risk averse will choose safe (risky) portfolio

-

Key insight is that risk of any position is not its std, but the extent to which it contributes to portfolio std

- Asset might have a high std, but contribute small risk, and vice versa

-

Risk is measured by beta (why?) – which depends on correlation of asset return with portfolio return

-

High beta implies high correlation and high risk

-

Low beta implies low correlation and low risk

-

Zero beta implies no contribution to portfolio risk

-

Ideally, looking for positions with negative beta

- These reduce portfolio risk

-

PT widely used by portfolio managers

-

But runs into implementation problems

- Estimation of beta highly problematic

- Each beta is specific to data and portfolio

- Need a long data set to get reliable result

-

Practitioners often try to avoid some of these problems by working with 'the' beta (as in CAPM)

- But this often requires CAPM assumptions to hold

- One market risk factor, etc.

-

CAPM discredited (Fama-French, etc.)
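The portfolio-theory idea that a position's risk is its contribution to portfolio std, measured by beta, can be illustrated on simulated returns. Everything here is hypothetical (a single simulated market driver and four made-up assets); it shows that the weighted betas sum to one, that a volatile but uncorrelated asset can contribute little, and that a negative-beta position reduces portfolio risk.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5000
market = rng.normal(0.0, 0.01, n)          # common (hypothetical) market driver

# Columns: two market-linked assets, a volatile but unrelated asset, a hedge
r = np.column_stack([
    1.2 * market + rng.normal(0.0, 0.005, n),
    0.8 * market + rng.normal(0.0, 0.005, n),
    rng.normal(0.0, 0.03, n),
    -0.5 * market + rng.normal(0.0, 0.002, n),
])
w = np.array([0.45, 0.43, 0.02, 0.10])
rp = r @ w                                 # portfolio return

cov = np.cov(np.column_stack([r, rp]), rowvar=False)
betas = cov[:-1, -1] / cov[-1, -1]         # beta_i = cov(r_i, r_p) / var(r_p)
contrib = w * betas                        # each position's share of portfolio variance

print("std:  ", np.round(r.std(axis=0, ddof=1), 4))
print("beta: ", np.round(betas, 3))
print("share:", np.round(contrib, 3), "sum =", round(contrib.sum(), 6))
```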

Derivatives risk measures

-

Can measure risks of derivatives positions by their Greeks

- Delta

- Gamma

- Rho

- Theta

- Vega

-

Use of Greeks requires considerable skill

-

Need to handle different signals at the same time

-

Risk measures only incremental

- Work only for small changes in exogenous factors

- Can be Greek-hedged, but still be very exposed if there are large exogenous shifts

- Hedging strategies require liquid markets, and can fail if market liquidity dries up (as in Oct 1987)

-

Risk measures are dynamic

- Can change considerably over time

- E.g., gamma of an ATM option goes to infinity as expiry approaches
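The claim that Greeks are dynamic can be made concrete. This sketch assumes Black-Scholes pricing of a European call with no dividends (a model choice not stated in the notes) and shows the gamma of an at-the-money option growing without bound as time to expiry shrinks, while delta stays near 0.5.

```python
import math


def norm_pdf(x):
    return math.exp(-0.5 * x * x) / math.sqrt(2 * math.pi)


def norm_cdf(x):
    return 0.5 * (1 + math.erf(x / math.sqrt(2)))


def bs_call_delta_gamma(S, K, T, r, sigma):
    """Black-Scholes delta and gamma of a European call (no dividends)."""
    d1 = (math.log(S / K) + (r + 0.5 * sigma ** 2) * T) / (sigma * math.sqrt(T))
    delta = norm_cdf(d1)
    gamma = norm_pdf(d1) / (S * sigma * math.sqrt(T))
    return delta, gamma


maturities = [1.0, 0.1, 0.01, 0.001]      # years to expiry
results = [bs_call_delta_gamma(100.0, 100.0, T, 0.0, 0.2) for T in maturities]
for T, (delta, gamma) in zip(maturities, results):
    print(f"T={T:<6} delta={delta:.4f} gamma={gamma:.4f}")
```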

Value at Risk (VaR)

Value at Risk

-

In late 1970s and 1980s, major financial institutions started work on internal models to measure and aggregate risks across institution

-

As firms became more complex, it was becoming more difficult but also more important to get a view of firmwide risks

-

Firms lacked the methodology to do so

- Can't simply aggregate risks from sub-firm level

RiskMetrics

- Best-known system is RiskMetrics developed by JP Morgan

- Supposedly developed by JPM staff to provide a '4:15' report to CEO, Dennis Weatherstone.

- What is maximum likely trading loss over next day, over whole firm?

- To develop this system, JPM used portfolio theory, but implementation issues were very difficult

-

Staff had to

- Choose measurement conventions

- Construct data sets

- Agree statistical assumptions

- Agree procedures to estimate volatilities and correlations

- Set up computer systems for estimation, etc.

-

Main elements of system working by around 1990

-

Then decided to use the '4:15' report

- A one-day, one-page summary of the bank's market risk to be delivered to the CEO in the late afternoon (hence the '4:15')

-

Found that it worked well

-

Sensitised senior management to risk-expected return tradeoffs, etc.

-

New system publicly launched in 1993 and attracted a lot of interest

-

Other firms working on their systems

- Some based on historical simulation, Monte Carlo simulation, etc.

-

JPM decided to make a lower-grade version of its system publicly available

-

This was RiskMetrics system launched in Oct 1994

- Stimulated healthy debate on pros/cons of RiskMetrics, VaR, etc.

-

Subsequent development of other VaR systems, applications to credit, liquidity, op risks, etc.

Portfolio theory and VaR

-

PT interprets risk as std of portfolio return, VaR interprets it as maximum likely loss

- VaR notion of risk more intuitive

-

PT assumes returns are normal or near normal, whilst VaR systems can accommodate wider range of distributions

-

VaR approaches can be applied to a wider range of problems

- PT has difficulty applying to non-market risks, whereas VaR applies more easily to them

-

VaR systems not all based on portfolio theory

- Variance-covariance systems are; others are not

Attractions of VaR

-

VaR provides a single summary measure of possible portfolio losses

-

VaR provides a common consistent measure of risk across different positions and risk factors

-

VaR takes account of correlations between risk factors

Uses of VaR

-

Can be used to set overall firm risk target

-

Can use it to determine capital allocation

-

Can provide a more consistent, integrated treatment of different risks

-

Can be useful for reporting and disclosing

-

Can be used to guide investment, hedging, trading and risk management decisions

-

Can be used for remuneration purposes

-

Can be applied to credit, liquidity and op risks

Criticisms of VaR

-

VaR was warmly embraced by most practitioners, but not by all

-

Concern with the statistical and other assumptions underlying VaR models

- Hoppe and Taleb

-

Concern with imprecision of VaR estimates

- Beder

-

Concern with implementation risk

- Marshall and Siegel

-

Concern with risk endogeneity

- Traders might ‘game’ VaR systems

- Write deep out-of-the-money options

-

Use of VaR as a regulatory constraint might destabilise financial system or obstruct good practice

-

Concern that VaR might not be best risk measure

- Limits of VaR, development of coherent risk measures

Advanced Risk Models: Univariate

Value-at-Risk (VaR)

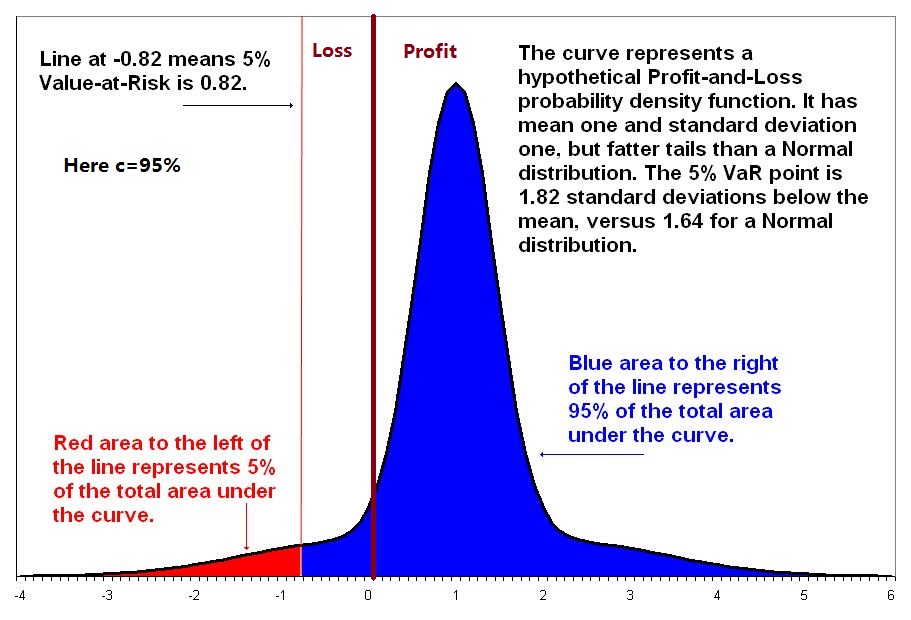

- VaR is the maximum loss over a target horizon such that there is a low, prespecified probability that actual loss will be larger.

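- Formula: $\Pr(L > \mathrm{VaR}_{cl}) = 1 - cl$, where $L$ is the loss over the holding period

or, equivalently, $\mathrm{VaR}_{cl}$ is the $cl$-quantile of the loss distribution: $\mathrm{VaR}_{cl} = \inf\{\, l : \Pr(L \le l) \ge cl \,\}$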

VaR Parameters: The Confidence Level (cl)

- VaR rises with confidence level (cl) at an increasing rate

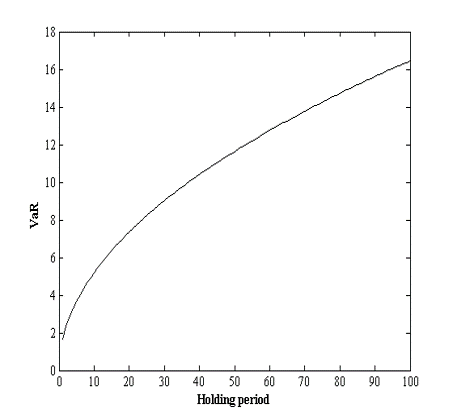

VaR Parameters: The Holding Period (hp)

- If mean is zero, VaR rises with square root of holding period

- 'Square root rule': $\mathrm{VaR}(hp) = \sqrt{hp}\;\mathrm{VaR}(1)$, where $\mathrm{VaR}(1)$ is the one-day VaR

- Daily innovations must be i.i.d. standard normal (why?)

- Empirical plausibility of SRR very doubtful (why?)

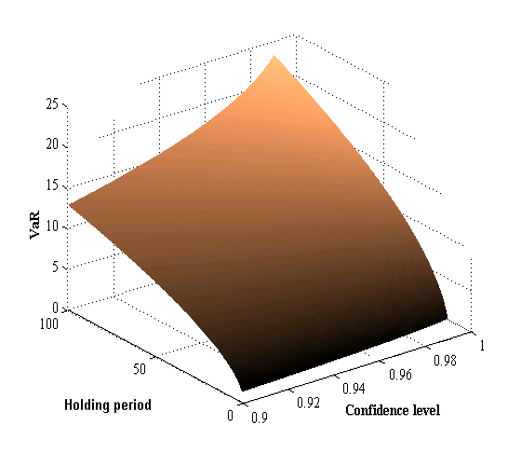
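The two VaR parameters discussed above can be explored with a normal P/L model. This sketch assumes i.i.d. normal P/L per period (mean `mu`, std `sigma`), which is exactly the setting in which the square root rule holds; `normal_var` is a hypothetical helper. It shows VaR rising with cl at an increasing rate, the square root rule holding with zero mean, and failing once the mean is non-zero.

```python
import math
from statistics import NormalDist


def normal_var(mu, sigma, cl, hp=1):
    """VaR (positive number = loss) when per-period P/L is i.i.d. normal(mu, sigma)."""
    z = NormalDist().inv_cdf(cl)
    return -mu * hp + sigma * math.sqrt(hp) * z


cls = [0.90, 0.92, 0.94, 0.96, 0.98]
vars_by_cl = [normal_var(0.0, 1.0, cl) for cl in cls]
steps = [b - a for a, b in zip(vars_by_cl, vars_by_cl[1:])]
print([round(v, 3) for v in vars_by_cl])
print([round(s, 3) for s in steps])        # increments grow with cl

one_day = normal_var(0.0, 1.0, 0.99, hp=1)
ten_day = normal_var(0.0, 1.0, 0.99, hp=10)
print(round(ten_day / one_day, 4), round(math.sqrt(10), 4))   # zero mean: ratio = sqrt(10)

ratio_with_mean = normal_var(0.1, 1.0, 0.99, hp=10) / normal_var(0.1, 1.0, 0.99, hp=1)
print(round(ratio_with_mean, 4))           # non-zero mean: rule no longer holds
```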

VaR Surface

- VaR surface is much more revealing

- A single VaR number is just a point on the surface – doesn’t tell much

- With zero mean, get spike at high cl and high hp

Determine the Confidence Level

-

High cl if we want to use VaR to set capital requirements

- E.g., 99% under Basel

-

Lower if we want to use VaR

- to set position limits

- for reporting/disclosure

- for backtesting (we will discuss it later)

Determine the Holding Period

-

Depends on investment/reporting horizons

-

Daily common for capital market institutions

-

10 days (or 2 weeks) for banks under Basel

-

Can depend on liquidity of market – hp should be equal to liquidation period

-

Short hp makes it easier to justify assumption of unchanging portfolio

-

Short hp preferable for model validation/backtesting requirements

- Short hp means more data to use

Limitations of VaR

-

VaR estimates subject to error

-

VaR models subject to (considerable!) model risk

- Beder

-

VaR systems subject to implementation risk

- Marshall and Siegel

-

But these problems common to all risk measurement systems

VaR uninformative of tail losses

-

VaR tells us most we can lose at a certain probability, i.e., if tail event does not occur

-

VaR does not tell us anything about what might happen if tail event does occur

-

A trader can expose the firm to hidden tail risk by selling out-of-the-money options

- Usually makes a small profit, occasionally a very large loss

- If prob of loss low enough, such a position appears to have no risk, but can be very dangerous

-

Two positions with equal VaRs not necessarily equally risky, because tail events might be very different

-

One solution is to use more VaR information, e.g., estimate VaR at higher cls

VaR creates perverse incentives

-

VaR-based decision calculus can be misleading, because it ignores low-prob, high-impact events

- Events with probs less than VaR tail prob ignored

-

Additional problems if VaR is used in a decentralized system

-

VaR-constrained traders/managers have incentives to 'game' the VaR constraint

- Sell out of the money options, etc.

VaR can discourage diversification

-

VaR of diversified portfolio can be larger than VaR of undiversified one

-

Example

- 100 equally likely states, 100 assets, each making a profit in 99 states and a high loss in the remaining one (a different state for each asset)

- Diversified portfolio is certain to experience a high loss

- Undiversified portfolio is not

- Hence, VaR of diversified portfolio higher than VaR of undiversified one
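The 100-state example can be reproduced numerically. The payoff sizes (profit 1, loss 200) and the 95% cl are hypothetical choices that make the diversified portfolio lose in every state; `hist_var` uses the quantile definition of VaR over equally likely outcomes, and a negative VaR means the position still shows a profit at that cl.

```python
import numpy as np


def hist_var(pl, cl):
    """VaR from equally likely P/L outcomes: minus the smallest x with P(P/L <= x) > 1 - cl."""
    x = np.sort(np.asarray(pl, dtype=float))
    k = int(np.floor(len(x) * (1 - cl) + 1e-9))   # small tolerance for float round-off
    return -x[k]


n = 100
profit, loss = 1.0, 200.0            # hypothetical per-asset payoffs
payoff = np.full((n, n), profit)     # payoff[state, asset]
np.fill_diagonal(payoff, -loss)      # asset a loses only in state a

undiversified = payoff[:, 0]                 # everything in asset 0
diversified = payoff @ np.full(n, 1.0 / n)   # equal weights in all 100 assets

var_undiv = hist_var(undiversified, 0.95)
var_div = hist_var(diversified, 0.95)
print(var_undiv, round(var_div, 4))          # diversified VaR is the larger one
```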

VaR not subadditive

- A risk measure $\rho(\cdot)$ is subadditive if $\rho(X + Y) \le \rho(X) + \rho(Y)$

-

Aggregating individual risks does not increase overall risk

-

Important because: Adding risks together gives conservative (over-) estimate of portfolio risk – want bias to be conservative

-

If risks not subadditive and VaR used to measure risk

- Firms tempted to break themselves up to release capital

- Traders on organized exchanges tempted to break up accounts

- In both cases, problem of residual risk exposure

-

Subadditivity is highly desirable

-

But VaR is only guaranteed to be subadditive if risks are elliptically distributed (e.g., normal)

-

VaR not subadditive for arbitrary distributions
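A standard counterexample shows VaR failing subadditivity for non-elliptical risks. The sketch assumes two hypothetical independent bonds, each losing 100 with a 4% default probability; at 95% each has zero VaR, but the pair does not. Expected shortfall, computed on the same distributions for contrast, remains subadditive.

```python
from itertools import product


def var_discrete(dist, cl):
    """VaR of a discrete loss distribution {loss: prob}: smallest l with P(L <= l) >= cl."""
    cum = 0.0
    for loss in sorted(dist):
        cum += dist[loss]
        if cum >= cl - 1e-12:          # tolerance for float round-off
            return loss
    return max(dist)


def es_discrete(dist, cl):
    """Expected shortfall: average loss over the worst (1 - cl) probability mass."""
    tail, remaining, total = 1.0 - cl, 1.0 - cl, 0.0
    for loss in sorted(dist, reverse=True):
        take = min(dist[loss], remaining)
        total += take * loss
        remaining -= take
        if remaining <= 1e-12:
            break
    return total / tail


bond = {0.0: 0.96, 100.0: 0.04}        # hypothetical: lose 100 on default, 4% default prob
pair = {}                              # two independent bonds of the same kind
for (la, pa), (lb, pb) in product(bond.items(), repeat=2):
    pair[la + lb] = pair.get(la + lb, 0.0) + pa * pb

print(var_discrete(bond, 0.95), var_discrete(pair, 0.95))   # 0 + 0 < VaR of the pair
print(es_discrete(bond, 0.95), es_discrete(pair, 0.95))     # ES stays subadditive
```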

Coherent Measure of Risk

Coherent Risk Measures

-

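Let $X$ and $Y$ be the future values of two portfolios, and $\rho(\cdot)$ a measure of risk over a given horizon. $\rho(\cdot)$ is coherent if it satisfies:

- Monotonicity: if $Y \ge X$, then $\rho(Y) \le \rho(X)$

- Translation invariance: $\rho(X + n) = \rho(X) - n$ for any certain amount $n$

- Homogeneity: $\rho(hX) = h\,\rho(X)$ for any $h > 0$

- Subadditivity: $\rho(X + Y) \le \rho(X) + \rho(Y)$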

-

Homogeneity and subadditivity imply convexity, which is important

-

Translation invariance means that adding a sure amount to our end-period portfolio will reduce loss by amount added

Implications of coherence

-

Any coherent risk measure is the maximum loss on a set of generalized scenarios

- GS is set of loss values and associated probs

-

Maximum loss from a subset of scenarios is coherent

-

Outcomes of stress tests are coherent

- Coherence theory provides a theoretical justification for stress testing!

-

Coherent risk measures can be mapped to the user's risk preferences

-

Each coherent measure has a weighting function

-

Can choose a coherent measure to suit risk preferences

Alternative Measures of Risk: CVaR

- The Conditional VaR (CVaR) is the expected loss, given a loss exceeding VaR

-

It is also called expected shortfall, tail conditional expectation, conditional loss, or expected tail loss

-

VaR tells us the most we can lose if a tail event does not occur, CVaR tells us the amount we expect to lose if a tail event does occur

-

CVaR is coherent
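CVaR can be estimated directly from P/L data as the average loss beyond VaR. The sketch below uses a simple historical estimator (the `numpy.quantile` default interpolation is an arbitrary choice) on simulated standard normal P/L, and checks it against the normal closed forms $\mathrm{VaR} = z_{cl}$ and $\mathrm{CVaR} = \varphi(z_{cl})/(1-cl)$ for zero mean and unit std.

```python
import numpy as np
from statistics import NormalDist


def hist_var_es(pl, cl):
    """Historical VaR and CVaR (ES) from P/L observations; losses are positive."""
    losses = -np.asarray(pl, dtype=float)
    var = np.quantile(losses, cl)
    es = losses[losses >= var].mean()   # average loss in the tail beyond VaR
    return var, es


rng = np.random.default_rng(42)
pl = rng.normal(0.0, 1.0, 200_000)      # simulated standard normal P/L
var_h, es_h = hist_var_es(pl, 0.99)

z = NormalDist().inv_cdf(0.99)
var_n, es_n = z, NormalDist().pdf(z) / (1 - 0.99)   # normal closed forms
print(round(var_h, 3), round(es_h, 3))
print(round(var_n, 3), round(es_n, 3))
```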

CVaR is better than VaR

-

Tells us what to expect in bad states

- VaR tells us nothing

-

CVaR-based decision rule valid under more general conditions than a VaR-based one

- CVaR rule valid under second-order stochastic dominance

- VaR rule valid under first-order stochastic dominance

- FOSD more stringent than SOSD

-

CVaR coherent, and therefore always subadditive

-

CVaR does not discourage risk diversification, VaR sometimes does

-

CVaR-based risk surface always convex

- Convexity ensures that portfolio optimization has a unique well-behaved optimum

- Convexity also enables problems to be solved easily using linear programming methods

Alternative Measures of Risk: Worst-case scenario analysis

-

This is the outcome of a worst-case scenario analysis (Boudoukh et al)

-

Can consider as high percentile of distribution of losses exceeding VaR

- Whereas CVaR is expected value of this distribution

-

WCSA is also coherent, produces risk measures bigger than CVaR

Alternative Measures of Risk: SPAN

-

Standard Portfolio Analysis of Risk (SPAN, CME)

-

Considers 14 scenarios (moderate/large changes in vol, changes in price) + 2 extreme scenarios

-

Positions revalued under each scenario, and the risk measure is the maximum loss over the 16 scenarios, counting the full loss under the first 14 scenarios but only 35% of the loss under the two extreme scenarios

-

SPAN risk measure can be interpreted as maximum of expected loss under each of 16 probability measures, and is therefore coherent
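The SPAN calculation reduces to a weighted maximum over scenario losses. This sketch assumes the reading in which the two extreme scenarios count at 35% of their loss inside the same maximum; the scenario losses are made up, and the real CME system adds further charges not shown here.

```python
def span_risk(scenario_losses, extreme_weight=0.35):
    """Largest loss over 16 scenarios, counting only a fraction of the two extreme ones.

    scenario_losses: 16 losses (positive = loss); the last two are the extreme scenarios.
    """
    if len(scenario_losses) != 16:
        raise ValueError("expected 16 scenario losses")
    regular = list(scenario_losses[:14])
    extreme = [extreme_weight * loss for loss in scenario_losses[14:]]
    return max(regular + extreme)


# Hypothetical revaluation losses for a short option position
losses = [1.0, -0.5, 2.0, 3.5, -1.0, 0.8, 2.2, 4.1, -2.0, 1.5, 3.0, 0.2, 2.7, 3.9, 20.0, 6.0]
print(span_risk(losses))   # an extreme scenario dominates here
```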

Alternative Measures of Risk: Other Methods

- The Semistandard Deviation

- The Drawdown

-

Try to verify whether the following popular risk measures are coherent measures of risk or not

- Standard deviation

- VaR

- CVaR

- WCSA

- SPAN

Stress-Testing

What are stress tests?

-

STs are procedures that gauge vulnerability to 'what if' events

- Used for a long time, especially to gauge institutions' IR exposure

-

Early stress tests 'back of envelope' exercises

-

Major improvements in recent years, helped by developments in computer power

-

Modern ST much more sophisticated than predecessors

Modern stress testing

-

ST received a major boost after 1998

-

Realisation that it would have helped firms to protect themselves better in the '98 crisis

-

ST very good for quantifying possible losses in non-normal situations

- Breakdowns in normal correlation relationships

- Sudden decreases in market liquidity

- Handling concentration risks

- Handling macroeconomic risks

-

STs versus probabilistic approaches

- STs are a natural complement to probabilistic approaches

- VaR and CVaR good on prob side, but poor on 'what if'

- STs poor on prob side, but good on 'what if'

- STs not designed to answer prob questions

Categories of STs

- Different types of event (normal, extreme, etc.)

- Different types of risk (market, credit, etc.) and risk factors (equity risks, yield curve, etc.)

- Different country/region factors

- Different methodologies (scenario analysis, factor push, etc.)

- Different assumptions (relating to yields, stock markets, etc.)

- Different books (trading, banking)

- Differences in level of test (firmwide, business unit, etc.)

- Different instruments (equities, futures, options, etc.)

- Differences in complexity of portfolio

Main types of stress test

-

Scenario ('what if') analysis

- Analyse impact of specific scenario on our financial position

-

Mechanical stress tests

- Evaluate a number of math/stat defined scenarios (e.g., defined in terms of movements in underlying risk factors)

- Work through these in a mechanical way

Uses of stress tests

-

Can provide good risk information

- Good for communicating risk information

- Results from STs readily understandable

- But must avoid swamping recipients with too much data

-

Can guide decision making

- Allocation of capital, setting of limits, etc.

-

ST can help firms to design systems to deal with bad events

- Can check on modelling assumptions, contingency planning, etc.

Benefits of stress testing

-

Good for showing hidden vulnerability

-

Can improve on VaR type information in various ways

- Since stress events unlikely, VaR systems unlikely to reveal much about them

- Short VaR holding period will often be too short to reveal full impact of stress event

- Stress tests better at handling non-linearities that arise in stress situations

- Stress test can take account of unusual features in a stress scenario

-

Can more easily identify a firm’s breaking point

- Identify lethal conjunctions of events, etc.

-

Can give a clearer view about dangerous scenarios

- This helps in deciding how they might be handled

-

Stress tests particularly good for identifying and handling liquidity exposures

- Loss of liquidity a key feature of crises

-

Stress tests also very good for handling large market moves

- Market crashes, etc

-

Stress tests good for handling possible changes in market volatility

- Help identify possible risks in overall risk exposure, gains/losses on derivatives positions, etc

-

Stress tests good for handling exposure to correlation changes

- These very significant in major crises

-

Stress tests good for highlighting hidden weaknesses in risk management system

- Identify hidden 'hot spots' in portfolio

Difficulties with STs

-

Much less straightforward than it looks

-

Based on large numbers of decisions about

- choice of scenarios and risk factors

- how risk factors should be combined

- Ranges of values to be considered

- Choice of time horizon, etc.

-

ST results completely dependent on chosen scenarios and how they are modelled

- When portfolios are complex, can be very difficult even to identify the risk factors to look at

-

Difficulty of working through scenarios in a consistent way

-

Need to follow through scenarios, and consequences can be very complex and can easily become unmanageable

-

Need to take account of interrelationships in a reasonable way

- Need to account for correlations

-

Need to take account of zero arbitrage relationships

- Eliminate 'impossible' events?

-

ST & Prob Analysis

-

Interpretation of ST results

-

STs do not address prob issues as such

-

Hence, always an issue of how to interpret results

-

This implies some informal notion of likelihood

- If prob is negligible, result of ST might have little bearing

- If prob is significant, result might be important

-

-

Integrating ST and prob analysis

-

Can integrate ST and prob analysis using Berkowitz’s coherent framework

- Do prob analysis – VaRs at different cls

- Do ST analysis

- Assign probs to ST scenarios

- Integrate these outcomes into prob analysis

-

This approach is judgemental, but does give an integrated analysis of both probs and STs

-

Choosing scenarios

-

moving key variables one at a time

-

using historical scenarios

-

creating prospective scenarios

-

reverse stress tests

Stylised Scenarios

-

Simulated movement in major IRs, stock prices, etc.

- Can be expressed in absolute changes or multiple-of-std changes

-

Long been used in ALM analysis

-

Problem is to keep scenarios down in number, without missing plausible important ones

Actual Historical Scenarios

-

Based on actual historical events

-

Advantages

- They have plausibility because they have occurred

- Readily understood

-

Can choose scenarios from a catalogue, which might include

-

Moderate market changes

-

More extreme events such as reruns of major crashes

- A good guide is to choose scenarios that are of same order of magnitude as worst-case events in our data sets

-

Hypothetical One-Off Events

-

These might be natural, political, legal, major defaults, counterparty defaults, economic crises, etc.

-

Can obtain them by alternate history exercises

- Rerun historical scenarios and ask what might have been

-

Can also look to historical record to guide us in working out what such scenarios might look like

Evaluating scenarios

-

Having specified our set of scenarios, we then need to evaluate their effects

-

Key is to get an understanding of the sensitivities of our positions to changes in the risk factors being changed

-

Easy for some positions

- E.g., linear FX, stock, futures positions

-

Harder for options

- Must account for (changing) sensitivities to underlying

- Might need approximations, e.g., delta-gamma

-

Must pay particular attention to impact of stylised events on markets

-

Very unwise to assume that liquidity will remain strong in a crisis

-

If futures contracts are used as hedges, must also take account of funding implications

-

Otherwise well-hedged positions can unravel because of interim funding needs (e.g., margin calls)

Mechanical ST

-

These approaches try to reduce subjectivity of SA and put ST on firmer foundation

- Instead of using subjective scenarios, we use math/stat generated scenarios

- Work through these in a systematic way

- Work out most damaging conjunctions of events

-

Mechanical ST more systematic and thorough than SA, but also more intensive

Factor Push Analysis

-

Push each price or factor by a certain amount

- Better to push factors than prices, due to varying sensitivities of prices to factors

-

Specify confidence level

-

Push factors up/down by $\alpha$ standard deviations, where $\alpha$ is the standard normal quantile for the chosen cl

-

Revalue positions each time,

-

Work out most disadvantageous combination

-

This gives us worst-case maximum loss (ML)

-

FP is easy to program, at least for simple positions

-

Does not require very restrictive assumptions

-

Can be modified for correlations,

-

Results of FP are coherent

-

If we are prepared to make further assumptions, FP can also give us an indication of likelihoods

- ML estimates tend to be conservative estimates of VaR

- Can adjust the

-

But FP rests on assumption that highest losses occur when factors move most, and this is not true for some positions

Maximum Loss Optimisation

-

Solution to this problem is to search over interim values of factor ranges

-

This is MLO

-

MLO is more intensive, but will pick up high losses that occur within factor ranges

- E.g., as with losses on straddles

-

MLO is better for more complex positions, where it will uncover losses that FP might miss
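The contrast between factor push and MLO shows up clearly on a straddle. In this sketch (all names and numbers hypothetical) FP evaluates only the corner combinations of $\pm\alpha\sigma$ pushes, while MLO searches a grid over the whole factor range. For a long straddle valued at expiry, FP reports a profit because the payoff is highest at the extremes, whereas MLO finds the loss of the premium when the price ends at the strike. For a linear position both agree, since the worst case is at a corner.

```python
import itertools
import numpy as np
from statistics import NormalDist


def factor_push(horizon_value, value_now, f0, sigmas, cl):
    """Worst loss when each factor is pushed to +/- alpha sigmas (all corner combinations)."""
    alpha = NormalDist().inv_cdf(cl)
    f0, sigmas = np.asarray(f0, float), np.asarray(sigmas, float)
    corners = itertools.product([-1.0, 1.0], repeat=len(f0))
    return max(value_now - horizon_value(f0 + alpha * np.array(s) * sigmas) for s in corners)


def max_loss_optimisation(horizon_value, value_now, f0, sigmas, cl, n_grid=101):
    """Worst loss over a grid covering the whole +/- alpha sigma box, interior included."""
    alpha = NormalDist().inv_cdf(cl)
    axes = [np.linspace(f - alpha * s, f + alpha * s, n_grid) for f, s in zip(f0, sigmas)]
    return max(value_now - horizon_value(np.array(p)) for p in itertools.product(*axes))


K, premium = 100.0, 8.0


def straddle(f):
    """Long straddle at expiry: payoff |S - K|."""
    return abs(f[0] - K)


def linear(f):
    """Hypothetical linear two-factor position."""
    return 2.0 * f[0] - f[1]


fp = factor_push(straddle, premium, [100.0], [10.0], 0.95)
mlo = max_loss_optimisation(straddle, premium, [100.0], [10.0], 0.95)
print(round(fp, 3), round(mlo, 3))       # FP shows a profit; MLO finds the loss at S = K

f0_lin, sig_lin = [50.0, 20.0], [2.0, 1.0]
v0 = linear(np.array(f0_lin))
fp_lin = factor_push(linear, v0, f0_lin, sig_lin, 0.99)
mlo_lin = max_loss_optimisation(linear, v0, f0_lin, sig_lin, 0.99, n_grid=41)
print(round(fp_lin, 4), round(mlo_lin, 4))
```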

Backtesting

-

Backtesting is the process of systematically comparing VaR forecasts with actual returns.

- It should detect weaknesses in the models and point to areas for improvement

- It is one of the reasons that bank regulators allow banks to use their own risk measures to determine the amount of regulatory capital

-

Backtesting compares the daily VaR forecast with the realized profit and loss (P&L) the next day.

- It is recorded as an exception if the actual loss is worse than the VaR

- The risk manager counts the number of exceptions

-

Trading outcome

- Actual portfolio

- Hypothetical portfolio (no intraday trading, no fee income)

- The Basel framework recommends using both hypothetical and actual trading outcomes in backtests

Preparing Data: Obtaining Data

-

Need to obtain suitable P/L data

-

Accounting (e.g., GAAP) data often inappropriate because of smoothing, prudence etc

-

Want P/L data that reflect market risks taken

- Need to eliminate fee income, bid-ask profits, P/L from other risks taken (e.g., credit risks), unrealised P/L, provisions against future losses

-

Need to clean data or use hypothetical P/L data (obtained by revaluing positions from day to day)

Preparing Data: Draw up backtest chart

- Good to plot P/L data against risk bounds over time

- Chart good indicator of possible problems

- over-estimation of risks

- under-estimation of risks

- bias

- excessive smoothness in risk bounds, etc.

Preparing Data: Get to know data

-

Draw up summary statistics

- Mean, std, skewness, kurtosis, min, max, etc.

-

Draw up QQ charts

- Plots of predicted vs. empirical quantiles

-

Draw up charts of predicted vs. empirical probs

-

Shape of these curves indicates whether supposed pdf fits the data – very useful diagnostic

Preparing Data: Standardise Data

-

P/L data typically random

-

Portfolios and pdfs often change from day to day

-

How to compare P/L data if underlying pdfs change?

-

Good practice to map P/L data to predicted percentile

- If observation falls at the 90th percentile of predicted P/L distribution, then mapped value is 0.90

-

This standardizes data to make observations comparable given changes in pdf or portfolio
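Mapping each P/L observation to its predicted percentile can be sketched as follows. The sketch assumes each day's forecast P/L distribution is normal with a known mean and std (a hypothetical choice; any forecast cdf would do). Even though the portfolio's volatility triples halfway through, a correct model produces mapped values that look uniform on (0, 1).

```python
import random
from statistics import NormalDist, mean


def to_percentiles(pl, forecasts):
    """Map each realised P/L to its percentile under that day's forecast distribution.

    forecasts: list of (mean, std) pairs, assuming each day's predicted P/L is normal.
    """
    return [NormalDist(mu, sd).cdf(x) for x, (mu, sd) in zip(pl, forecasts)]


random.seed(1)
forecasts, pl = [], []
for day in range(5000):
    sd = 1.0 if day < 2500 else 3.0      # portfolio (and its pdf) changes halfway
    forecasts.append((0.0, sd))
    pl.append(random.gauss(0.0, sd))     # the model is correct in this simulation

u = to_percentiles(pl, forecasts)
frac_below_1pct = sum(v < 0.01 for v in u) / len(u)
print(round(mean(u), 3), frac_below_1pct)   # roughly 0.5 and 0.01 if the model fits
```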

Measuring Exceptions

-

Binomial Distribution

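- Probability mass function: $f(x) = \binom{T}{x}\, p^x (1-p)^{T-x}$, where $x$ is the number of exceptions in $T$ days and $p = 1 - cl$

- Mean: $E(x) = pT$; variance: $V(x) = p(1-p)T$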

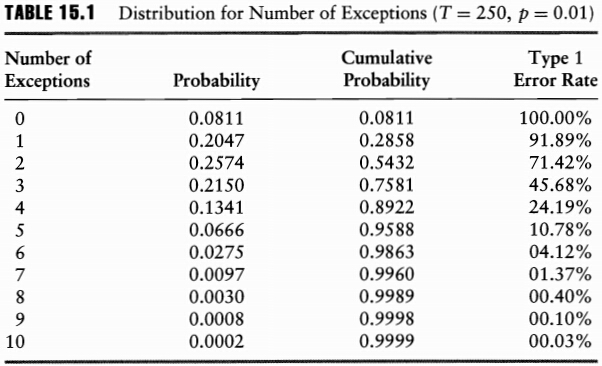

- Example: suppose we want to know the probability of observing $x = 0$ exceptions over $T = 250$ days for a 99% VaR ($p = 0.01$): $f(0) = 0.99^{250} \approx 0.081$

So, we would expect to observe 8.1% of samples with zero exceptions under the null hypothesis. We can repeat this calculation with different values for $x$.

- Normal approximation: for large $T$, $z = \dfrac{x - pT}{\sqrt{p(1-p)T}} \approx N(0, 1)$

-
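The binomial exception calculations above take only a few lines. The sketch assumes one year of daily 99% VaR forecasts ($T = 250$, $p = 0.01$) and reproduces the 8.1% probability of zero exceptions, plus the normal-approximation statistic for a hypothetical count of 8 exceptions.

```python
from math import comb, sqrt


def exceptions_pmf(x, T, p):
    """Binomial probability of exactly x VaR exceptions in T days."""
    return comb(T, x) * p ** x * (1 - p) ** (T - x)


def exceptions_z(x, T, p):
    """Normal-approximation statistic: (x - pT) / sqrt(p(1 - p)T)."""
    return (x - p * T) / sqrt(p * (1 - p) * T)


T, p = 250, 0.01          # one year of daily 99% VaR forecasts
for x in range(6):
    print(x, round(exceptions_pmf(x, T, p), 4))
print("z for 8 exceptions:", round(exceptions_z(8, T, p), 2))
```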

Decision Rule for Backtests

- Type 1 errors: kill the good guy

- Type 2 errors: miss the bad guy

- Power of a test is one minus the type 2 error rate

- Most statistical tests fix the type 1 error rate, say at 5%, and structure the test so as to minimize the type 2 error rate, or maximize the test's power

Example

Consider a VaR measure over a daily horizon defined at the 99% level of confidence

- A higher cutoff point would lower the type 1 error rate

- It is more likely to miss VaR models that are misspecified with higher cutoff points

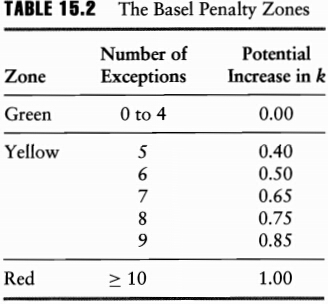
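The trade-off between the two error types as the cutoff moves can be tabulated. This sketch assumes $T = 250$ days, a correct model with $p = 0.01$, and a hypothetical misspecified model whose true exception rate is 3% (really a 97% VaR); the model is rejected when exceptions reach the cutoff.

```python
from math import comb

T = 250
P_GOOD = 0.01   # correct 99% VaR model
P_BAD = 0.03    # hypothetical misspecified model (really a 97% VaR)


def binom_cdf(n, T, p):
    """P(X <= n) for X ~ Binomial(T, p)."""
    return sum(comb(T, x) * p ** x * (1 - p) ** (T - x) for x in range(n + 1))


def type1_error(cutoff):
    """Probability of rejecting the correct model (exceptions >= cutoff)."""
    return 1 - binom_cdf(cutoff - 1, T, P_GOOD)


def type2_error(cutoff):
    """Probability of accepting the bad model (exceptions < cutoff)."""
    return binom_cdf(cutoff - 1, T, P_BAD)


for cutoff in range(4, 11):
    print(cutoff, round(type1_error(cutoff), 4), round(type2_error(cutoff), 4))
```

Raising the cutoff lowers the type 1 error rate but raises the type 2 error rate, as noted above.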

Basel Rule for Backtests

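- The normal multiplier is 3, applying in the green zone (0 to 4 exceptions over 250 days of daily 99% VaR)

- After an incursion into the yellow zone (5 to 9 exceptions), the multiplier is generally raised by a plus factor of 0.40 to 0.85

- An incursion into the red zone (10 or more exceptions) generates an automatic, non-discretionary penalty: the multiplier rises to 4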
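The Basel traffic-light rule can be expressed as a lookup. This sketch assumes 250 daily observations of a 99% VaR and the plus factors of the original Basel backtesting framework; in that framework the yellow-zone plus factor is presumptive rather than automatic, whereas this sketch always applies it.

```python
PLUS_FACTORS = {5: 0.40, 6: 0.50, 7: 0.65, 8: 0.75, 9: 0.85}


def basel_zone(exceptions):
    """Zone and capital multiplier for the number of exceptions in 250 days of 99% VaR."""
    if exceptions < 0:
        raise ValueError("exceptions must be non-negative")
    if exceptions <= 4:
        return "green", 3.0
    if exceptions <= 9:
        return "yellow", 3.0 + PLUS_FACTORS[exceptions]
    return "red", 4.0


for n in (2, 4, 5, 7, 9, 10, 14):
    print(n, basel_zone(n))
```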

Evaluation of Backtesting

-

Exception tests focus only on the frequency of occurrences

-

They ignore the time pattern of losses (e.g., whether exceptions cluster)

Advanced Risk Models: Multivariate

The Big Idea

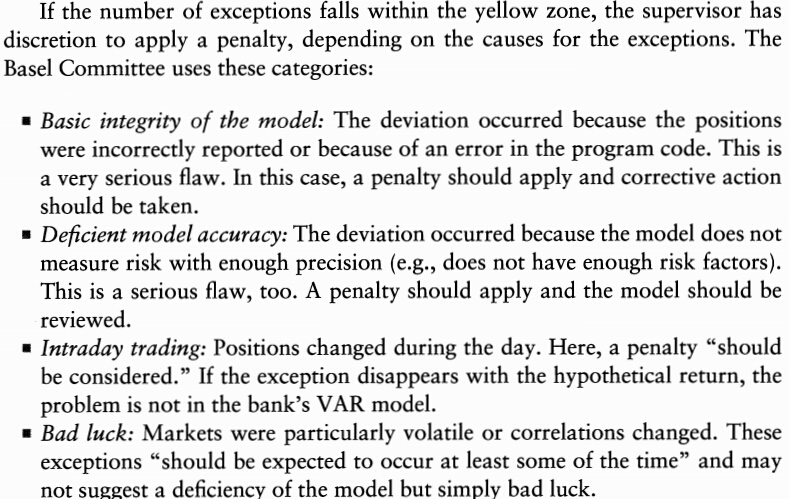

Components of a Multivariate Risk Modeling System

-

Risk system

- portfolio position system

- risk factor modeling system

- aggregation system

-

Describe joint movements in the risk factors

- specify an analytical distribution

- take the joint distribution from empirical observations

-

Aggregation: VaR methods

- delta-normal method

- historical simulation method

- Monte Carlo simulation method

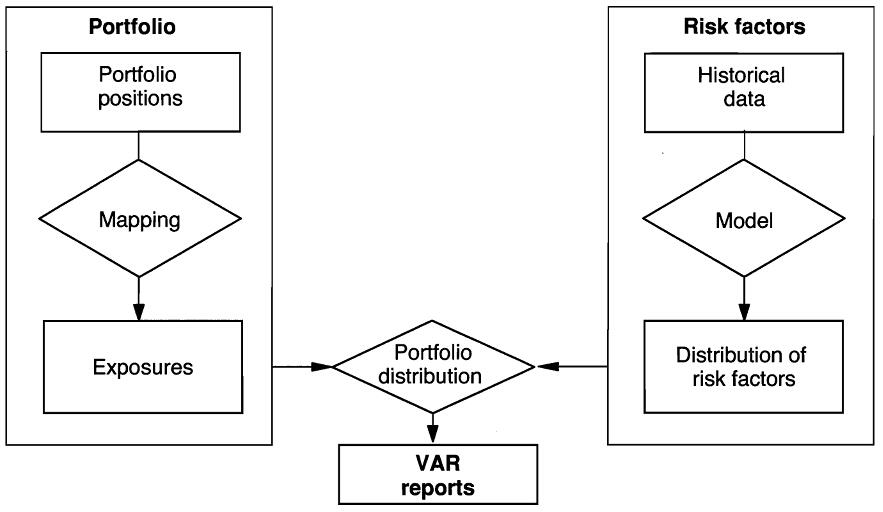
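Of the three aggregation methods listed, delta-normal is the simplest to sketch: with dollar exposures $x$ to the risk factors and factor covariance matrix $\Sigma$, $\mathrm{VaR} = z_{cl}\sqrt{x^\top \Sigma x}$. The exposures, volatilities and correlation below are hypothetical; the example shows the diversification benefit relative to summing stand-alone VaRs, which disappears under perfect correlation.

```python
import numpy as np
from statistics import NormalDist


def delta_normal_var(exposures, vols, corr, cl):
    """Delta-normal VaR: z_cl * sqrt(x' Sigma x), with Sigma built from vols and correlations."""
    x = np.asarray(exposures, float)
    vols = np.asarray(vols, float)
    cov = np.outer(vols, vols) * np.asarray(corr, float)
    return NormalDist().inv_cdf(cl) * float(np.sqrt(x @ cov @ x))


exposures = [1_000_000, 500_000]     # hypothetical dollar exposures to two risk factors
vols = [0.012, 0.020]                # hypothetical daily return volatilities
corr = [[1.0, 0.3], [0.3, 1.0]]

portfolio = delta_normal_var(exposures, vols, corr, 0.99)
stand_alone = [delta_normal_var([e], [v], [[1.0]], 0.99) for e, v in zip(exposures, vols)]
print(round(portfolio), [round(v) for v in stand_alone], round(sum(stand_alone)))
```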

Risk Mapping

Introduction

-

Have assumed so far that each position has its own risk factor, which we model directly

- Distinguish between positions and risk factors

-

However, it is not always possible or desirable to model each position as having its own risk factor

-

Might wish to map our positions onto some smaller set of risk factors

- Might wish to map

- Might wish to map

Reasons for mapping

-

Might not have enough data on our positions

- E.g., might have small runs of Emerging Market data

- Map to risk factors for which we do have data

-

Might wish to cut down on the dimensionality of our covariance matrices

- This is important!

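- With $n$ positions, the covariance matrix has $n(n+1)/2$ separate terms ($n$ variances and $n(n-1)/2$ covariances)

- As $n$ rises, the number of terms grows with $n^2$, so the matrix quickly becomes large and hard to estimate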

-

Need to keep dimensionality down to avoid computational problems too – rank problems, etc.

Stages of mapping

-

Construct a set of benchmark instruments or factors

- Might include key bonds, equities, etc.

-

Collect data on their volatilities and correlations

-

Derive synthetic substitutes for our positions, in terms of these benchmarks

- This substitution is the actual mapping

-

Construct VaR/CVaR of mapped portfolio

-

Take this as a measure of the VaR/CVaR of actual portfolio

Selecting Core Instruments

-

Usual approach is to select key core instruments

- Key equity indices, key zero bonds, key currencies, etc.

-

Want to have a rich enough set of these proxies, but don’t want so many that we run into covariance matrix problems

-

RiskMetrics core instruments

- Equity positions represented by equivalent amounts in key equity indices

- Fixed income positions represented by combinations of cashflows of a limited number of maturities

- FX positions represented by relevant amounts in 'core' currencies

- Commodity positions represented by amounts of selected standardised futures positions

Mapping with Principal Components

-

Can use PCA to identify key factors

-

Small number of PCs will explain most movement in our data set

-

PCA can cut down dramatically on dimensionality of our problem, and cut down on number of covariance terms

- E.g., with 50 original variables, have $50 \times 51 / 2 = 1{,}275$ separate covariance terms

- With 3 PCs, have only 3 separate covariance terms (the PCs are uncorrelated, so only their variances remain)
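PCA mapping can be sketched with an eigen-decomposition of the sample covariance matrix. The yield-curve data here are simulated from three hypothetical factors (level, slope, curvature) plus small noise, so a few PCs should explain almost all the variance; the scores on the first three PCs are uncorrelated, leaving 3 variances in place of the 55 terms of the original 10 x 10 matrix.

```python
import numpy as np

rng = np.random.default_rng(7)
n_obs = 1000
maturities = np.arange(1, 11)                  # 10 hypothetical yield-curve vertices
level = np.ones(10)
slope = (maturities - maturities.mean()) / maturities.std()
curve = slope ** 2 - (slope ** 2).mean()

# Simulated daily yield changes driven by three factors plus small noise
factors = rng.normal(0.0, [1.0, 0.5, 0.2], size=(n_obs, 3))
changes = factors @ np.vstack([level, slope, curve]) + rng.normal(0.0, 0.05, size=(n_obs, 10))

cov = np.cov(changes, rowvar=False)
eigvals, eigvecs = np.linalg.eigh(cov)         # eigh returns ascending eigenvalues
order = np.argsort(eigvals)[::-1]
eigvals, eigvecs = eigvals[order], eigvecs[:, order]
explained = eigvals / eigvals.sum()
print(np.round(np.cumsum(explained)[:4], 4))

# Map the data onto the first 3 PCs: their scores are uncorrelated in sample
scores = (changes - changes.mean(axis=0)) @ eigvecs[:, :3]
print(np.round(np.cov(scores, rowvar=False), 6))
print(10 * 11 // 2, "covariance terms originally vs 3 variances after mapping")
```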

Mapping Positions to Risk Factors

-

Most positions can be decomposed into primitive building blocks

-

Instead of trying to map each type of position, we can map in terms of portfolios of building blocks

-

Building blocks are

- Basic FX

- Basic equity

- Basic fixed-income

- Basic commodity

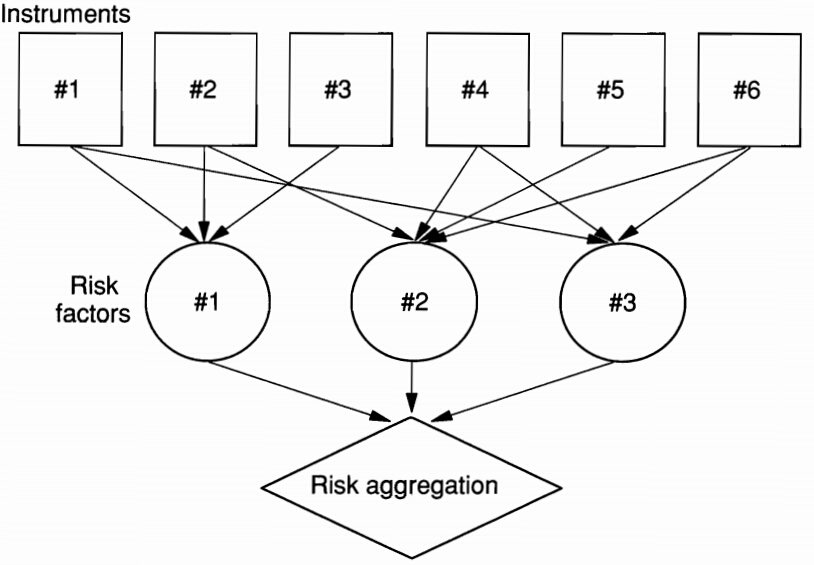

A General Example of Risk Mapping

- Replace each if the

- Aggregate the

- Derive the distribution of the portfolio return

Example: Mapping with Factor Models

-

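Decompose stock return $R_i = \alpha_i + \beta_i R_M + \epsilon_i$ into

- a constant term $\alpha_i$ (not important for risk management purposes)

- a component due to the market, $\beta_i R_M$

- a residual term $\epsilon_i$

-

The portfolio return $R_p = \sum_i w_i R_i$, with portfolio beta $\beta_p = \sum_i w_i \beta_i$

- Mean: $E(R_p) = \sum_i w_i \alpha_i + \beta_p E(R_M)$

- Variance: $V(R_p) = \beta_p^2 V(R_M) + \sum_i w_i^2 \sigma^2_{\epsilon_i}$, assuming residuals are uncorrelated with each other and with the market

- For equally weighted portfolio: $V(R_p) = \beta_p^2 V(R_M) + \frac{1}{N}\bar{\sigma}^2_{\epsilon}$, where $\bar{\sigma}^2_{\epsilon}$ is the average residual variance

- The mapping: for large $N$ the residual term vanishes, so the portfolio maps onto the market factor, $R_p \approx \sum_i w_i \alpha_i + \beta_p R_M$

- Mean: $E(R_p) \approx \sum_i w_i \alpha_i + \beta_p E(R_M)$, and $V(R_p) \approx \beta_p^2 V(R_M)$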

-

This approach is useful especially when there is no return history
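The single-index variance split can be computed directly, which also shows why the mapping onto the market factor works for diversified portfolios. All inputs (market volatility 20%, betas of 1.1, residual volatilities of 30%) are hypothetical; with equal weights the specific part shrinks like $1/N$.

```python
import numpy as np


def single_index_variance(weights, betas, resid_sd, market_sd):
    """Split V(R_p) into systematic beta_p^2 V(R_M) and specific sum w_i^2 sigma_eps_i^2."""
    w = np.asarray(weights, float)
    beta_p = float(w @ np.asarray(betas, float))
    systematic = beta_p ** 2 * market_sd ** 2
    specific = float(np.sum(w ** 2 * np.asarray(resid_sd, float) ** 2))
    return systematic, specific


market_sd = 0.20                               # hypothetical annual market volatility
for n in (10, 100, 1000):
    w = np.full(n, 1.0 / n)                    # equally weighted
    systematic, specific = single_index_variance(w, np.full(n, 1.1), np.full(n, 0.30), market_sd)
    share = specific / (systematic + specific)
    print(n, round(systematic, 5), round(specific, 6), round(share, 4))
```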

Example: Mapping with Fixed-Income Portfolios

-

Risk-free bond portfolio

-

maturity mapping: replace the current value of each bond by a position on a risk factor with the same maturity

-

duration mapping: maps the bond on a zero-coupon risk factor with a maturity equal to the duration of the bond

-

cash flow mapping: maps the current value of each bond payment on a zero-coupon risk factor with maturity equal to the time to wait for each cash flow

-

Corporate bond portfolio

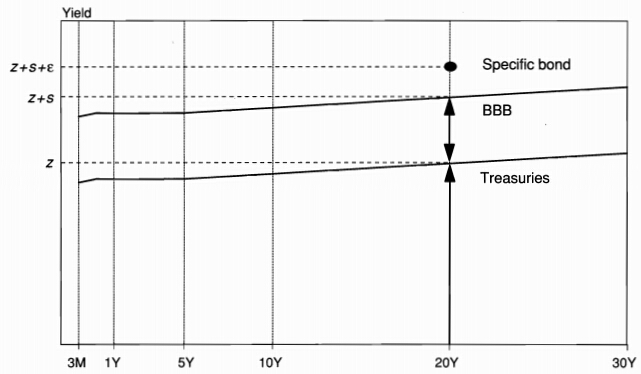

- Decomposition:

- the movement in the value of bond price

- the portfolio:

- aggregation:

- Variance:
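The three risk-free mapping schemes described above (maturity, duration and cash flow mapping) can be compared on a single bond. The zero-coupon yields are hypothetical, and the sketch assumes the bond's payment dates fall exactly on the vertices; in practice, off-vertex cash flows must be split between neighbouring vertices. The duration here is computed from zero-curve PV weights.

```python
def bond_cashflows(face, coupon_rate, years):
    """(time, cashflow) pairs for an annual-coupon bond."""
    return [(t, face * coupon_rate + (face if t == years else 0.0)) for t in range(1, years + 1)]


zero_yields = {1: 0.040, 2: 0.043, 3: 0.045, 4: 0.047, 5: 0.048}   # hypothetical vertices


def cashflow_mapping(cfs, zeros):
    """PV of each payment, placed on the zero-coupon vertex with the same maturity."""
    return {t: cf / (1 + zeros[t]) ** t for t, cf in cfs}


cfs = bond_cashflows(100.0, 0.06, 5)
mapped = cashflow_mapping(cfs, zero_yields)
pv = sum(mapped.values())

maturity_map = {5: pv}                                        # whole PV on the 5-year vertex
duration = sum(t * v for t, v in mapped.items()) / pv         # PV-weighted average maturity
duration_map = {round(duration, 3): pv}                       # whole PV on a zero at the duration
print({t: round(v, 3) for t, v in mapped.items()})
print(maturity_map, duration_map)
```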

Choice of Risk Factors

It should be driven by the nature of the portfolio:

-

portfolio of stocks that have many small positions well dispersed across sectors

-

portfolios with a small number of stocks concentrated in one sector

-

an equity market-neutral portfolio

-

Mapping Complex Positions

- Complex positions are handled by applying financial engineering theory

- Reverse-engineer complex positions into portfolios of simple positions

- Map complex positions in terms of collections of synthetic simple positions

- Some examples, using FE/FI theory:

- Coupon-paying bonds: can regard as portfolios of zeros

- FRAs: equivalent to spreads in zeros of different maturities

- FRNs: equivalent to a zero with maturity equal to period to next coupon payment (because it reprices at par)

- Vanilla IR swaps: equivalent to portfolio long a fixed-coupon bond and short a FRN

- Structured notes: equivalent to combinations of IR swaps and conventional FRNs

- FX forwards: equivalent to spread between foreign currency bond and domestic currency bond

- Commodity, equity and FX swaps: combinations of spread between forward/futures and bond position

Dealing with Optionality

-

All these positions can be mapped with linear based mapping systems because of their being (close to) linear

-

These approaches do not work well with optionality

- Non-linearity of options positions can lead to major errors in mapping

-

With non-linearity, we need to resort to more sophisticated methods, e.g., delta-gamma and duration-convexity
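To see why a linear (delta) mapping misleads for options, the sketch below compares full Black-Scholes revaluation of a call with the delta-only and delta-gamma approximations. The option parameters and the 10% price fall are hypothetical, and Black-Scholes is an assumed pricing model chosen for illustration.

```python
import math

def norm_cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def norm_pdf(x):
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def bs_call(S, K, r, sigma, T):
    """Black-Scholes price of a European call (no dividends)."""
    d1 = (math.log(S / K) + (r + 0.5 * sigma**2) * T) / (sigma * math.sqrt(T))
    d2 = d1 - sigma * math.sqrt(T)
    return S * norm_cdf(d1) - K * math.exp(-r * T) * norm_cdf(d2)

def bs_call_delta_gamma(S, K, r, sigma, T):
    """Black-Scholes delta and gamma of the same call."""
    d1 = (math.log(S / K) + (r + 0.5 * sigma**2) * T) / (sigma * math.sqrt(T))
    return norm_cdf(d1), norm_pdf(d1) / (S * sigma * math.sqrt(T))

# Hypothetical at-the-money call and a 10% fall in the underlying.
S, K, r, sigma, T = 100.0, 100.0, 0.02, 0.25, 0.5
dS = -10.0

exact = bs_call(S + dS, K, r, sigma, T) - bs_call(S, K, r, sigma, T)  # full revaluation
delta, gamma = bs_call_delta_gamma(S, K, r, sigma, T)
delta_only = delta * dS                           # linear mapping
delta_gamma = delta * dS + 0.5 * gamma * dS**2    # adds the second-order term

print(f"exact={exact:.4f} delta={delta_only:.4f} delta-gamma={delta_gamma:.4f}")
```

The delta-only estimate overstates the loss on the long call because it ignores the positive gamma; the second-order term removes most of that error.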

Joint Distribution of Risk Factors

-

A copula is a function of the values of the marginal distribution functions

-

Sklar's theorem: for any joint distribution function there exists a copula that links the marginal distribution functions: $F(x_1, \dots, x_n) = C\left(F_1(x_1), \dots, F_n(x_n)\right)$

-

This result enables us to construct joint density functions from the marginal density functions and the copula function

-

The copula takes account of the dependence structure

-

To model a joint density function: specify the marginals, choose a copula, and then apply the copula function
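The three-step recipe (marginals, copula, combine) can be sketched with a Gaussian copula. This is a minimal sketch, assuming exponential marginals with hypothetical rates 2.0 and 0.5 and a copula correlation of 0.7; none of these choices come from the slides.

```python
import math
import random

def norm_cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def exponential_ppf(u, rate):
    """Inverse CDF of an exponential marginal (a hypothetical choice of marginal)."""
    return -math.log(1.0 - u) / rate

def sample_gaussian_copula(n, rho, rate_x, rate_y, seed=0):
    """Draw n pairs with exponential marginals joined by a Gaussian copula."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        # Correlated standard normals with correlation rho.
        z1 = rng.gauss(0.0, 1.0)
        z2 = rho * z1 + math.sqrt(1.0 - rho**2) * rng.gauss(0.0, 1.0)
        # Copula step: map to dependent uniforms.
        u, v = norm_cdf(z1), norm_cdf(z2)
        # Marginal step: push each uniform through its own inverse CDF.
        pairs.append((exponential_ppf(u, rate_x), exponential_ppf(v, rate_y)))
    return pairs

def pearson(xs, ys):
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)

pairs = sample_gaussian_copula(20_000, rho=0.7, rate_x=2.0, rate_y=0.5)
xs, ys = zip(*pairs)
print(f"mean x={sum(xs)/len(xs):.3f} (target 0.5)  mean y={sum(ys)/len(ys):.3f} (target 2.0)")
print(f"correlation={pearson(xs, ys):.3f}")
```

Swapping the copula (e.g. a t-copula) changes only the middle step; swapping the marginals changes only the last step.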

Common Copulas

-

Independence (product) copula: $C(u, v) = uv$

- Good for independent random variables

-

Minimum copula

- Good for comonotonic variables

-

Maximum copula

- Good for countermonotonic variables

-

Gaussian copulas

- Derived from the multivariate normal distribution; the copula function has no closed form

-

t-copulas

- Derived from the multivariate Student-t distribution

-

Gumbel copulas, Archimedean copulas

-

Extreme value copulas

- Arising from EVT

Tail Dependence

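- Upper & lower tail dependence coefficients, defined as limiting conditional probabilities: $\lambda_u = \lim_{q \to 1^-} P\left(Y > F_Y^{-1}(q) \mid X > F_X^{-1}(q)\right)$ and $\lambda_l = \lim_{q \to 0^+} P\left(Y \le F_Y^{-1}(q) \mid X \le F_X^{-1}(q)\right)$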

-

When

-

Gives an idea of how one variable behaves in the limit, given a high value of another

Extreme Value Theory

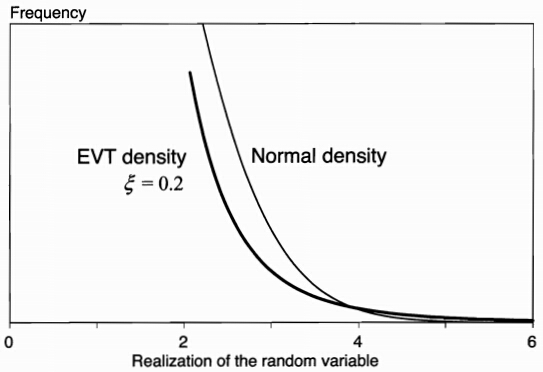

Peaks Over Threshold Approach & the GP Distribution

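The limit distribution of the excesses $x$ of losses over a high threshold $u$ is $G_{\xi,\beta}(x) = 1 - \left(1 + \xi x/\beta\right)^{-1/\xi}$ for $\xi \neq 0$, and $G_{0,\beta}(x) = 1 - \exp(-x/\beta)$ for $\xi = 0$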

-

It is called the generalized Pareto (GP) distribution

- The GP distribution is only asymptotically valid (i.e., as the threshold $u$ gets large)

-

It subsumes other distributions as special cases

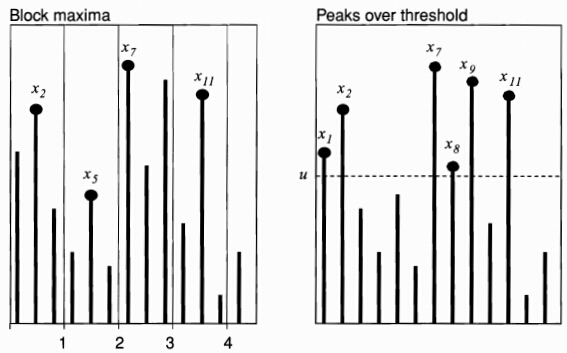

Block Maxima vs. Peaks over Threshold

- Block maxima approach: group the sample into successive blocks and identify the maximum of each block

- The block maxima are modelled with the generalized extreme value (GEV) distribution
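The difference between the two approaches is in which observations are kept. A minimal sketch on hypothetical loss data (the numbers are illustrative only): block maxima keep one value per block, while POT keeps every excess over the threshold.

```python
def block_maxima(losses, block_size):
    """Block maxima: the largest loss in each complete block of consecutive observations."""
    n_blocks = len(losses) // block_size
    return [max(losses[i * block_size:(i + 1) * block_size]) for i in range(n_blocks)]

def peaks_over_threshold(losses, threshold):
    """POT: the excesses x - u of every loss x above the threshold u."""
    return [x - threshold for x in losses if x > threshold]

# Hypothetical daily losses (illustrative numbers only).
losses = [1.2, 0.3, 2.5, 0.8, 3.1, 0.4, 1.9, 2.2]
print(block_maxima(losses, 4))            # one maximum per block of 4, input to a GEV fit
print(peaks_over_threshold(losses, 2.0))  # all excesses over u = 2.0, input to a GP fit
```

Here the loss of 2.2 is discarded by block maxima (its block also contains 3.1) but retained by POT, which is why POT tends to use scarce tail data more efficiently.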

EVT vs. Normal Densities

VaR and EVT

Closed-form solutions for VaR and CVaR rely heavily on the estimation of the GP parameters (the shape $\xi$ and the scale $\beta$)
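For reference, the standard POT closed forms are $\text{VaR}_\alpha = u + \frac{\beta}{\xi}\left[\left(\frac{n}{N_u}(1-\alpha)\right)^{-\xi} - 1\right]$ and $\text{CVaR}_\alpha = \frac{\text{VaR}_\alpha}{1-\xi} + \frac{\beta - \xi u}{1-\xi}$ (for $\xi < 1$), where $n$ is the sample size and $N_u$ the number of exceedances of $u$. A minimal sketch, assuming $\xi$ and $\beta$ have already been estimated; the fitted values below are hypothetical.

```python
import math

def gp_var_es(u, beta, xi, n, n_u, confidence):
    """VaR and expected shortfall (CVaR) implied by a GP tail fitted above threshold u.

    n is the sample size, n_u the number of losses exceeding u. ES needs xi < 1.
    """
    tail_ratio = (n / n_u) * (1.0 - confidence)  # (n / N_u)(1 - alpha)
    if abs(xi) < 1e-12:
        var = u - beta * math.log(tail_ratio)    # xi -> 0 (exponential) limit
    else:
        var = u + (beta / xi) * (tail_ratio ** (-xi) - 1.0)
    es = var / (1.0 - xi) + (beta - xi * u) / (1.0 - xi)
    return var, es

# Hypothetical fitted values: 1000 losses, 50 above u = 2.0, beta = 0.8, xi = 0.2.
var99, es99 = gp_var_es(u=2.0, beta=0.8, xi=0.2, n=1000, n_u=50, confidence=0.99)
print(f"99% VaR = {var99:.4f}, 99% ES = {es99:.4f}")
```

Both outputs move sharply with $\xi$, which is why the estimation step matters so much.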

-

Maximum likelihood

- define a cutoff point $u$

- only consider losses beyond the cutoff point and maximize the GP likelihood of their excesses

-

Method of moments

- fitting the parameters so that the GP moments equal the observed moments

-

Hill's estimator

- sort all observations from highest to lowest

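- the tail index is estimated from the $k$ largest observations: $\hat{\xi} = \frac{1}{k}\sum_{i=1}^{k} \ln X_{(i)} - \ln X_{(k+1)}$, where $X_{(1)} \ge X_{(2)} \ge \dots$ are the sorted observations

- no theory tells us how to choose $k$

- we may plot $\hat{\xi}$ against $k$ (a Hill plot) and choose $k$ in a region where the estimate is stable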
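Two of the estimators above can be sketched in a few lines. This is a minimal sketch on simulated data (exponential excesses with true $\xi = 0$, and Pareto data with true tail index 0.5); the data-generating choices are hypothetical and only serve to check the estimators. The method of moments uses the GP mean $\beta/(1-\xi)$ and variance $\beta^2/((1-\xi)^2(1-2\xi))$, so it requires $\xi < 1/2$.

```python
import math
import random

def gp_method_of_moments(excesses):
    """Match GP mean beta/(1-xi) and variance beta^2/((1-xi)^2 (1-2xi)) to the sample.

    Only meaningful when xi < 1/2, so that the GP variance exists.
    """
    n = len(excesses)
    m = sum(excesses) / n
    v = sum((x - m) ** 2 for x in excesses) / (n - 1)
    xi = 0.5 * (1.0 - m * m / v)
    beta = 0.5 * m * (1.0 + m * m / v)
    return xi, beta

def hill_estimator(data, k):
    """Hill estimate of the tail index from the k largest (positive) observations."""
    x = sorted(data, reverse=True)  # highest to lowest
    if not 1 <= k < len(x) or x[k] <= 0:
        raise ValueError("need 1 <= k < n and positive order statistics")
    return sum(math.log(x[i]) for i in range(k)) / k - math.log(x[k])

rng = random.Random(1)
# Hypothetical test data: exponential excesses (true xi = 0, beta = 1) ...
excesses = [rng.expovariate(1.0) for _ in range(20_000)]
# ... and Pareto-tailed data with true tail index xi = 0.5.
pareto = [(1.0 - rng.random()) ** -0.5 for _ in range(20_000)]

print(gp_method_of_moments(excesses))
# A Hill plot would show hill_estimator(pareto, k) over a range of k values.
for k in (200, 1000, 2000):
    print(k, round(hill_estimator(pareto, k), 3))
```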

Problems with EVT

-

Estimates are sensitive to changes in the sample

-

Results depend on assumptions and estimation method

-

It relies on historical data

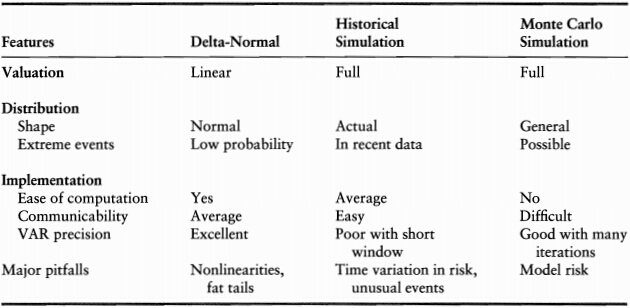

VaR Methods

Delta-Normal

-

Assumptions

- portfolio exposures are linear

- risk factors are jointly normally distributed

-

The VaR

- portfolio return is normally distributed

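- the portfolio variance: $\sigma_p^2 = \mathbf{x}^\top \Sigma \mathbf{x}$, where $\mathbf{x}$ is the vector of exposures and $\Sigma$ the covariance matrix of risk-factor returns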

- VaR is directly obtained from the standard normal deviate

- diversified VaR vs. undiversified VaR

-

Advantages & Drawbacks

- advantages: simple, closed-form, more precise, less sampling variability

- drawbacks: cannot account for nonlinear effects (options); underestimates the occurrence of large observations (normality assumption)
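The delta-normal recipe can be sketched directly from $\sigma_p^2 = \mathbf{x}^\top \Sigma \mathbf{x}$. This is a minimal sketch, assuming dollar exposures, a daily covariance matrix, zero expected returns and i.i.d. returns for square-root-of-time scaling; the two-factor inputs are hypothetical. Undiversified VaR is taken as the sum of stand-alone VaRs.

```python
import math
from statistics import NormalDist

def delta_normal_var(x, cov, confidence, horizon_days=1):
    """Delta-normal VaR: z_c * sqrt(x' Sigma x) * sqrt(horizon).

    x: dollar exposures to each risk factor; cov: daily covariance matrix of
    factor returns. Assumes zero expected returns and i.i.d. daily returns.
    """
    n = len(x)
    port_var = sum(x[i] * cov[i][j] * x[j] for i in range(n) for j in range(n))
    z = NormalDist().inv_cdf(confidence)
    scale = math.sqrt(horizon_days)
    diversified = z * math.sqrt(port_var) * scale
    # Undiversified VaR: sum of stand-alone VaRs (equivalent to all correlations = 1).
    undiversified = sum(z * abs(x[i]) * math.sqrt(cov[i][i]) for i in range(n)) * scale
    return diversified, undiversified

# Hypothetical two-factor example: daily vols 1% and 2%, correlation 0.3.
vols, rho = [0.01, 0.02], 0.3
cov = [[vols[0] ** 2, rho * vols[0] * vols[1]],
       [rho * vols[0] * vols[1], vols[1] ** 2]]
div, undiv = delta_normal_var([1_000_000.0, 500_000.0], cov, 0.99)
print(f"diversified VaR = {div:,.0f}, undiversified VaR = {undiv:,.0f}")
```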

Historical Simulation

- The Idea: replays a "tape" of history on the current positions

- go back in time (e.g. over the past 250 days)

- project hypothetical factor values using the factor movements

- derive the portfolio values

- The VaR

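- current portfolio value as function of current risk factors: $P_t = P(f_{1,t}, \dots, f_{N,t})$

- sampling factor movements from the historical distribution: $\Delta f_i^k = f_{i,k} - f_{i,k-1}$, $k = 1, \dots, T$

- construct hypothetical factor values: $f_i^k = f_{i,t} + \Delta f_i^k$

- hypothetical portfolio value: $P^k = P(f_1^k, \dots, f_N^k)$

- portfolio return: $R^k = (P^k - P_t)/P_t$

- VaR is obtained from the difference between the average and the c-th quantile: $\text{VaR} = P_t\left[E(R) - Q(R, c)\right]$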


- Advantages & Drawbacks

- advantages: no specific distributional assumption, intuitive

- drawbacks: relies on a short historical moving window to infer movements in market prices
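The historical-simulation steps listed above can be sketched with full revaluation. This is a minimal sketch, assuming additive (absolute) factor changes and one common empirical-quantile convention (the ceil(T(1-c))-th worst return); the `pricer` argument and the one-asset example are hypothetical.

```python
import math

def historical_var(current_factors, history, pricer, confidence):
    """Historical-simulation VaR with full revaluation (illustrative sketch).

    history: list of past factor vectors, oldest first (T+1 dates give T moves).
    pricer: function mapping a factor vector to a portfolio value.
    Returns VaR = P_t * (mean return - lower quantile), as a positive amount.
    """
    p_now = pricer(current_factors)
    returns = []
    for prev, curr in zip(history[:-1], history[1:]):
        # Replay each historical factor move on today's factor levels.
        moved = [f + (c - p) for f, p, c in zip(current_factors, prev, curr)]
        returns.append((pricer(moved) - p_now) / p_now)
    returns.sort()
    # Empirical lower quantile: the ceil(T * (1 - c))-th worst return (one common convention).
    idx = max(0, math.ceil(len(returns) * (1 - confidence)) - 1)
    mean = sum(returns) / len(returns)
    return p_now * (mean - returns[idx])

# Hypothetical example: 10 units of one asset, 7 days of prices give 6 historical moves.
prices = [[100.0], [101.0], [99.0], [102.0], [98.0], [100.0], [103.0]]
var80 = historical_var([100.0], prices, lambda f: 10 * f[0], confidence=0.80)
print(f"80% HS VaR = {var80:.2f}")
```

Because the pricer is called on every scenario, nonlinear positions are handled without any delta or gamma approximation.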

Monte Carlo Simulation

-

The Idea

- similar to the historical simulation method

- except that the movements in risk factors are generated from a prespecified distribution

- the risk manager needs to specify the marginal distribution of risk factors as well as their copula

-

Advantages & Drawbacks

- advantages: most flexible

- drawbacks: computational burden, subject to model risk, sampling variability

-

The Monte Carlo VaR should converge to the delta-normal VaR if all risk factors are normal and exposures are linear
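The convergence claim can be checked by simulation. This is a minimal sketch, assuming a hypothetical linear two-factor position with jointly normal daily returns; both VaRs are measured as mean minus lower quantile, matching the historical-simulation definition.

```python
import math
import random
from statistics import NormalDist

def mc_var_linear(x, vols, rho, confidence, n_sims=100_000, seed=42):
    """Monte Carlo VaR for a linear two-factor position with jointly normal returns."""
    rng = random.Random(seed)
    pnl = []
    for _ in range(n_sims):
        z1 = rng.gauss(0.0, 1.0)
        z2 = rho * z1 + math.sqrt(1.0 - rho**2) * rng.gauss(0.0, 1.0)  # 2x2 Cholesky
        pnl.append(x[0] * vols[0] * z1 + x[1] * vols[1] * z2)
    pnl.sort()
    idx = max(0, math.ceil(n_sims * (1 - confidence)) - 1)
    return sum(pnl) / n_sims - pnl[idx]  # mean minus lower quantile

def delta_normal_var_2(x, vols, rho, confidence):
    """Closed-form delta-normal VaR for the same two-factor position."""
    s1, s2 = x[0] * vols[0], x[1] * vols[1]
    sd = math.sqrt(s1**2 + s2**2 + 2 * rho * s1 * s2)
    return NormalDist().inv_cdf(confidence) * sd

# Hypothetical inputs.
x, vols, rho = [1_000_000.0, 500_000.0], [0.01, 0.02], 0.3
mc = mc_var_linear(x, vols, rho, 0.95)
dn = delta_normal_var_2(x, vols, rho, 0.95)
print(f"MC VaR = {mc:,.0f}  delta-normal VaR = {dn:,.0f}")
```

The remaining gap is sampling variability, which shrinks roughly as one over the square root of the number of simulations.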

Comparison of Methods

Limitations of Risk Systems


-

Illiquid Assets

-

Losses Beyond VaR

-

Issues with Mapping

-

Reliance on Recent Historical Data

-

Procyclicality

-

Crowded Trades

Class Exercise

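The weekly returns of stocks A and B over the most recent 30 weeks are as follows (unit: 1%):

A: -3,2,4,5,0,1,17,-13,18,5,10,-9,-2,1,5,-9,6,-6,3,7,5,10,10,-2,4,-4,-7,9,3,2;

B: 4,3,3,5,4,2,-1,0,5,-3,1,-4,5,4,2,1,-6,3,-5,-5,2,-1,3,4,4,-1,3,2,4,3.

A financial institution builds a portfolio of A and B in a 1:1 ratio.

(a) Calculate the portfolio's VaR and CVaR at the 90% confidence level.

(b) Verify by calculation whether VaR and CVaR are coherent measures of risk.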
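A sketch for checking the arithmetic, under stated assumptions: "1:1" is read as equal weights, and historical VaR/CVaR use one common convention (textbooks differ): with $n$ observations the tail holds $k = n(1-c)$ worst outcomes, VaR is the $k$-th worst loss and CVaR the average of those $k$ losses. Note that a single sample can only test the subadditivity inequality for this data; it cannot establish coherence in general.

```python
# Weekly returns in % (from the exercise).
A = [-3, 2, 4, 5, 0, 1, 17, -13, 18, 5, 10, -9, -2, 1, 5, -9, 6, -6, 3, 7, 5, 10, 10, -2, 4, -4, -7, 9, 3, 2]
B = [4, 3, 3, 5, 4, 2, -1, 0, 5, -3, 1, -4, 5, 4, 2, 1, -6, 3, -5, -5, 2, -1, 3, 4, 4, -1, 3, 2, 4, 3]

# Assumption: "1:1" means equal weights (50% in each stock).
port = [0.5 * a + 0.5 * b for a, b in zip(A, B)]

def hist_var_cvar(returns, confidence):
    """Historical VaR and CVaR, both reported as positive losses (in %).

    Convention (an assumption): the tail holds k = n * (1 - confidence) worst
    outcomes; VaR is the k-th worst loss, CVaR the average of the k worst losses.
    """
    losses = sorted((-r for r in returns), reverse=True)
    k = round(len(losses) * (1 - confidence))  # round() guards against float error
    return losses[k - 1], sum(losses[:k]) / k

var_p, cvar_p = hist_var_cvar(port, 0.90)
var_a, cvar_a = hist_var_cvar([0.5 * a for a in A], 0.90)
var_b, cvar_b = hist_var_cvar([0.5 * b for b in B], 0.90)

print(f"(a) portfolio VaR = {var_p:.2f}%, CVaR = {cvar_p:.2f}%")
# (b) Subadditivity check for this sample: rho(A/2 + B/2) <= rho(A/2) + rho(B/2)?
print("VaR  subadditive here:", var_p <= var_a + var_b)
print("CVaR subadditive here:", cvar_p <= cvar_a + cvar_b)
```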

Class Exercise

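A trading portfolio consists of a USD 300,000 investment in gold and a USD 500,000 investment in silver. Assume the daily volatilities of the two assets are 1.8% and 1.2% respectively, and the correlation between their returns is 0.6.

(a) What is the 10-day 97.5% VaR of the portfolio?

(b) By how much does diversification reduce the VaR?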
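A sketch for checking the arithmetic, assuming normally distributed daily returns with zero mean and i.i.d. days, so the 10-day figure follows the square-root-of-time rule. The diversification benefit is taken as the sum of the stand-alone VaRs minus the portfolio VaR.

```python
import math
from statistics import NormalDist

# Exercise inputs.
gold, silver = 300_000.0, 500_000.0
vol_gold, vol_silver = 0.018, 0.012   # daily volatilities
rho = 0.6
horizon_days, confidence = 10, 0.975

# Daily dollar standard deviations of each position and of the portfolio.
sd_gold = gold * vol_gold
sd_silver = silver * vol_silver
sd_port = math.sqrt(sd_gold**2 + sd_silver**2 + 2 * rho * sd_gold * sd_silver)

z = NormalDist().inv_cdf(confidence)   # standard normal deviate, about 1.96
scale = math.sqrt(horizon_days)        # square-root-of-time rule

var_port = z * sd_port * scale                          # (a) diversified VaR
var_undiversified = z * (sd_gold + sd_silver) * scale   # sum of stand-alone VaRs
diversification_benefit = var_undiversified - var_port  # (b)

print(f"(a) 10-day 97.5% VaR: {var_port:,.0f}")
print(f"(b) reduction from diversification: {diversification_benefit:,.0f}")
```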

Class Exercise

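Suppose we backtest a VaR model using 100 days of data. The VaR confidence level is 99%, and we observe 5 exceptions in the 100 days. If we reject the VaR model on this basis, what is the probability of a Type I error?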
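A sketch for checking the arithmetic. A Type I error means rejecting a correct model. If the 99% VaR model is correct, the number of exceptions in 100 days is Binomial(100, 0.01); a rule that rejects on 5 or more exceptions therefore rejects a correct model with probability $P(X \ge 5)$. Independence of exceptions across days is assumed.

```python
from math import comb

def prob_at_least(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return 1.0 - sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k))

# Under a correct 99% VaR model, exceptions ~ Binomial(100, 0.01).
type_i_error = prob_at_least(5, 100, 0.01)
print(f"Type I error probability: {type_i_error:.4%}")
```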