
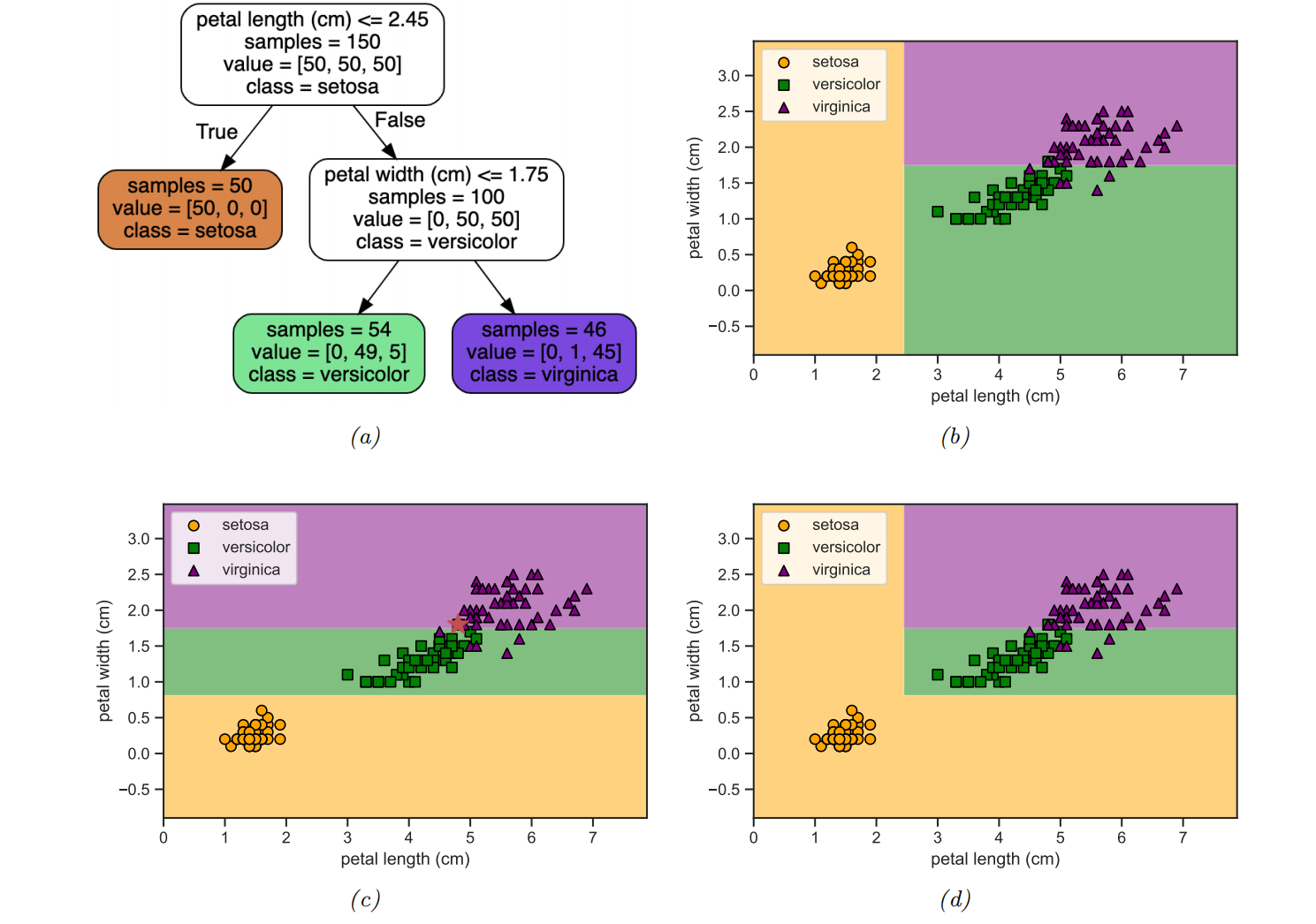

- For classification problems, the leaves contain a distribution over the class labels, rather than just the mean response.

Model fitting

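- minimizing the following loss:

$$\mathcal{L}(\theta) = \sum_{n=1}^{N} \ell(y_n, f(x_n; \theta)) = \sum_{j=1}^{J} \sum_{x_n \in R_j} \ell(y_n, w_j)$$

where $R_j$ is the region of input space assigned to leaf $j$ and $w_j$ is the response predicted at that leaf

- this loss is not differentiable, because we need to learn the discrete tree structure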

- finding the optimal partitioning of the data is NP-complete

- the standard practice is to use a greedy procedure, in which we iteratively grow the tree one node at a time.

- three popular implementations: CART, C4.5, and ID3.
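The greedy step can be made concrete with a small sketch. The code below (plain Python, hypothetical helper names `sse` and `best_split`, regression with squared-error cost assumed) scans every feature and every midpoint threshold and keeps the split whose two children have the lowest total cost. Growing a tree means applying it recursively to each child until a stopping rule fires. It is a brute-force illustration, not how CART, C4.5 or ID3 are actually implemented.

```python
def sse(values):
    """Sum of squared deviations from the mean (the regression cost of one node)."""
    if not values:
        return 0.0
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values)


def best_split(rows, ys):
    """One greedy step: return the (feature, threshold) pair whose children have the
    lowest total squared error, or None if no split beats keeping the node as a leaf."""
    best_cost, best = sse(ys), None
    for j in range(len(rows[0])):
        values = sorted(set(r[j] for r in rows))
        # Candidate thresholds are midpoints between consecutive distinct values.
        for lo, hi in zip(values, values[1:]):
            thr = (lo + hi) / 2
            left = [y for r, y in zip(rows, ys) if r[j] <= thr]
            right = [y for r, y in zip(rows, ys) if r[j] > thr]
            cost = sse(left) + sse(right)
            if cost < best_cost:
                best_cost, best = cost, (j, thr)
    return best


rows = [[1, 0], [0, 0], [1, 1], [0, 1]]
ys = [0.0, 0.0, 5.0, 5.0]
print(best_split(rows, ys))  # (1, 0.5): feature 1 separates the targets perfectly
```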

The big idea of greedy algorithms


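For classification, let $\hat{\pi}_{ic}$ denote the fraction of the training examples in node $i$ that belong to class $c$. Given this, we can then compute the Gini index

$$G_i = \sum_{c=1}^{C} \hat{\pi}_{ic}(1 - \hat{\pi}_{ic}) = 1 - \sum_{c=1}^{C} \hat{\pi}_{ic}^2$$

This is the expected error rate. To see this, note that $\hat{\pi}_{ic}$ is the probability that a random example in the node belongs to class $c$, and $1 - \hat{\pi}_{ic}$ is the probability that it would be misclassified if we labeled it with a class drawn at random from $\hat{\pi}_i$.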
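A quick numerical check that the two forms of the Gini index agree. This is a minimal sketch assuming the labels of one node are given as a plain Python list; the helper names are illustrative.

```python
from collections import Counter


def class_proportions(labels):
    """Empirical class distribution pi_c of the examples in one node."""
    n = len(labels)
    return [count / n for count in Counter(labels).values()]


def gini(labels):
    """Gini index in the form 1 - sum_c pi_c^2."""
    if not labels:
        return 0.0  # an empty node contributes no impurity
    return 1.0 - sum(p * p for p in class_proportions(labels))


def expected_error_rate(labels):
    """Same quantity as sum_c pi_c (1 - pi_c): a random example has class c with
    probability pi_c, and a label sampled from pi is wrong with probability 1 - pi_c."""
    return sum(p * (1.0 - p) for p in class_proportions(labels))


node = ["cat", "cat", "cat", "dog"]
print(gini(node), expected_error_rate(node))  # 0.375 0.375
```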

Regularization


Pros and cons


Coding: Classification


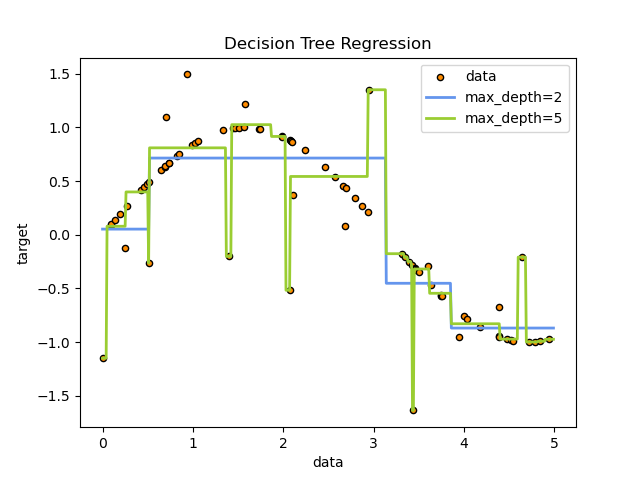
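A from-scratch sketch of fitting a classification tree greedily, using $n \times$ Gini as the node cost and storing a distribution over class labels at each leaf. It assumes numeric features in plain Python lists and uses hypothetical function names; in practice one would use a library implementation such as scikit-learn's `DecisionTreeClassifier`.

```python
from collections import Counter


def gini(labels):
    n = len(labels)
    return 1.0 - sum((k / n) ** 2 for k in Counter(labels).values()) if n else 0.0


def make_leaf(labels):
    """Leaves store a distribution over class labels, not just a single label."""
    n = len(labels)
    return {"leaf": {c: k / n for c, k in Counter(labels).items()}}


def build_tree(rows, labels, max_depth):
    """Greedily grow a classification tree, one node at a time."""
    if max_depth == 0 or len(set(labels)) == 1:
        return make_leaf(labels)
    best_cost, best = len(labels) * gini(labels), None
    for j in range(len(rows[0])):
        values = sorted(set(r[j] for r in rows))
        for lo, hi in zip(values, values[1:]):
            thr = (lo + hi) / 2
            left = [i for i, r in enumerate(rows) if r[j] <= thr]
            right = [i for i, r in enumerate(rows) if r[j] > thr]
            cost = sum(len(idx) * gini([labels[i] for i in idx]) for idx in (left, right))
            if cost < best_cost:
                best_cost, best = cost, (j, thr, left, right)
    if best is None:  # no split reduces the impurity
        return make_leaf(labels)
    j, thr, left, right = best

    def child(idx):
        return build_tree([rows[i] for i in idx], [labels[i] for i in idx], max_depth - 1)

    return {"feature": j, "threshold": thr, "left": child(left), "right": child(right)}


def predict_proba(tree, row):
    """Follow the splits down to a leaf and return its class distribution."""
    while "leaf" not in tree:
        branch = "left" if row[tree["feature"]] <= tree["threshold"] else "right"
        tree = tree[branch]
    return tree["leaf"]


rows = [[1.0], [2.0], [3.0], [4.0], [5.0], [6.0]]
labels = ["a", "a", "b", "b", "a", "a"]
tree = build_tree(rows, labels, max_depth=2)
print(predict_proba(tree, [3.5]))  # {'b': 1.0}
```

Limiting `max_depth` is the simplest way to keep such a tree from overfitting; with `max_depth=1` the right-hand leaf above would instead hold the mixed distribution of its four training labels.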

Coding: Regression


Ensemble learning

- Ensemble learning: reduce variance by averaging multiple (regression) models (or taking a majority vote for classifiers)

- The ensemble will have similar bias to the base models, but lower variance, generally resulting in improved overall performance

- For classifiers: take a majority vote of the outputs. (This is sometimes called a committee method.)

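- suppose each base model is a binary classifier with an accuracy of $\theta$, and suppose class 1 is the correct class

- Let $Y_m \in \{0, 1\}$ be the prediction of the $m$-th model

- Let $S = \sum_{m=1}^{M} Y_m$ be the number of votes for class 1

- We define the final predictor to be the majority vote, i.e., class 1 if $S > M/2$ and class 0 otherwise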
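Under the additional assumption that the base models make independent errors (rarely true in practice), $S \sim \mathrm{Bin}(M, \theta)$, so the majority vote is correct with probability $\Pr(S > M/2)$. The sketch below (hypothetical function name) sums the binomial pmf in log space so that large $M$ does not overflow.

```python
import math


def majority_vote_accuracy(theta: float, num_models: int) -> float:
    """Pr(S > M/2) for S ~ Binomial(M, theta): the probability that a majority vote
    of M independent classifiers, each correct with probability theta, is correct."""
    if not 0.0 < theta < 1.0:
        raise ValueError("theta must lie strictly between 0 and 1")
    m = num_models
    log_theta, log_one_minus = math.log(theta), math.log1p(-theta)
    total = 0.0
    # Sum the binomial pmf over every vote count k that is a strict majority.
    for k in range(m // 2 + 1, m + 1):
        log_pmf = (math.lgamma(m + 1) - math.lgamma(k + 1) - math.lgamma(m - k + 1)
                   + k * log_theta + (m - k) * log_one_minus)
        total += math.exp(log_pmf)
    return total


for m in (1, 11, 101, 1001):
    print(m, round(majority_vote_accuracy(0.51, m), 3))
```

Even with $\theta = 0.51$ this probability grows toward 1 as $M$ increases, which is why averaging many barely-better-than-chance but independent classifiers helps; correlated errors erode the gain.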

Stacking

- Stacking (stacked generalization): combine the base models by using a learned weighted combination $f(x) = \sum_{m=1}^{M} w_m f_m(x)$

- the combination weights used by stacking need to be trained on a separate dataset, otherwise they would put all their mass on the best performing base model.
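A minimal stacking sketch for two already-trained base models. As a simplifying assumption (general stacking can use any combiner), the weights are restricted to a convex combination $w f_A + (1 - w) f_B$, which has a closed-form least-squares solution; the function name and the validation numbers are hypothetical.

```python
def fit_stacking_weight(preds_a, preds_b, targets):
    """Return w in [0, 1] minimizing sum_i (y_i - (w * a_i + (1 - w) * b_i))^2.

    The objective is a 1-D convex quadratic in w, so the unconstrained minimizer
    clipped to [0, 1] is the constrained optimum."""
    diffs = [a - b for a, b in zip(preds_a, preds_b)]
    denom = sum(d * d for d in diffs)
    if denom == 0.0:
        return 0.5  # the two models agree on every example; any weight is optimal
    numer = sum((y - b) * d for y, b, d in zip(targets, preds_b, diffs))
    return min(1.0, max(0.0, numer / denom))


# Held-out (validation) predictions from two base models trained on other data.
val_targets = [1.0, 2.0, 3.0, 4.0]
val_preds_a = [1.5, 2.5, 2.5, 4.5]
val_preds_b = [0.0, 2.0, 3.5, 3.0]
w = fit_stacking_weight(val_preds_a, val_preds_b, val_targets)
print(f"weight on model A: {w:.3f}")
```

If the weight were fit on training-set predictions instead, a model that interpolates its training data would get `w = 1`, which is exactly the failure mode described above.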

Ensembling is not Bayes model averaging

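- An ensemble considers a larger hypothesis class of the form $p(y \mid x, w, \theta) = \sum_{m=1}^{M} w_m\, p(y \mid x, \theta_m)$

- the BMA uses $p(y \mid x, \mathcal{D}) = \sum_{m=1}^{M} p(m \mid \mathcal{D})\, p(y \mid x, m, \mathcal{D})$

- The key difference:

- in the case of BMA, the weights $p(m \mid \mathcal{D})$ sum to one, and

- in the limit of infinite data, only a single model will be chosen (namely the MAP model). By contrast,

- the ensemble weights $w_m$ are arbitrary and do not collapse onto a single model in this way.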

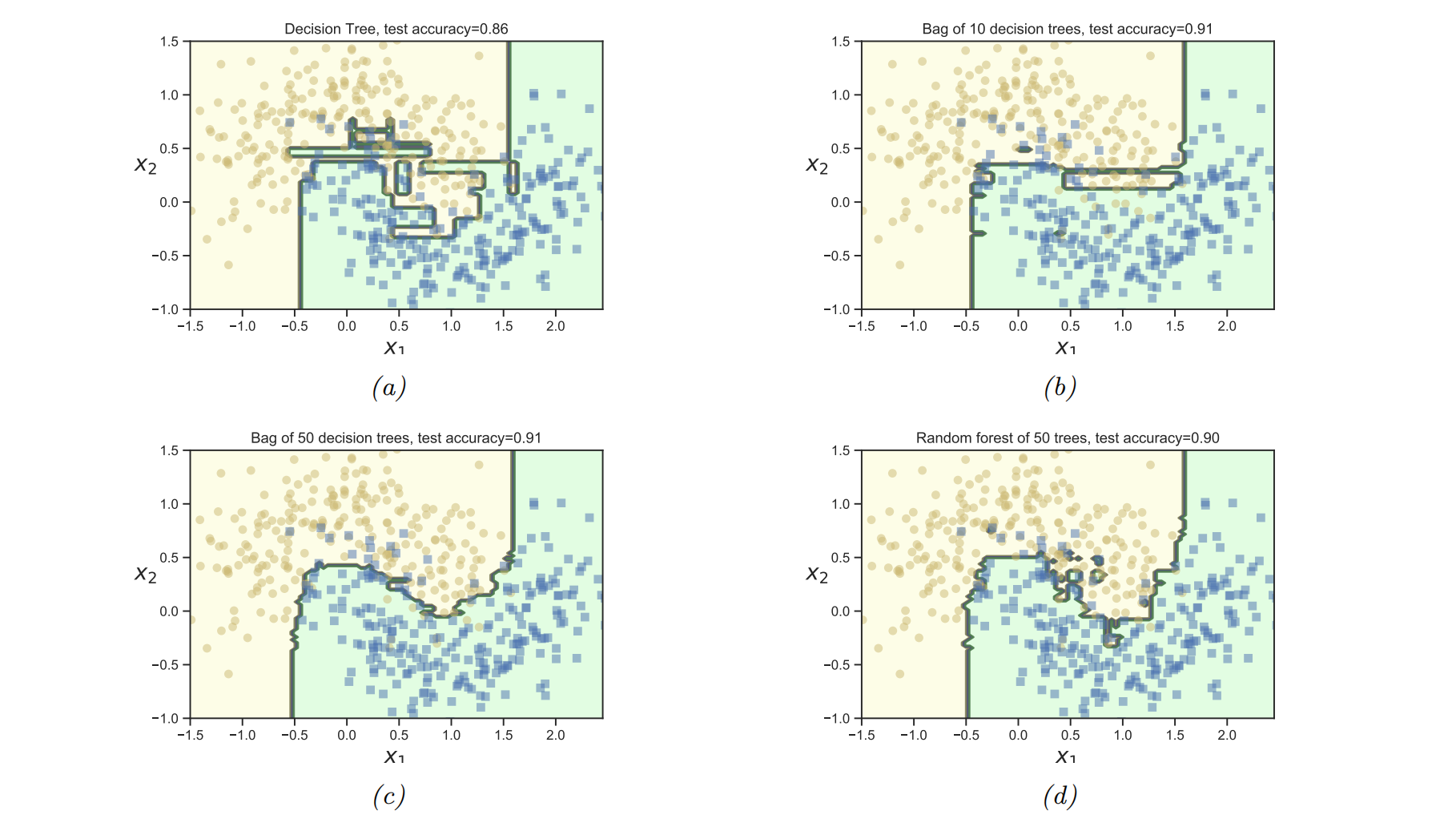
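A toy illustration of the BMA weights collapsing. As a loudly simplifying assumption, each model's log marginal likelihood is taken to be $N$ times a fixed per-example average log-likelihood (this ignores the Occam factor), and the two averages are hypothetical numbers; `bma_weights` is an illustrative name.

```python
import math


def bma_weights(log_evidences, log_priors=None):
    """Posterior model probabilities p(m | D) proportional to p(D | m) p(m), computed stably."""
    if log_priors is None:
        log_priors = [0.0] * len(log_evidences)  # uniform prior over models
    scores = [le + lp for le, lp in zip(log_evidences, log_priors)]
    top = max(scores)
    unnorm = [math.exp(s - top) for s in scores]  # subtract the max to avoid underflow
    z = sum(unnorm)
    return [u / z for u in unnorm]


# Hypothetical: model 0 fits slightly better per example than model 1.
avg_loglik = [-1.00, -1.01]
for n in (10, 100, 1000, 10000):
    weights = bma_weights([n * a for a in avg_loglik])
    print(n, [round(w, 4) for w in weights])
```

As $N$ grows, almost all posterior mass moves to the better model, whereas ensemble weights $w_m$ would be fit directly and need not concentrate.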

Bagging

- bagging ("bootstrap aggregating")

- This is a simple form of ensemble learning in which we fit $M$ different base models to different randomly sampled versions of the data

- this encourages the different models to make diverse predictions

- The datasets are sampled with replacement (a technique known as bootstrap sampling)

- a given example may appear multiple times, until we have a total of $N$ examples per model, where $N$ is the number of original training examples

- The disadvantage of bootstrap: each base model only sees, on average, about 63% of the unique training examples, since the probability that a given example is never drawn in $N$ draws is $(1 - 1/N)^N \approx e^{-1} \approx 0.37$

- The remaining ~37% of the training examples, which a given base model never sees, are called out-of-bag (oob) instances

- We can use the predicted performance of the base model on these oob instances as an estimate of test set performance. This provides a useful alternative to cross validation.

- The main advantage of bootstrap is that it prevents the ensemble from relying too much on any individual training example, which enhances robustness and generalization.

- Bagging does not always improve performance. In particular, it relies on the base models being unstable estimators (such as decision trees), so that omitting some of the data significantly changes the resulting model fit.
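A sketch of bagging with an out-of-bag error estimate. To keep it short, the base model is a hypothetical 1-nearest-neighbour regressor on 1-D inputs (chosen because it is unstable, like a deep tree); the function names and the toy data are assumptions.

```python
import random
from statistics import mean


def bootstrap_indices(n, rng):
    """Sample n indices with replacement; the never-drawn indices are out-of-bag (oob)."""
    in_bag = [rng.randrange(n) for _ in range(n)]
    drawn = set(in_bag)
    oob = [i for i in range(n) if i not in drawn]
    return in_bag, oob


def fit_1nn(train_x, train_y):
    """Hypothetical unstable base model: 1-nearest-neighbour regression on 1-D inputs."""
    pairs = list(zip(train_x, train_y))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


def bagging_with_oob(xs, ys, fit, num_models, seed=0):
    """Fit num_models base models on bootstrap samples; estimate test MSE from oob predictions."""
    rng = random.Random(seed)
    oob_preds = [[] for _ in xs]
    models = []
    for _ in range(num_models):
        in_bag, oob = bootstrap_indices(len(xs), rng)
        model = fit([xs[i] for i in in_bag], [ys[i] for i in in_bag])
        models.append(model)
        for i in oob:  # score each example only with models that never saw it
            oob_preds[i].append(model(xs[i]))
    oob_mse = mean((ys[i] - mean(p)) ** 2 for i, p in enumerate(oob_preds) if p)

    def ensemble(x):
        return mean(m(x) for m in models)  # bagged prediction: average the base models

    return ensemble, oob_mse


xs = [i / 50 for i in range(100)]
ys = [x * x for x in xs]
ensemble, oob_mse = bagging_with_oob(xs, ys, fit_1nn, num_models=25)
print(f"oob MSE estimate: {oob_mse:.4f}, prediction at 1.0: {ensemble(1.0):.3f}")
```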

Random forests

Random forests: learning trees based on a randomly chosen subset of input variables (at each node of the tree), as well as a randomly chosen subset of data cases.

Random forests can work much better than bagged decision trees, especially when many input features are irrelevant.
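The extra randomization in a random forest happens at each node: only a random subset of the features is searched for the best split. A minimal sketch follows; the default of about $\sqrt{D}$ features is a common heuristic for classification that is assumed here, not taken from the text, and `candidate_features` is a hypothetical name. Each tree would additionally be trained on a bootstrap sample, as in bagging.

```python
import math
import random


def candidate_features(num_features, rng, max_features=None):
    """Pick the feature indices that one random-forest node may split on."""
    if max_features is None:
        max_features = max(1, int(math.sqrt(num_features)))  # assumed sqrt(D) heuristic
    return sorted(rng.sample(range(num_features), max_features))


rng = random.Random(42)
for node in range(3):
    # A fresh subset is drawn at every node, which decorrelates the trees.
    print(f"node {node}: split on features {candidate_features(16, rng)}")
```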

Boosting

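- Ensembles of trees, whether fit by bagging or the random forest algorithm, correspond to a model of the form $f(x; \theta) = \sum_{m=1}^{M} \beta_m F_m(x; \theta_m)$, where $F_m$ is the $m$-th tree and $\beta_m$ is its weight (often $\beta_m = 1/M$)

- additive model: generalize this by allowing the $F_m$ to be general function approximators (such as neural networks), not just trees

- We can think of this as a linear model with adaptive basis functions. The goal, as usual, is to minimize the empirical loss (with an optional regularizer): $\mathcal{L}(f) = \sum_{i=1}^{N} \ell(y_i, f(x_i))$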

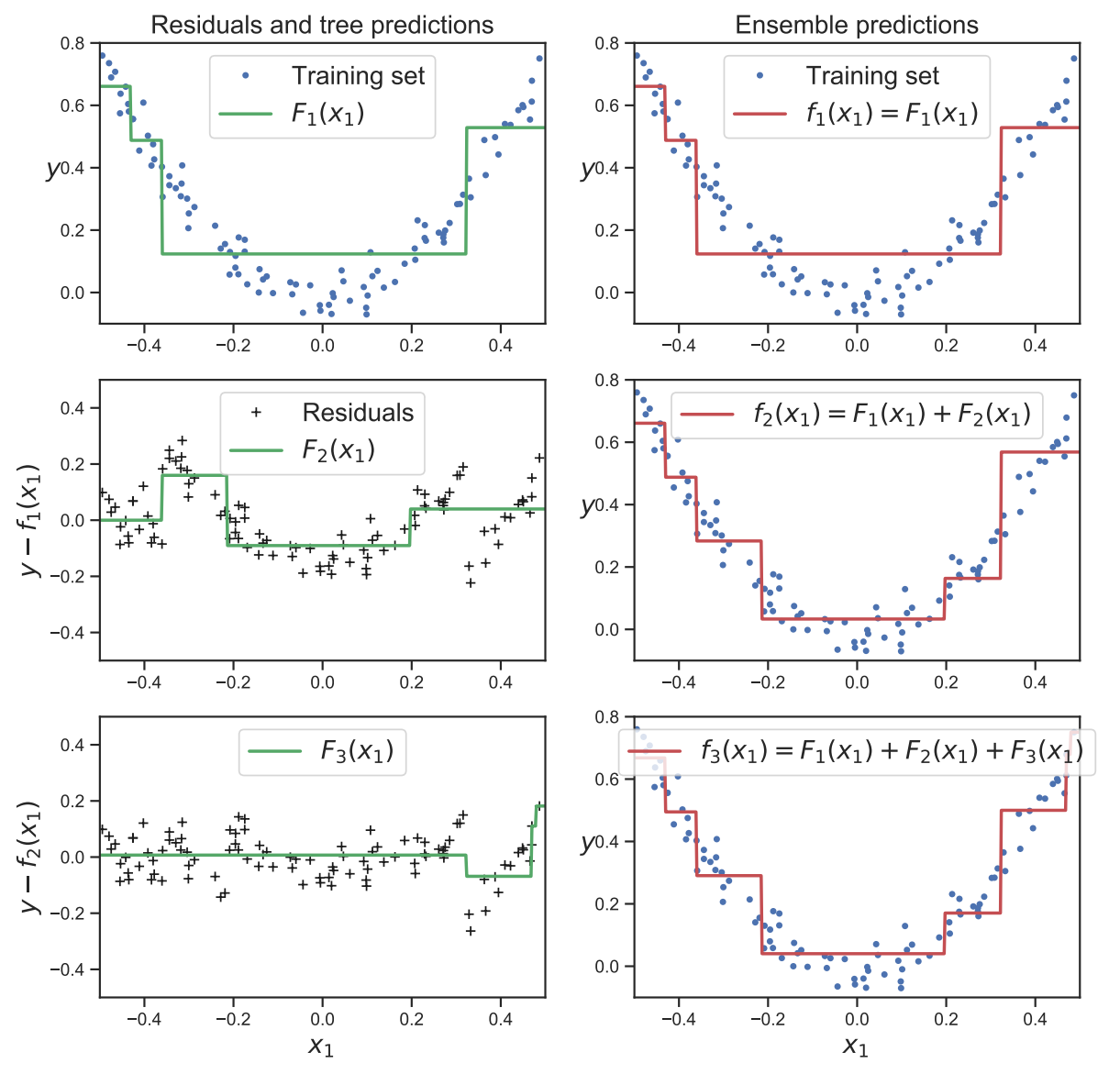

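Boosting is an algorithm for sequentially fitting additive models where each $F_m$ is a binary classifier that returns $F_m \in \{-1, +1\}$:

- first fit $F_1$ on the original data

- weight the data samples by the errors made by $F_1$, so that misclassified examples get more weight

- fit $F_2$ to this weighted dataset

- keep repeating this process until we have fit the desired number $M$ of components

- it can be shown that, as long as each $F_m$ has an accuracy that is better than chance (even on the weighted dataset), the final ensemble will have higher accuracy than any individual component:

- if $F_m$ is a weak learner (its accuracy is only slightly better than 50%),

- we can boost its performance using the above procedure so that the final $f$ becomes a strong learner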

- boosting, bagging and RF:

- boosting mainly reduces bias, by fitting trees that depend on each other (each new tree is fit to correct the errors of the current ensemble)

- bagging and RF reduce variance by fitting independent trees

- In many cases, boosting can work better than bagging and RF

Forward stagewise additive modeling

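forward stagewise additive modeling: sequentially optimize the objective for general (differentiable) loss functions. At step $m$ we solve

$$(\beta_m, \theta_m) = \arg\min_{\beta, \theta} \sum_{i=1}^{N} \ell\big(y_i, f_{m-1}(x_i) + \beta F(x_i; \theta)\big)$$

We then set

$$f_m(x) = f_{m-1}(x) + \beta_m F(x; \theta_m)$$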

Quadratic loss and least squares boosting

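- squared error loss $\ell(y, \hat{y}) = (y - \hat{y})^2$

- the $i$-th term in the objective at step $m$ becomes $\ell\big(y_i, f_{m-1}(x_i) + \beta F(x_i; \theta)\big) = \big(r_{im} - \beta F(x_i; \theta)\big)^2$, where $r_{im} = y_i - f_{m-1}(x_i)$ is the current residual

- We can minimize the above objective by simply setting $\beta = 1$ and fitting $F$ to the residual errors. This is called least squares boosting.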
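Least squares boosting fits in a few lines. This sketch assumes 1-D inputs, uses least-squares regression stumps as the weak learner, and initializes $f_0$ to the mean of $y$ (a common choice the text does not specify); the function names are illustrative.

```python
def fit_stump(xs, targets):
    """Least-squares regression stump on 1-D inputs: one threshold, two leaf means."""
    pts = sorted(zip(xs, targets))
    best_sse, best = float("inf"), None
    for k in range(1, len(pts)):
        if pts[k - 1][0] == pts[k][0]:
            continue  # cannot split between equal inputs
        left = [t for _, t in pts[:k]]
        right = [t for _, t in pts[k:]]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((t - lm) ** 2 for t in left) + sum((t - rm) ** 2 for t in right)
        if sse < best_sse:
            best_sse, best = sse, ((pts[k - 1][0] + pts[k][0]) / 2, lm, rm)
    if best is None:  # all inputs identical: fall back to a constant
        mean_t = sum(targets) / len(targets)
        return lambda x: mean_t
    thr, lm, rm = best
    return lambda x: lm if x <= thr else rm


def least_squares_boost(xs, ys, num_rounds):
    """f_0 = mean(y); each round fits a stump F_m to the residuals and adds it with beta = 1."""
    f0 = sum(ys) / len(ys)
    stumps, preds = [], [f0] * len(xs)
    for _ in range(num_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]  # r_im = y_i - f_{m-1}(x_i)
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + stump(x) for p, x in zip(preds, xs)]
    return lambda x: f0 + sum(s(x) for s in stumps)


xs = [i / 10 for i in range(50)]
ys = [x * x for x in xs]
model = least_squares_boost(xs, ys, num_rounds=20)
mse = sum((y - model(x)) ** 2 for x, y in zip(xs, ys)) / len(xs)
print(f"training MSE after 20 rounds: {mse:.4f}")
```

Because each stump is a least-squares fit to the residuals, adding it can never increase the training error, so the training MSE is non-increasing in the number of rounds.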

Exponential loss and AdaBoost

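- binary classification, i.e., predicting $\tilde{y} \in \{-1, +1\}$

- assuming the weak learner computes

$$p(y = 1 \mid x) = \frac{e^{F(x)}}{e^{-F(x)} + e^{F(x)}} = \frac{1}{1 + e^{-2F(x)}}$$

so $F(x)$ is half the log odds: $F(x) = \frac{1}{2}\log\frac{p(y = 1 \mid x)}{p(y = 0 \mid x)}$

- the negative log likelihood is given by

$$\ell(\tilde{y}, F(x)) = \log\left(1 + e^{-2\tilde{y}F(x)}\right)$$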

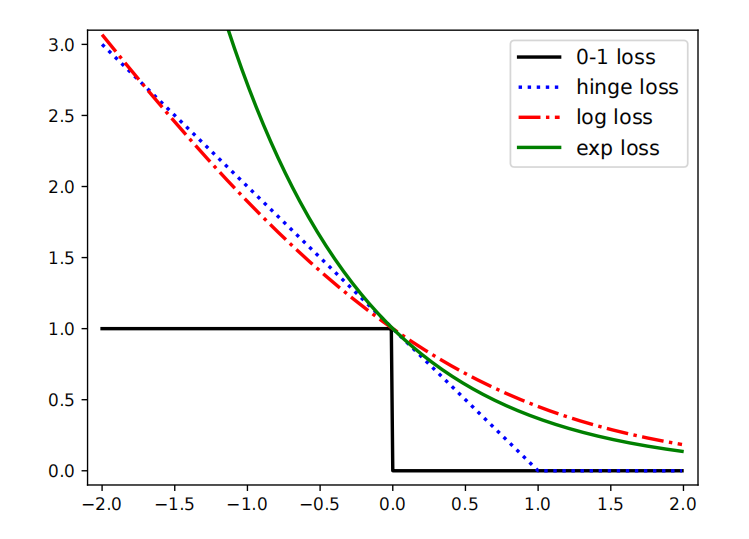

- we can also use other loss functions. In this section, we consider the exponential loss $\ell(\tilde{y}, F(x)) = \exp(-\tilde{y} F(x))$

- this is a smooth upper bound on the 0-1 loss.

- In the population setting (with infinite sample size), the optimal solution to the exponential loss is the same as for log loss: $F^*(x) = \frac{1}{2}\log\frac{p(\tilde{y} = 1 \mid x)}{p(\tilde{y} = -1 \mid x)}$

- the exponential loss is easier to optimize in the boosting setting.

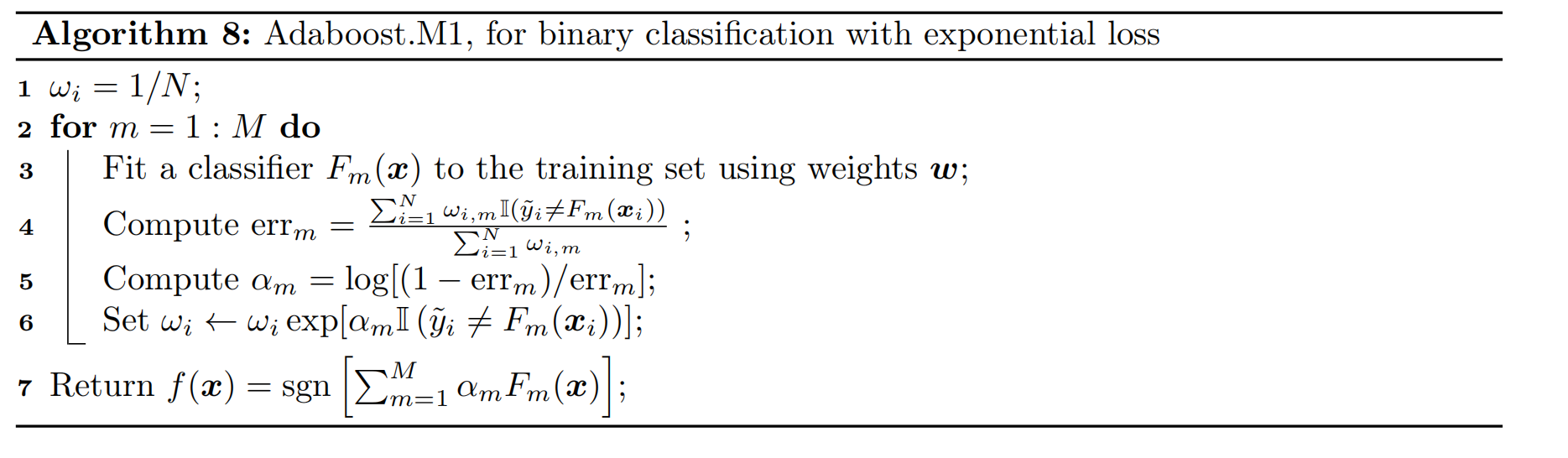
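The claims about the exponential loss can be checked numerically. The sketch writes each loss as a function of the margin $\tilde{y}F(x)$, checks that the exponential loss upper-bounds the 0-1 loss on a grid, and confirms that $F^* = \frac{1}{2}\log\frac{p}{1-p}$ beats nearby values of $F$ in expected (population) loss for both the exponential and the log loss, at one assumed value $p = 0.8$. Function names are illustrative.

```python
import math


def zero_one_loss(margin):
    """0-1 loss as a function of the margin y_tilde * F(x); a margin of 0 counts as an error."""
    return 1.0 if margin <= 0 else 0.0


def exp_loss(margin):
    return math.exp(-margin)


def log_loss(margin):
    """log(1 + exp(-2 * margin)), rearranged to avoid overflow for large negative margins."""
    z = -2.0 * margin
    return z + math.log1p(math.exp(-z)) if z > 0 else math.log1p(math.exp(z))


def population_risk(loss, f, p):
    """Expected loss at a point x with p = p(y_tilde = +1 | x) and prediction F(x) = f."""
    return p * loss(f) + (1 - p) * loss(-f)


p = 0.8
f_star = 0.5 * math.log(p / (1 - p))  # half the log odds
for loss in (exp_loss, log_loss):
    # The middle value (at f_star) should be the smallest for both losses.
    print(loss.__name__, [round(population_risk(loss, f_star + d, p), 5) for d in (-0.1, 0.0, 0.1)])
```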

discrete AdaBoost

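- At step $m$ we have to minimize

$$L_m(\phi) = \sum_{i=1}^{N} \exp\big[-\tilde{y}_i \big(f_{m-1}(x_i) + \beta \phi(x_i)\big)\big] = \sum_{i=1}^{N} \omega_{i,m} \exp\big(-\beta \tilde{y}_i \phi(x_i)\big)$$

where $\omega_{i,m} = \exp\big(-\tilde{y}_i f_{m-1}(x_i)\big)$ is a weight applied to example $i$, with labels $\tilde{y}_i \in \{-1, +1\}$ and $\phi(x_i) \in \{-1, +1\}$

- the optimal function to add is

$$\phi_m = \arg\min_{\phi} \sum_{i=1}^{N} \omega_{i,m}\, \mathbb{I}\big(\tilde{y}_i \neq \phi(x_i)\big)$$

This can be found by applying the weak learner to a weighted version of the dataset, with weights $\omega_{i,m}$.

- Substituting $\phi_m$ into $L_m$ and solving for $\beta$, we find

$$\beta_m = \frac{1}{2}\log\frac{1 - \mathrm{err}_m}{\mathrm{err}_m}$$

where

$$\mathrm{err}_m = \frac{\sum_{i=1}^{N} \omega_{i,m}\, \mathbb{I}\big(\tilde{y}_i \neq \phi_m(x_i)\big)}{\sum_{i=1}^{N} \omega_{i,m}}$$

- Therefore the overall update becomes $f_m(x) = f_{m-1}(x) + \beta_m \phi_m(x)$

- the weights for the next iteration are updated as follows:

$$\omega_{i,m+1} = \omega_{i,m} e^{-\beta_m \tilde{y}_i \phi_m(x_i)} = \omega_{i,m} e^{\beta_m \left(2\mathbb{I}(\tilde{y}_i \neq \phi_m(x_i)) - 1\right)} = \omega_{i,m} e^{2\beta_m \mathbb{I}(\tilde{y}_i \neq \phi_m(x_i))} e^{-\beta_m}$$

- If $\tilde{y}_i = \phi_m(x_i)$ then $\tilde{y}_i \phi_m(x_i) = 1$, and if $\tilde{y}_i \neq \phi_m(x_i)$ then $\tilde{y}_i \phi_m(x_i) = -1$, so $-\tilde{y}_i \phi_m(x_i) = 2\mathbb{I}(\tilde{y}_i \neq \phi_m(x_i)) - 1$

- Since the factor $e^{-\beta_m}$ is the same for every example, it cancels when the weights are normalized, so we can drop it.

Thus we see that we exponentially increase the weights of misclassified examples. The resulting algorithm is shown in Algorithm 8 and is known as AdaBoost.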

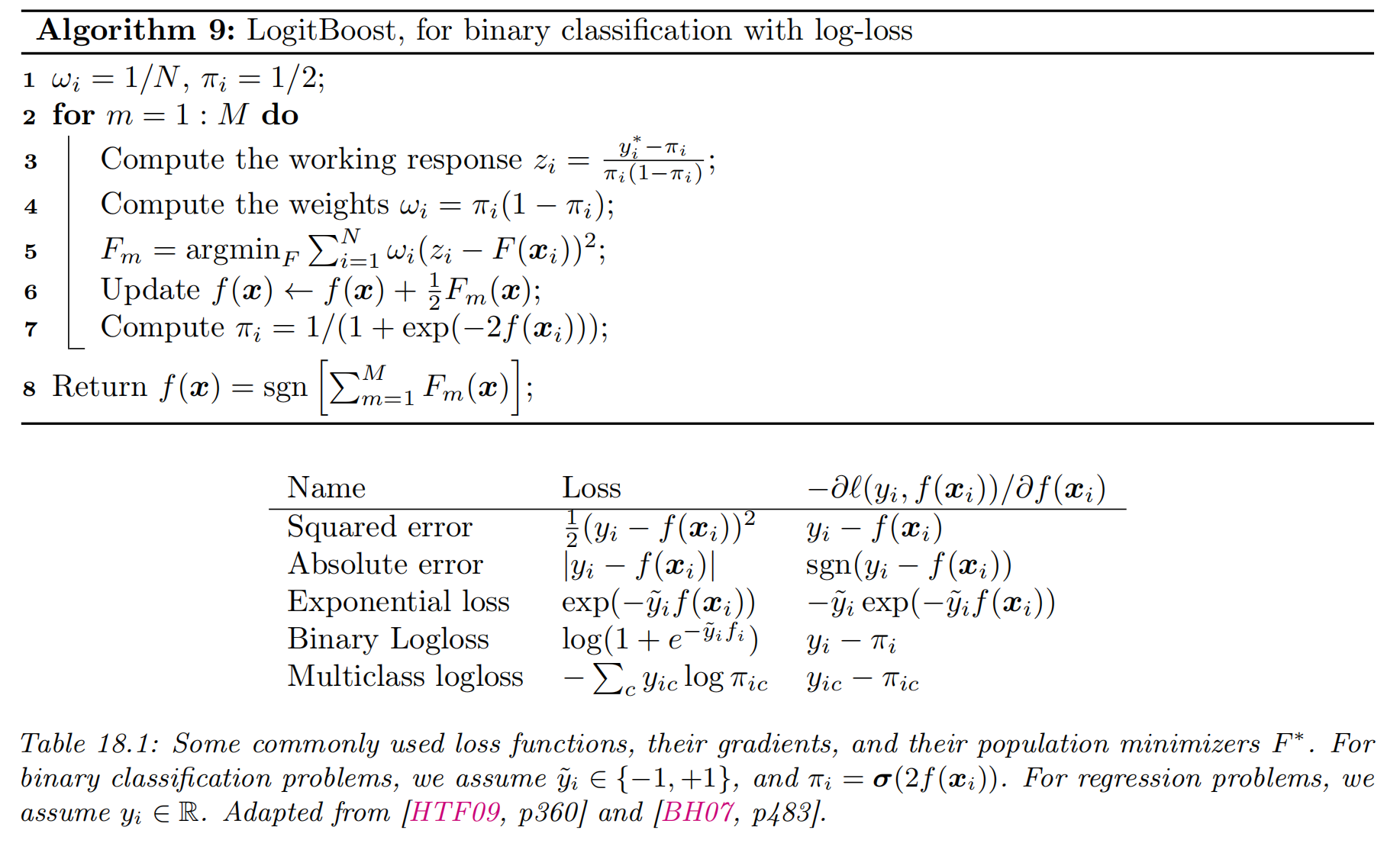
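A compact sketch of discrete AdaBoost using the updates for $\beta_m$ and the example weights described in this section. It assumes 1-D inputs and labels in $\{-1, +1\}$, uses weighted decision stumps as the weak learner, and has hypothetical function names; it is an illustration, not a copy of Algorithm 8. The weighted error is clipped away from 0 so that $\beta_m$ stays finite when a stump is perfect.

```python
import math


def fit_weighted_stump(xs, ys, weights):
    """Weak learner: 1-D stump minimizing the weighted 0-1 error.
    Predicts `polarity` if x > threshold, else -polarity."""
    values = sorted(set(xs))
    thresholds = [values[0] - 1.0] + [(a + b) / 2 for a, b in zip(values, values[1:])]
    best = None
    for thr in thresholds:
        for polarity in (1, -1):
            err = sum(w for x, y, w in zip(xs, ys, weights)
                      if (polarity if x > thr else -polarity) != y)
            if best is None or err < best[2]:
                best = (thr, polarity, err)
    thr, polarity, err = best
    return thr, polarity, err / sum(weights)  # normalized weighted error err_m


def stump_predict(thr, polarity, x):
    return polarity if x > thr else -polarity


def adaboost(xs, ys, num_rounds):
    """Discrete AdaBoost; returns a list of (beta_m, threshold, polarity)."""
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(num_rounds):
        thr, pol, err = fit_weighted_stump(xs, ys, weights)
        err = min(max(err, 1e-10), 1 - 1e-10)  # avoid log(0) for a perfect stump
        beta = 0.5 * math.log((1 - err) / err)
        ensemble.append((beta, thr, pol))
        # w_i <- w_i * exp(-beta * y_i * phi(x_i)), then renormalize
        weights = [w * math.exp(-beta * y * stump_predict(thr, pol, x))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def adaboost_predict(ensemble, x):
    score = sum(beta * stump_predict(thr, pol, x) for beta, thr, pol in ensemble)
    return 1 if score >= 0 else -1


# An "interval" pattern that no single stump can represent.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [-1, -1, 1, 1, -1, -1]
ens = adaboost(xs, ys, num_rounds=3)
print([adaboost_predict(ens, x) for x in xs])
```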

LogitBoost

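- exponential loss puts a lot of weight on misclassified examples, since the loss grows exponentially as the margin becomes more negative

- This makes the method very sensitive to outliers (mislabeled examples).

- In addition, the exponential loss is not the negative log-likelihood of any valid probability distribution over $\tilde{y} \in \{-1, +1\}$; consequently, we cannot recover probability estimates from $f(x)$.

- A natural alternative is to use log loss, which grows only linearly (rather than exponentially) as the margin $\tilde{y} f(x)$ becomes more negative.

- Furthermore, it means that we will be able to extract probabilities from the final learned function, using

$$p(y = 1 \mid x) = \frac{e^{f(x)}}{e^{-f(x)} + e^{f(x)}} = \frac{1}{1 + e^{-2f(x)}}$$

- The goal is to minimize the expected log-loss, given by

$$L_m(\phi) = \sum_{i=1}^{N} \log\Big[1 + \exp\big(-2\tilde{y}_i \big(f_{m-1}(x_i) + \phi(x_i)\big)\big)\Big]$$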

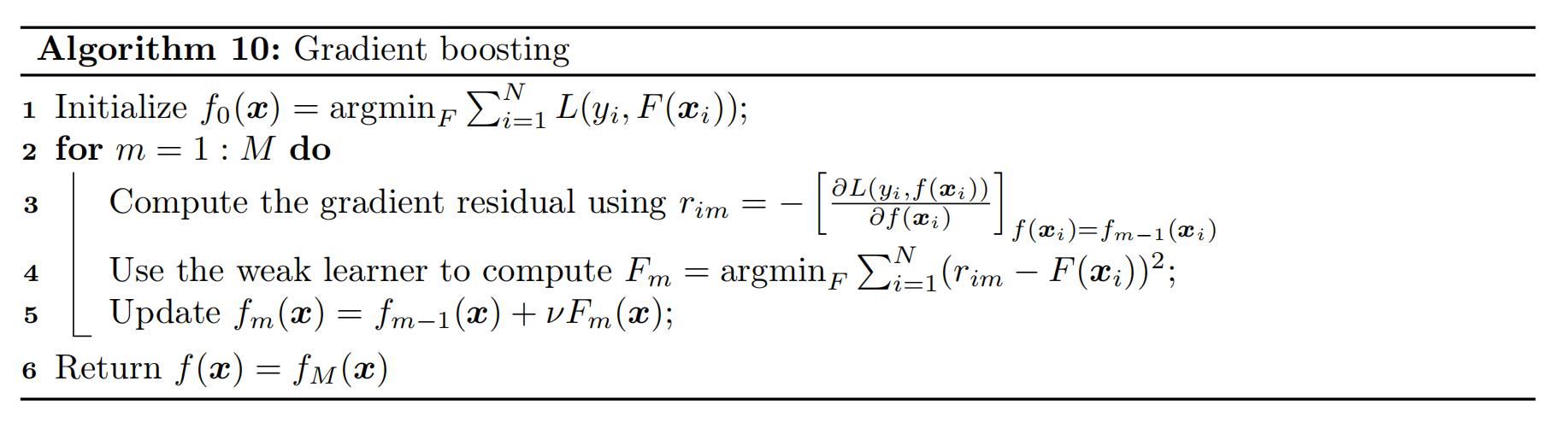
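A sketch of LogitBoost for labels $y \in \{0, 1\}$. The text above only gives the objective; the Newton-style update used here (working responses $z_i = (y_i - p_i)/(p_i(1 - p_i))$ fit by a weighted least-squares stump with weights $p_i(1 - p_i)$, then $f \leftarrow f + \frac{1}{2}F_m$) is how LogitBoost is commonly described and should be treated as an assumption, as should the clipping of $z_i$ to $[-4, 4]$, the weight floor, and the 1-D stump weak learner. Function names are illustrative.

```python
import math


def prob_one(fx):
    """p(y = 1 | x) = 1 / (1 + exp(-2 f(x))), computed without overflow."""
    t = 2.0 * fx
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def fit_weighted_ls_stump(xs, zs, ws):
    """Weighted least-squares regression stump on 1-D inputs (the weak learner)."""
    order = sorted(range(len(xs)), key=lambda i: xs[i])

    def leaf(idx):
        # Weighted mean of the working responses, and the weighted squared error around it.
        val = sum(ws[i] * zs[i] for i in idx) / sum(ws[i] for i in idx)
        return val, sum(ws[i] * (zs[i] - val) ** 2 for i in idx)

    const_val, best_cost = leaf(order)
    best = (float("inf"), const_val, const_val)  # fallback: a single constant leaf
    for k in range(1, len(order)):
        a, b = xs[order[k - 1]], xs[order[k]]
        if a == b:
            continue
        (lv, lc), (rv, rc) = leaf(order[:k]), leaf(order[k:])
        if lc + rc < best_cost:
            best_cost, best = lc + rc, ((a + b) / 2, lv, rv)
    thr, lv, rv = best
    return lambda x: lv if x <= thr else rv


def logitboost(xs, ys01, num_rounds, z_max=4.0):
    """Returns f as a callable; p(y = 1 | x) = prob_one(f(x))."""
    stumps = []

    def f(x):
        return sum(0.5 * s(x) for s in stumps)

    for _ in range(num_rounds):
        probs = [prob_one(f(x)) for x in xs]
        weights = [max(p * (1.0 - p), 1e-12) for p in probs]  # floor avoids division by 0
        working = [max(-z_max, min(z_max, (y - p) / w)) for y, p, w in zip(ys01, probs, weights)]
        stumps.append(fit_weighted_ls_stump(xs, working, weights))
    return f


xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys01 = [0, 0, 0, 1, 1, 1]
f = logitboost(xs, ys01, num_rounds=5)
print([round(prob_one(f(x)), 3) for x in xs])
```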

Gradient boosting

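- Rather than deriving a new boosting algorithm for every loss function, we can derive a generic version, known as gradient boosting. To explain this, imagine solving $\hat{f} = \arg\min_f \mathcal{L}(f)$ by performing gradient descent in the space of functions, treating the values $f(x_1), \dots, f(x_N)$ as the parameters

- make the update

$$f_m = f_{m-1} - \beta_m g_m$$

where $g_m$ is the gradient of $\mathcal{L}(f)$ evaluated at $f = f_{m-1}$:

$$g_{im} = \left[\frac{\partial \ell(y_i, f(x_i))}{\partial f(x_i)}\right]_{f = f_{m-1}}$$

- this update only defines $f$ at the $N$ training points, so to generalize to new inputs we fit a weak learner to approximate the negative gradient signal. That is, we use this update

$$F_m = \arg\min_{F} \sum_{i=1}^{N} \big(-g_{im} - F(x_i)\big)^2$$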
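A generic gradient boosting sketch: the loss enters only through its negative gradient, and the weak learner (a least-squares stump on 1-D inputs) is fit to those pseudo-residuals. The example plugs in the log loss $\log(1 + e^{-2\tilde{y}f})$ used above. As assumptions, a fixed learning rate $\nu$ replaces the line search for $\beta_m$, $f_0 = 0$, and all names are illustrative.

```python
import math


def fit_stump(xs, targets):
    """Least-squares regression stump on 1-D inputs (the weak learner)."""
    pts = sorted(zip(xs, targets))
    best_sse, best = float("inf"), None
    for k in range(1, len(pts)):
        if pts[k - 1][0] == pts[k][0]:
            continue
        left = [t for _, t in pts[:k]]
        right = [t for _, t in pts[k:]]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((t - lm) ** 2 for t in left) + sum((t - rm) ** 2 for t in right)
        if sse < best_sse:
            best_sse, best = sse, ((pts[k - 1][0] + pts[k][0]) / 2, lm, rm)
    if best is None:
        mean_t = sum(targets) / len(targets)
        return lambda x: mean_t
    thr, lm, rm = best
    return lambda x: lm if x <= thr else rm


def log_loss(y, f):
    """log(1 + exp(-2 y f)) for y in {-1, +1}, computed stably."""
    z = -2.0 * y * f
    return z + math.log1p(math.exp(-z)) if z > 0 else math.log1p(math.exp(z))


def log_loss_neg_grad(y, f):
    """-d/df log(1 + exp(-2 y f)) = 2 y / (1 + exp(2 y f)), computed stably."""
    t = 2.0 * y * f
    if t > 0:
        e = math.exp(-t)
        return 2.0 * y * e / (1.0 + e)
    return 2.0 * y / (1.0 + math.exp(t))


def gradient_boost(xs, ys, neg_grad, num_rounds, learning_rate=0.1):
    learners, preds = [], [0.0] * len(xs)
    for _ in range(num_rounds):
        # Pseudo-residuals -g_im, evaluated at the current fit f_{m-1}.
        pseudo = [neg_grad(y, f) for y, f in zip(ys, preds)]
        learner = fit_stump(xs, pseudo)  # F_m: least-squares fit to the negative gradient
        learners.append(learner)
        preds = [f + learning_rate * learner(x) for f, x in zip(preds, xs)]
    return lambda x: learning_rate * sum(h(x) for h in learners)


xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [-1, -1, 1, 1, -1, -1]
model = gradient_boost(xs, ys, log_loss_neg_grad, num_rounds=50)
print(sum(log_loss(y, model(x)) for x, y in zip(xs, ys)) / len(xs))
```

Swapping in a different loss only requires a different `neg_grad` function, which is the point of the generic formulation.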

Gradient tree boosting

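- In practice, gradient boosting nearly always assumes that the weak learner is a regression tree, which is a model of the form

$$F_m(x) = \sum_{j=1}^{J_m} w_{jm}\, \mathbb{I}(x \in R_{jm})$$

where $w_{jm}$ is the predicted output for region $R_{jm}$

- To use this in gradient boosting, we first find good regions $R_{jm}$ for tree $m$ using standard regression tree learning (minimizing squared error) on the negative gradient; we then (re)solve for the weight of each leaf:

$$\hat{w}_{jm} = \arg\min_{w} \sum_{x_i \in R_{jm}} \ell\big(y_i, f_{m-1}(x_i) + w\big)$$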
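For two common losses the leaf re-solve has a closed form: with squared error the optimal leaf weight is the mean residual, and with absolute error it is a median residual, which is much less affected by outliers. A minimal sketch (hypothetical function name and leaf data):

```python
from statistics import mean, median


def solve_leaf_weight(ys, prev_preds, loss):
    """w_hat = argmin_w sum_i loss(y_i, f_{m-1}(x_i) + w) over the examples in one leaf.

    Both supported losses depend only on the residual r_i = y_i - f_{m-1}(x_i),
    so the problem reduces to a 1-D location estimate of the residuals."""
    residuals = [y - p for y, p in zip(ys, prev_preds)]
    if loss == "squared":   # sum (r_i - w)^2 is minimized by the mean
        return mean(residuals)
    if loss == "absolute":  # sum |r_i - w| is minimized by a median
        return median(residuals)
    raise ValueError(f"unsupported loss: {loss!r}")


leaf_y = [1.0, 2.0, 2.5, 40.0]  # one outlier
leaf_prev = [0.5, 0.5, 0.5, 0.5]
print(solve_leaf_weight(leaf_y, leaf_prev, "squared"),
      solve_leaf_weight(leaf_y, leaf_prev, "absolute"))  # 10.875 1.75
```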

XGBoost

- XGBoost (https://github.com/dmlc/xgboost), which stands for "extreme gradient boosting", is a very efficient and widely used implementation of gradient boosted trees:

- it adds a regularizer on the tree complexity

- it uses a second order approximation of the loss instead of just a linear approximation

- it samples features at internal nodes (as in random forests)

- it uses various computer science methods (such as handling out-of-core computation for large datasets) to ensure scalability.

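- In more detail, XGBoost optimizes the following regularized objective

$$\mathcal{L}(f) = \sum_{i=1}^{N} \ell(y_i, f(x_i)) + \Omega(f)$$

- the regularizer is

$$\Omega(f) = \gamma J + \frac{1}{2}\lambda \sum_{j=1}^{J} w_j^2$$

where $J$ is the number of leaves, $w_j$ is the weight of leaf $j$, and $\gamma \geq 0$ and $\lambda \geq 0$ are regularization coefficients.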
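Under the second-order approximation, let $g_i$ and $h_i$ be the first and second derivatives of the loss at the current prediction, and $G_j$, $H_j$ their sums over the examples in leaf $j$. The regularized objective is then minimized by $w_j^* = -G_j/(H_j + \lambda)$, which yields the split-gain formula in the sketch below. These formulas come from the XGBoost paper rather than from the text above; the function names are illustrative and are not the library's API.

```python
def xgb_leaf_weight(g_sum, h_sum, lam):
    """Optimal leaf weight w* = -G / (H + lambda)."""
    return -g_sum / (h_sum + lam)


def xgb_leaf_score(g_sum, h_sum, lam):
    """G^2 / (H + lambda); at w*, a leaf contributes -0.5 times this (plus gamma) to the objective."""
    return g_sum ** 2 / (h_sum + lam)


def xgb_split_gain(g_left, h_left, g_right, h_right, lam, gamma):
    """Objective reduction from splitting one leaf into two; split only if positive."""
    parent = xgb_leaf_score(g_left + g_right, h_left + h_right, lam)
    children = xgb_leaf_score(g_left, h_left, lam) + xgb_leaf_score(g_right, h_right, lam)
    return 0.5 * (children - parent) - gamma


# Squared loss 0.5 * (y - y_hat)^2 gives g_i = y_hat_i - y_i and h_i = 1.
y_left, y_right = [1.0, 1.0], [3.0, 3.0]
y_hat = 0.0  # current prediction for every example
g_l, h_l = sum(y_hat - y for y in y_left), float(len(y_left))
g_r, h_r = sum(y_hat - y for y in y_right), float(len(y_right))
print(xgb_leaf_weight(g_l, h_l, lam=1.0), xgb_leaf_weight(g_r, h_r, lam=1.0))
print(xgb_split_gain(g_l, h_l, g_r, h_r, lam=1.0, gamma=0.0))
```

With $\lambda = 0$ and squared loss, $w^*$ reduces to the mean residual in the leaf; a positive $\lambda$ shrinks the weights toward zero, and $\gamma$ acts as the minimum gain a split must achieve.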