- Machine Learning vs. Statistical Approaches

- Statistical approaches rely on foundational assumptions and explicit models of structure, such as observed samples that are assumed to be drawn from a specified underlying probability distribution.

- Machine learning seeks to extract knowledge from large amounts of data with no such restrictions -- “find the pattern, apply the pattern.”

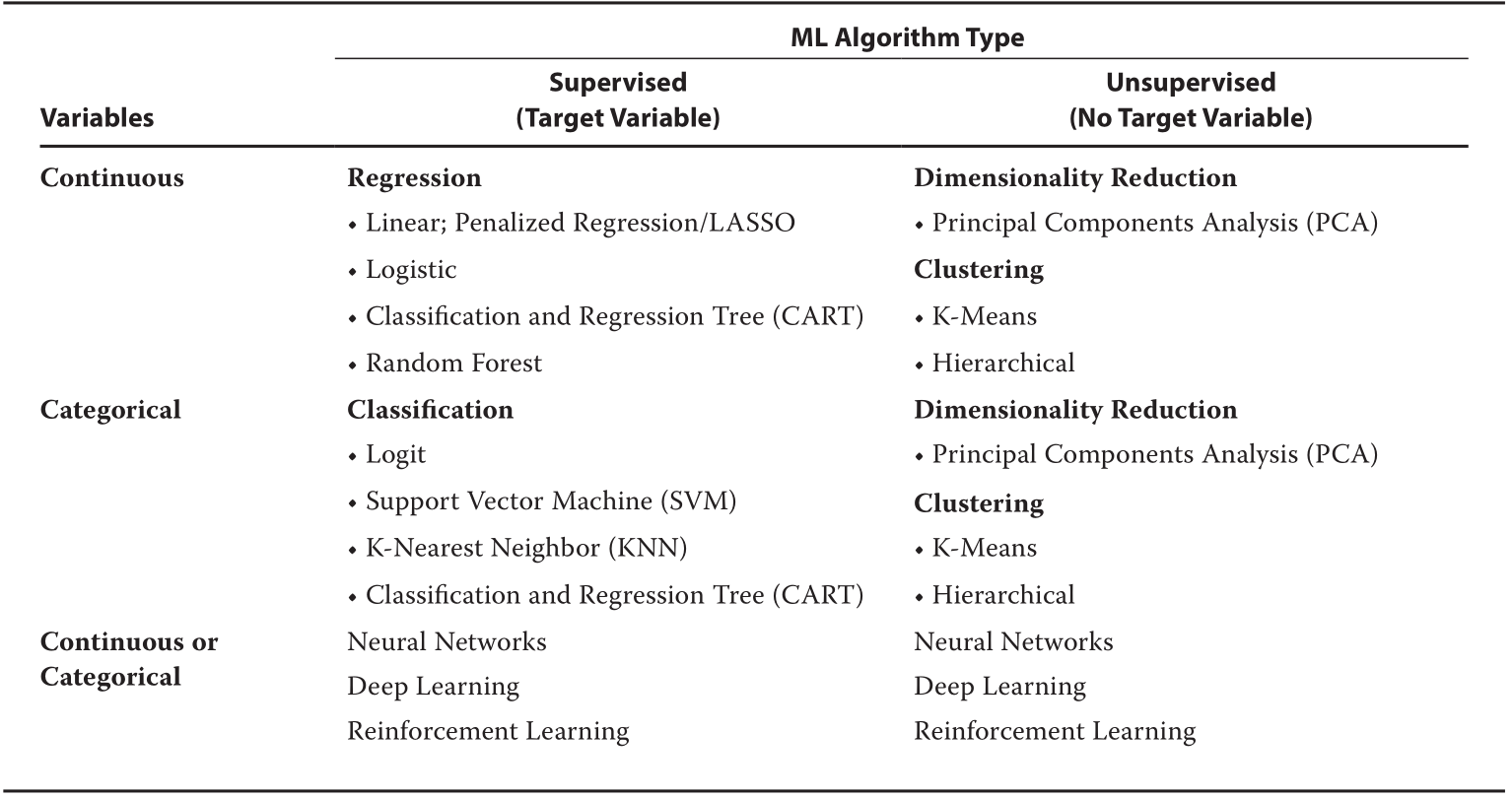

- Supervised Learning vs. Unsupervised Learning

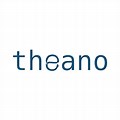

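- Supervised learning involves ML algorithms that infer patterns between a set of inputs (the $X$'s) and the desired output ($Y$).

- Unsupervised learning is machine learning that does not make use of labeled data. In unsupervised learning, inputs ($X$'s) are used for analysis without any target ($Y$) being supplied.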

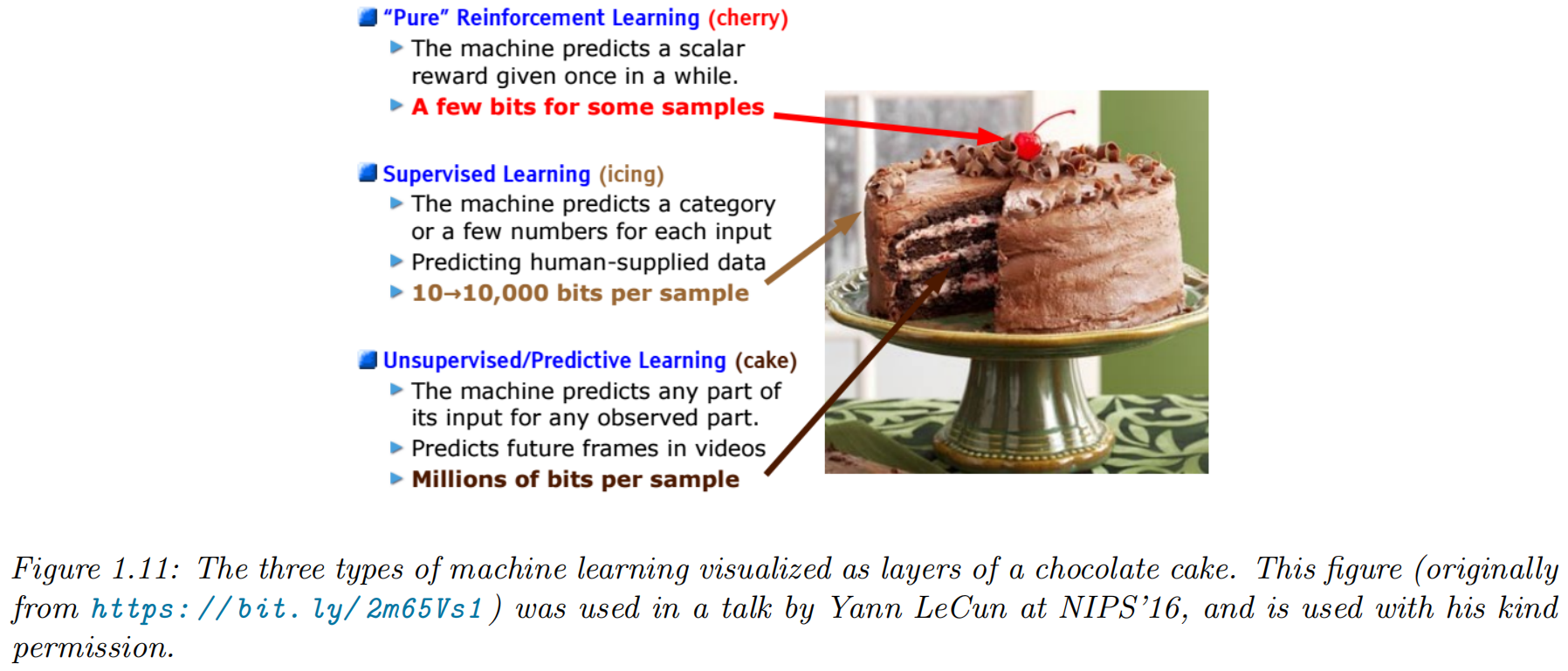

- Deep Learning and Reinforcement Learning

- In deep learning, sophisticated algorithms address highly complex tasks, such as image classification, face recognition, speech recognition, and natural language processing.

- In reinforcement learning, a computer learns from interacting with itself (or data generated by the same algorithm).

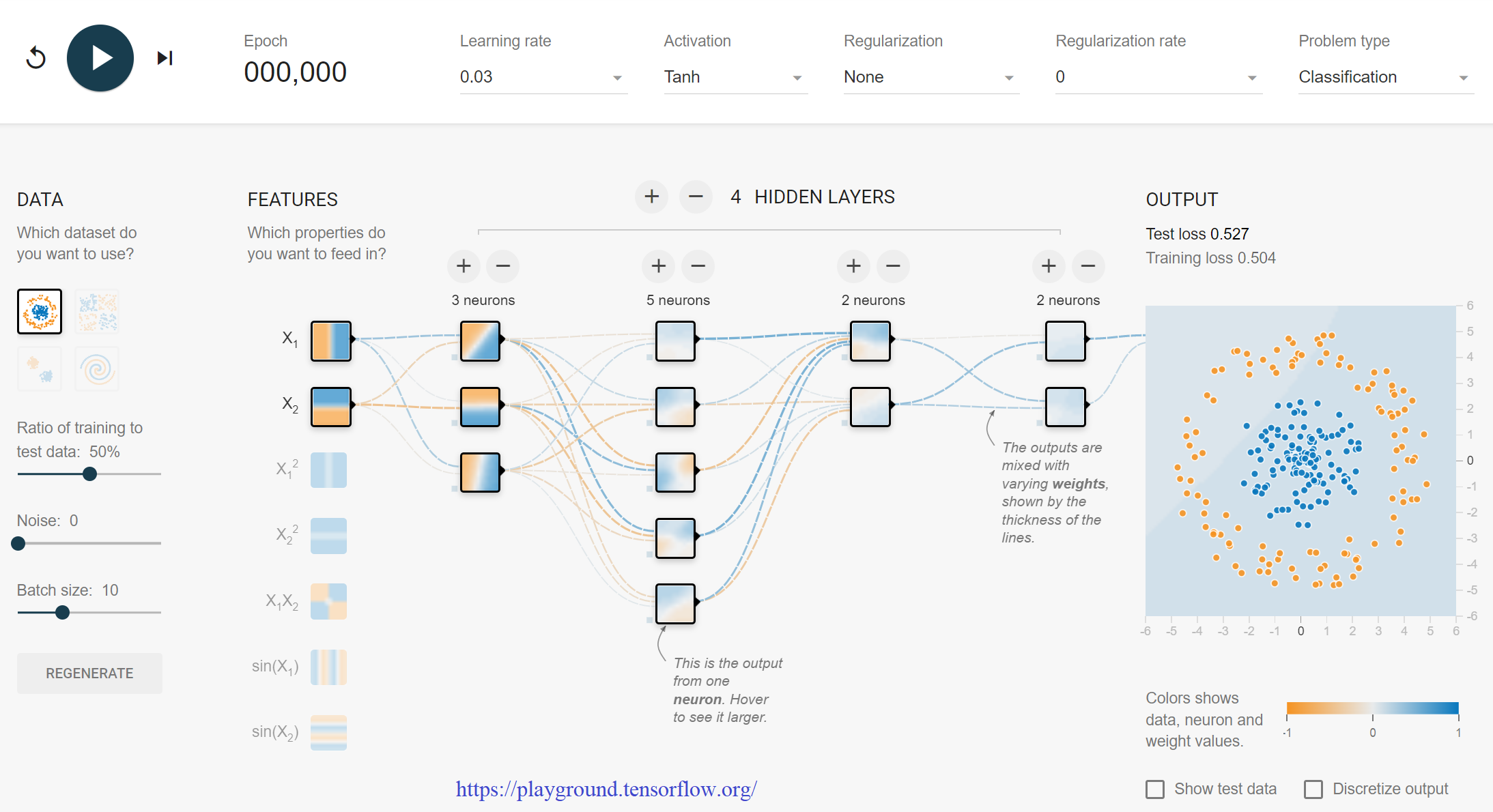

- Neural networks (NNs, also called artificial neural networks, or ANNs) include highly flexible ML algorithms that have been successfully applied to a variety of tasks characterized by non-linearities and interactions among features.

- Besides being commonly used for classification and regression, neural networks are also the foundation for deep learning and reinforcement learning, which can be either supervised or unsupervised.

Supervised Learning

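- Definition: a computer program is said to learn from experience $E$ with respect to some class of tasks $T$ and performance measure $P$ if its performance at tasks in $T$, as measured by $P$, improves with experience $E$

- The task $T$: learn a mapping $f$ from inputs $\boldsymbol{x} \in \mathcal{X}$ to outputs $y \in \mathcal{Y}$

- The inputs $\boldsymbol{x}$: also called features, covariates, or predictors

- The outputs $y$: also called labels, targets, or responses

- The experience $E$: a training set of $N$ input-output pairs, $\mathcal{D} = \{(\boldsymbol{x}_n, y_n)\}_{n=1}^{N}$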

Classification

classification problem

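- the output space is a set of $C$ unordered and mutually exclusive labels known as classes, $\mathcal{Y} = \{1, 2, \ldots, C\}$

- The problem is also called pattern recognition.

- binary classification: just two classes, often denoted by $y \in \{0, 1\}$ or $y \in \{-1, +1\}$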

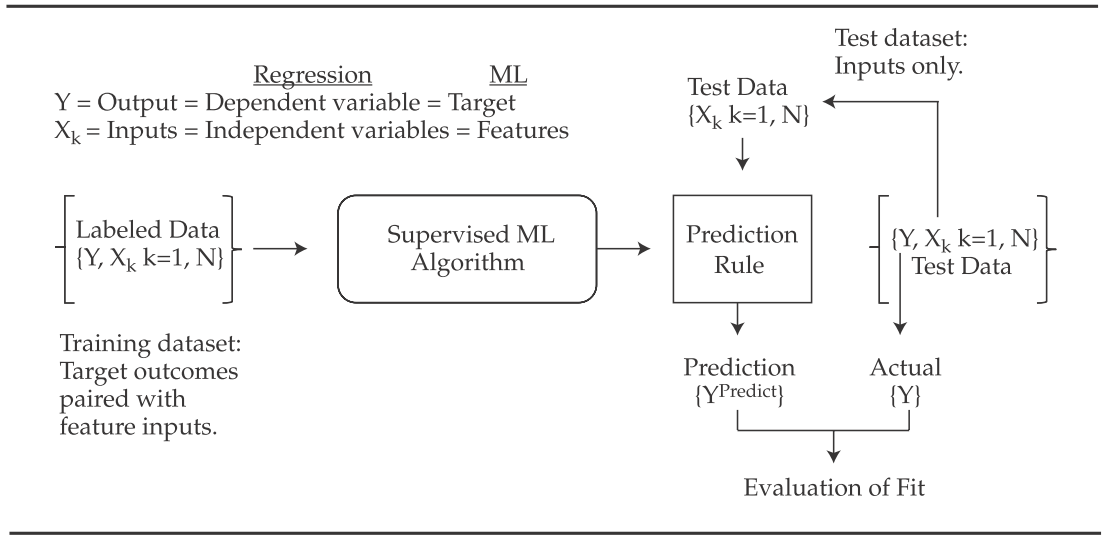

Example: classifying Iris flowers

Iris Flowers Classification

Image Classification

Exploratory data analysis

- exploratory data analysis: see if there are any obvious patterns

- tabular data with a small number of features: pair plot

- higher-dimensional data: first apply dimensionality reduction, then visualize the data in 2d or 3d

Learning a classifier

- decision rule via a 1-dimensional (1d) decision boundary

- decision tree: a more sophisticated decision rule that involves a 2d decision surface

Empirical risk minimization

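- misclassification rate on the training set: $\mathcal{L}(\boldsymbol{\theta}) = \frac{1}{N}\sum_{n=1}^{N} \mathbb{I}\left(y_n \neq f(\boldsymbol{x}_n; \boldsymbol{\theta})\right)$

- loss function: $\ell(y, \hat{y})$, the cost of predicting $\hat{y}$ when the true label is $y$; the misclassification rate uses the zero-one loss $\ell_{01}(y, \hat{y}) = \mathbb{I}(y \neq \hat{y})$

- empirical risk: the average loss of the predictor on the training set, $\mathcal{L}(\boldsymbol{\theta}) = \frac{1}{N}\sum_{n=1}^{N} \ell\left(y_n, f(\boldsymbol{x}_n; \boldsymbol{\theta})\right)$

- model fitting / training via empirical risk minimization: $\hat{\boldsymbol{\theta}} = \operatorname{argmin}_{\boldsymbol{\theta}} \mathcal{L}(\boldsymbol{\theta})$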
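To make the empirical risk minimization recipe concrete, here is a minimal sketch on the Iris data. It assumes scikit-learn's `load_iris` loader and a simplified binary task (setosa vs. the other two species) that the notes do not themselves specify. It searches over 1d thresholds on petal length and keeps the one with the lowest training misclassification rate, i.e. the lowest empirical risk under the zero-one loss.

```python
import numpy as np
from sklearn.datasets import load_iris

# Simplified binary task (an assumption for illustration): setosa vs. the other two species.
X, y = load_iris(return_X_y=True)
petal_length = X[:, 2]            # column 2 holds petal length in cm
y_bin = (y == 0).astype(int)      # 1 = setosa, 0 = versicolor or virginica

def predict(x, threshold):
    """1d decision boundary: predict setosa when petal length is below the threshold."""
    return (x < threshold).astype(int)

def empirical_risk(threshold):
    """Average zero-one loss, i.e. the training misclassification rate."""
    return np.mean(predict(petal_length, threshold) != y_bin)

# ERM over candidate thresholds placed midway between consecutive observed values.
values = np.unique(petal_length)
candidates = (values[:-1] + values[1:]) / 2
risks = np.array([empirical_risk(t) for t in candidates])
best_threshold = candidates[np.argmin(risks)]
print(f"threshold = {best_threshold:.2f}, training error = {risks.min():.3f}")
```

Because setosa petal lengths are well separated from the other species, one threshold reaches zero training error on this binary task; a single 1d threshold cannot separate all three classes, which is why more flexible rules such as decision trees are needed.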

Uncertainty

[We must avoid] false confidence bred from an ignorance of the probabilistic nature of the world, from a desire to see black and white where we should rightly see gray.

--- Immanuel Kant, as paraphrased by Maria Konnikova

- Two types of uncertainty

- epistemic uncertainty or model uncertainty: due to lack of knowledge of the input-output mapping

- aleatoric uncertainty or data uncertainty: due to intrinsic (irreducible) stochasticity in the mapping

- We can capture our uncertainty using the following conditional probability distribution: $p(y = c \mid \boldsymbol{x}; \boldsymbol{\theta}) = f_c(\boldsymbol{x}; \boldsymbol{\theta})$, where $f: \mathcal{X} \to [0, 1]^C$ maps inputs to a probability distribution over the $C$ class labels

Maximum likelihood estimation

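- likelihood function: $p(\mathcal{D} \mid \boldsymbol{\theta}) = \prod_{n=1}^{N} p(y_n \mid \boldsymbol{x}_n; \boldsymbol{\theta})$ (assuming the examples are i.i.d.)

- log likelihood function: $\log p(\mathcal{D} \mid \boldsymbol{\theta}) = \sum_{n=1}^{N} \log p(y_n \mid \boldsymbol{x}_n; \boldsymbol{\theta})$

- Negative Log Likelihood: the average negative log probability of the training set, $\mathrm{NLL}(\boldsymbol{\theta}) = -\frac{1}{N}\sum_{n=1}^{N} \log p(y_n \mid \boldsymbol{x}_n; \boldsymbol{\theta})$

- the maximum likelihood estimate (MLE): $\hat{\boldsymbol{\theta}}_{\text{mle}} = \operatorname{argmin}_{\boldsymbol{\theta}} \mathrm{NLL}(\boldsymbol{\theta})$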
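As a sketch of MLE by minimizing the NLL, the snippet below fits a Bernoulli model to a small hypothetical sample of coin tosses using SciPy's `minimize_scalar`, and compares the numerical optimum with the closed-form Bernoulli MLE $N_1/N$ (the fraction of ones). The data are invented for illustration.

```python
import numpy as np
from scipy.optimize import minimize_scalar

# Hypothetical coin tosses (1 = heads); not data from the notes.
y = np.array([1, 0, 1, 1, 0, 1, 1, 0, 1, 1])

def nll(theta):
    """Average negative log likelihood of i.i.d. Bernoulli(theta) observations."""
    return -np.mean(y * np.log(theta) + (1 - y) * np.log(1 - theta))

# Numerical MLE: minimize the NLL inside the open interval (0, 1).
res = minimize_scalar(nll, bounds=(1e-6, 1 - 1e-6), method="bounded")
theta_numeric = res.x
theta_closed_form = y.mean()      # N_1 / N
print(theta_numeric, theta_closed_form)
```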

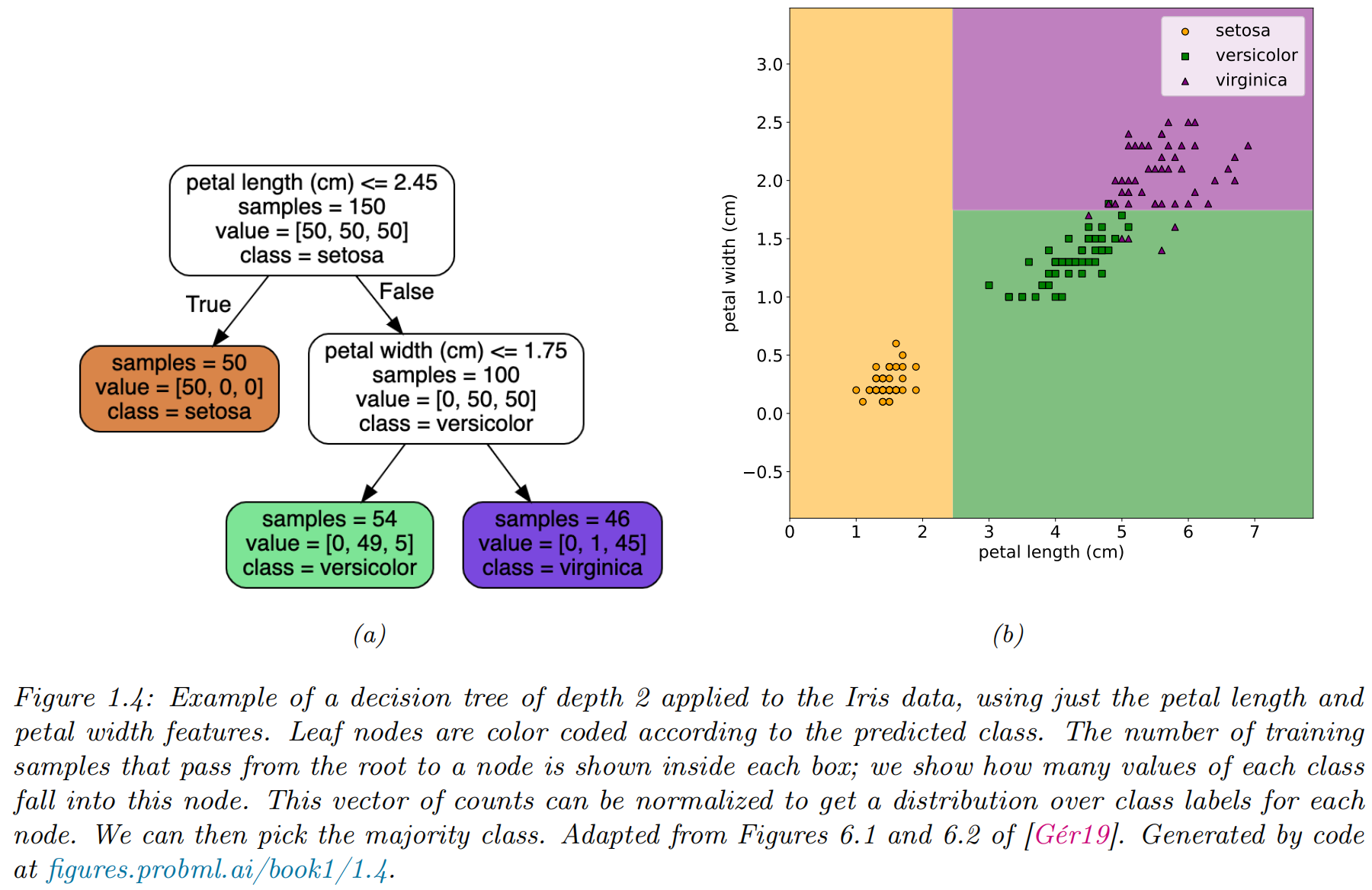

Regression

regression problem

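- the output space: $\mathcal{Y} = \mathbb{R}$ (a real-valued quantity)

- loss function: quadratic loss, or $\ell_2$ loss, $\ell_2(y, \hat{y}) = (y - \hat{y})^2$

- mean squared error or MSE: $\mathrm{MSE}(\boldsymbol{\theta}) = \frac{1}{N}\sum_{n=1}^{N} \left(y_n - f(\boldsymbol{x}_n; \boldsymbol{\theta})\right)^2$

- An Example

- Uncertainty: Gaussian / Normal

- the conditional distribution: $p(y \mid \boldsymbol{x}; \boldsymbol{\theta}) = \mathcal{N}\left(y \mid f(\boldsymbol{x}; \boldsymbol{\theta}), \sigma^2\right)$

- NLL: $\mathrm{NLL}(\boldsymbol{\theta}) = \frac{1}{2\sigma^2}\mathrm{MSE}(\boldsymbol{\theta}) + \frac{1}{2}\log(2\pi\sigma^2)$, so for fixed $\sigma^2$, minimizing the NLL is equivalent to minimizing the MSE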

(simple) Linear regression

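- functional form of model: $f(x; \boldsymbol{\theta}) = b + wx$

- parameters: $\boldsymbol{\theta} = (w, b)$, where $w$ is the slope and $b$ is the offset (bias)

- least squares estimator: $\hat{\boldsymbol{\theta}} = \operatorname{argmin}_{\boldsymbol{\theta}} \mathrm{MSE}(\boldsymbol{\theta})$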
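A minimal sketch of the least squares estimator for simple linear regression, on synthetic data generated from an assumed line $y = 1 + 2x$ plus Gaussian noise. It uses the textbook closed form (slope = sample covariance over sample variance) and cross-checks it with NumPy's generic `np.linalg.lstsq` solver on the design matrix $[1, x]$.

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic data (assumption): y = 1.0 + 2.0 * x + Gaussian noise.
x = rng.uniform(0, 10, size=200)
y = 1.0 + 2.0 * x + rng.normal(scale=1.0, size=x.size)

# Closed-form least squares for f(x; theta) = b + w x.
x_bar, y_bar = x.mean(), y.mean()
w_hat = np.sum((x - x_bar) * (y - y_bar)) / np.sum((x - x_bar) ** 2)
b_hat = y_bar - w_hat * x_bar

# Same estimate from a generic least squares solver on the design matrix [1, x].
A = np.column_stack([np.ones_like(x), x])
(b_lstsq, w_lstsq), *_ = np.linalg.lstsq(A, y, rcond=None)

mse = np.mean((y - (b_hat + w_hat * x)) ** 2)
print(f"w = {w_hat:.3f}, b = {b_hat:.3f}, training MSE = {mse:.3f}")
```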

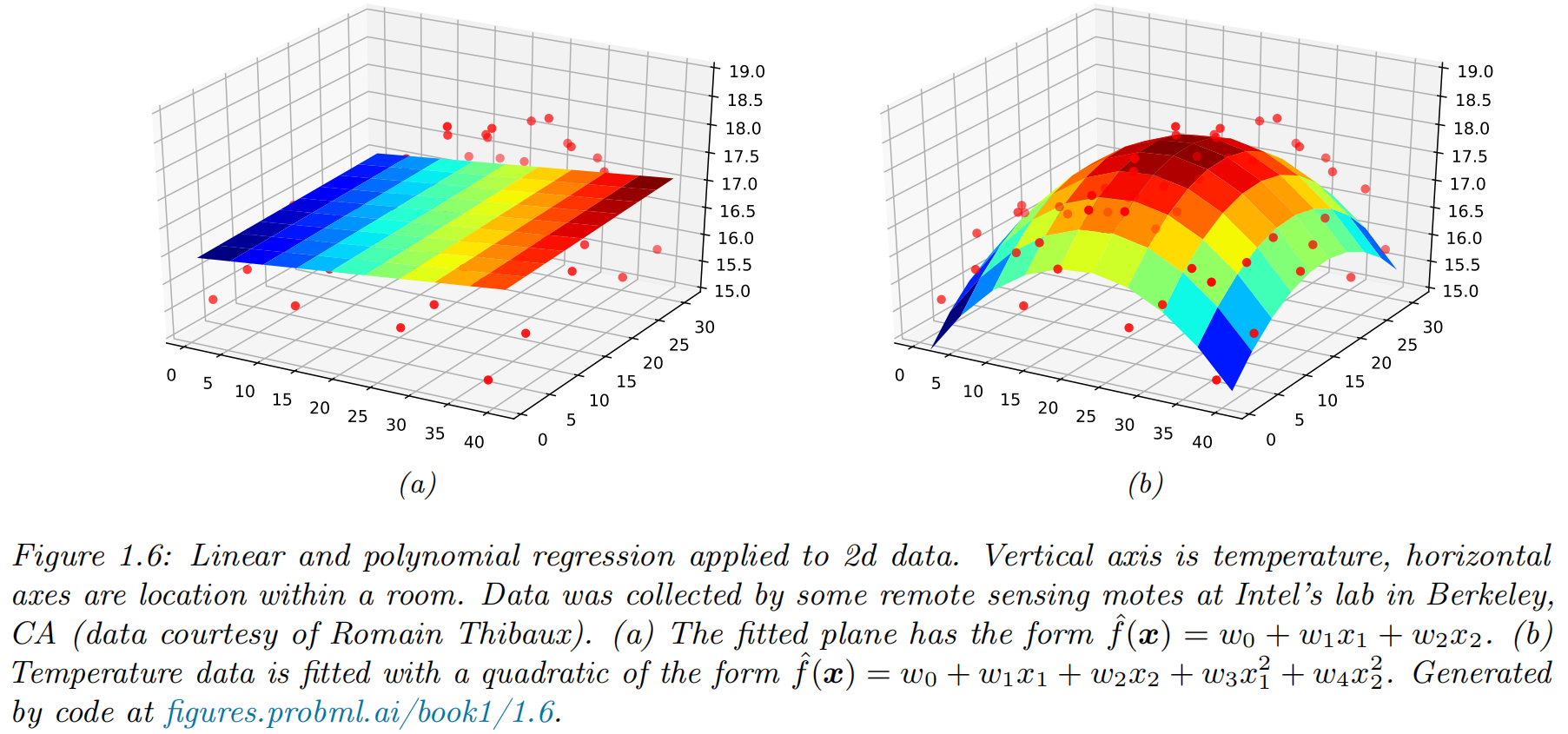

Polynomial regression

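- functional form of model: $f(x; \boldsymbol{w}) = \boldsymbol{w}^\top \phi(x)$, with the feature vector $\phi(x) = [1, x, x^2, \ldots, x^D]$ for a polynomial of degree $D$

- feature preprocessing, or feature engineering: the model is nonlinear in $x$ but still linear in the parameters $\boldsymbol{w}$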
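The feature map can be sketched with NumPy's `np.vander`, which builds the columns $1, x, \ldots, x^D$. The data below are synthetic (an assumed quadratic trend plus small noise); the point is that, once the features are expanded, fitting is ordinary linear least squares in $\boldsymbol{w}$.

```python
import numpy as np

def poly_features(x, degree):
    """Feature map phi(x) = [1, x, x^2, ..., x^degree], one row per input."""
    return np.vander(x, N=degree + 1, increasing=True)

rng = np.random.default_rng(1)
x = np.linspace(-1, 1, 30)
# Synthetic data (assumption): a quadratic trend plus small Gaussian noise.
y = 0.5 - 1.0 * x + 2.0 * x**2 + rng.normal(scale=0.1, size=x.size)

Phi = poly_features(x, degree=2)
# The model is nonlinear in x but linear in w, so ordinary least squares still applies.
w_hat, *_ = np.linalg.lstsq(Phi, y, rcond=None)
print(np.round(w_hat, 2))
```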

Deep neural networks

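- deep neural networks (DNN): a stack of $L$ nested functions: $f(\boldsymbol{x}; \boldsymbol{\theta}) = f_L(f_{L-1}(\cdots(f_1(\boldsymbol{x}))\cdots))$

- the function at layer $\ell$: $f_\ell(\boldsymbol{x}) = f(\boldsymbol{x}; \boldsymbol{\theta}_\ell)$

- the final layer: linear, $f_L(\boldsymbol{x}) = \boldsymbol{w}_L^\top \boldsymbol{x}$, so that $f(\boldsymbol{x}; \boldsymbol{\theta}) = \boldsymbol{w}_L^\top f_{1:L-1}(\boldsymbol{x})$

- the learned feature extractor: $f_{1:L-1}(\boldsymbol{x}) = f_{L-1}(\cdots(f_1(\boldsymbol{x}))\cdots)$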
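The nested structure can be sketched as a plain forward pass. The architecture below (4 inputs, two hidden ReLU layers of width 8, a linear output) and its random, untrained weights are hypothetical choices for illustration; training would fit all layers jointly.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_layer(n_in, n_out):
    """Random weights and zero biases for one hidden layer (illustrative, untrained)."""
    return rng.normal(scale=1 / np.sqrt(n_in), size=(n_out, n_in)), np.zeros(n_out)

def relu(z):
    return np.maximum(z, 0)

# Hypothetical architecture: 4 inputs -> 8 -> 8 hidden units, then a linear output layer.
hidden = [make_layer(4, 8), make_layer(8, 8)]
w_L = rng.normal(size=8)

def feature_extractor(x):
    """f_{1:L-1}(x): apply the hidden layers f_1, ..., f_{L-1} in order."""
    h = x
    for W, b in hidden:
        h = relu(W @ h + b)
    return h

def f(x):
    """Final linear layer: f(x; theta) = w_L^T f_{1:L-1}(x)."""
    return w_L @ feature_extractor(x)

x = np.array([5.1, 3.5, 1.4, 0.2])
print(feature_extractor(x).shape, f(x))
```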

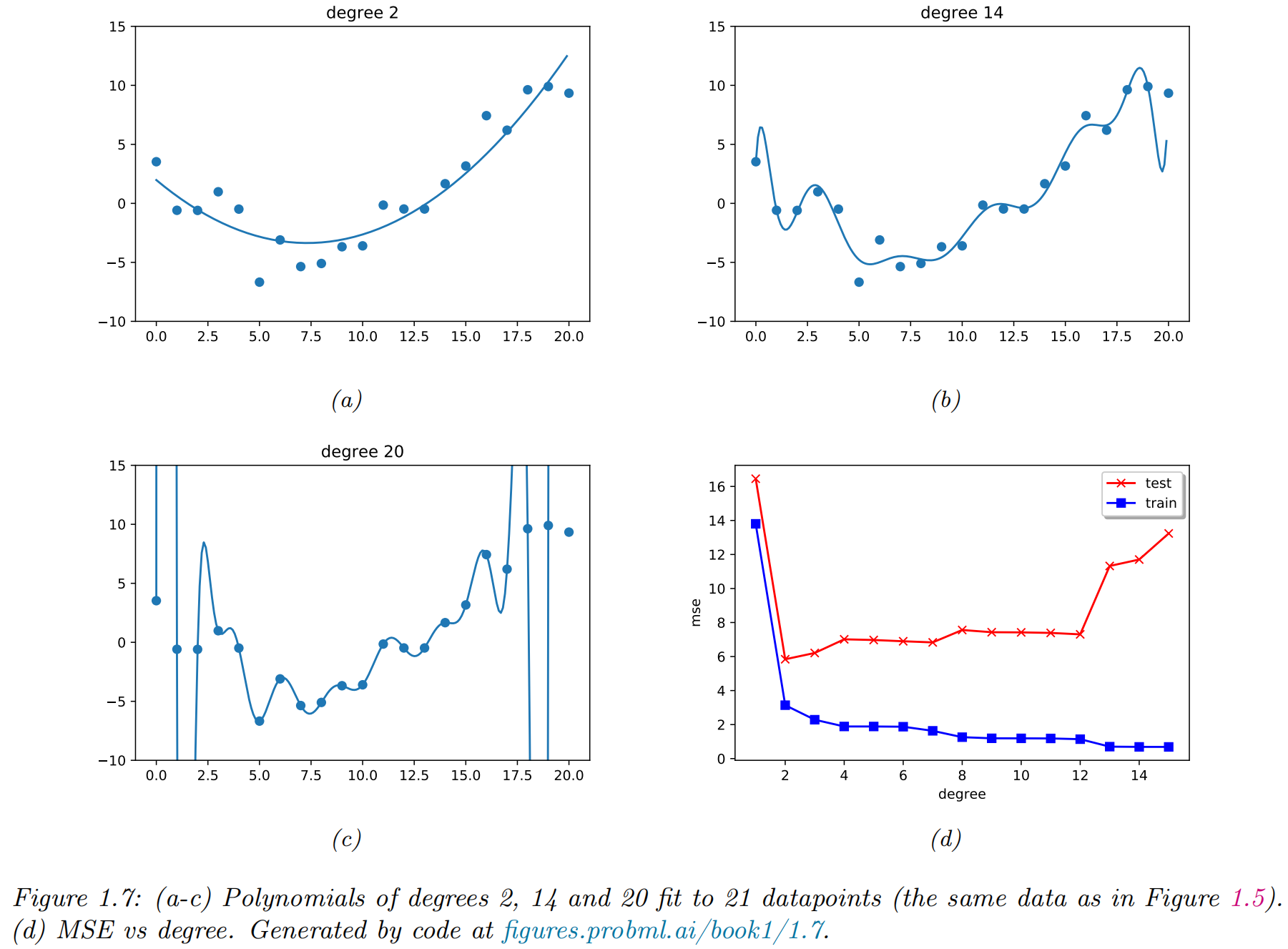

Overfitting and generalization

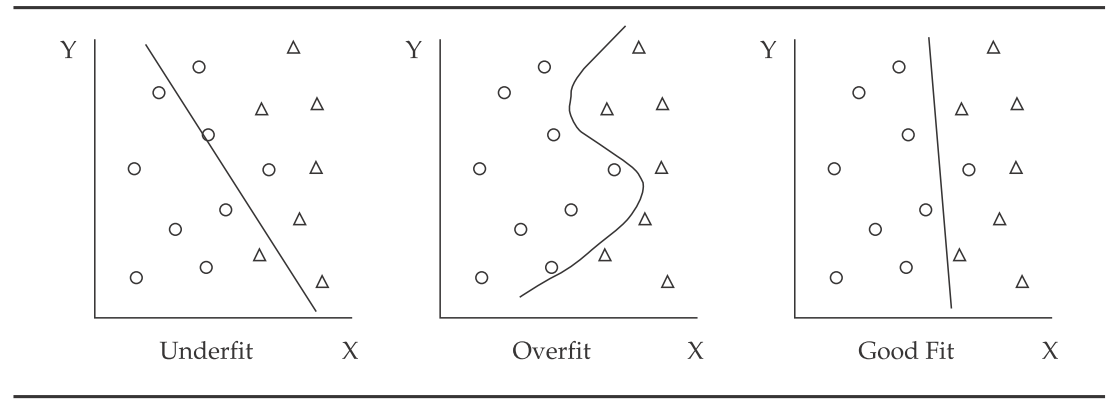

- Underfitting means the model does not capture the relationships in the data.

- Overfitting means the model begins to incorporate noise coming from quirks or spurious correlations

- it mistakes randomness for patterns and relationships

- it has memorized the data rather than learned from it

- it is driven by high noise levels in the data and too much complexity in the model

- complexity refers to the number of features, terms, or branches in the model and to whether the model is linear or non-linear (non-linear is more complex).

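- empirical risk: $\mathcal{L}(\boldsymbol{\theta}; \mathcal{D}_{\text{train}}) = \frac{1}{|\mathcal{D}_{\text{train}}|}\sum_{(\boldsymbol{x}, y) \in \mathcal{D}_{\text{train}}} \ell\left(y, f(\boldsymbol{x}; \boldsymbol{\theta})\right)$

- population risk: $\mathcal{L}(\boldsymbol{\theta}; p^*) = \mathbb{E}_{p^*(\boldsymbol{x}, y)}\left[\ell\left(y, f(\boldsymbol{x}; \boldsymbol{\theta})\right)\right]$, where $p^*$ is the true data-generating distribution

- generalization gap: $\mathcal{L}(\boldsymbol{\theta}; p^*) - \mathcal{L}(\boldsymbol{\theta}; \mathcal{D}_{\text{train}})$; a large gap is a sign of overfitting

- test risk: $\mathcal{L}(\boldsymbol{\theta}; \mathcal{D}_{\text{test}})$, the empirical risk on a held-out test set, used to approximate the population risk because $p^*$ is unknown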
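A minimal sketch of the generalization gap, assuming synthetic data $y = \sin(2\pi x)$ plus Gaussian noise. Polynomials of increasing degree are fit to 15 training points, and a large held-out sample stands in for the population when estimating the test risk.

```python
import numpy as np

rng = np.random.default_rng(2)

def sample(n):
    """Synthetic data (assumption): y = sin(2*pi*x) + Gaussian noise with sd 0.2."""
    x = rng.uniform(0, 1, n)
    return x, np.sin(2 * np.pi * x) + rng.normal(scale=0.2, size=n)

x_train, y_train = sample(15)
x_test, y_test = sample(1000)   # large held-out set, so test risk approximates population risk

def mse(coefs, x, y):
    return np.mean((np.polyval(coefs, x) - y) ** 2)

results = {}
for degree in [1, 3, 9]:
    coefs = np.polyfit(x_train, y_train, degree)
    train_risk, test_risk = mse(coefs, x_train, y_train), mse(coefs, x_test, y_test)
    results[degree] = (train_risk, test_risk)
    print(f"degree {degree}: train {train_risk:.3f}  test {test_risk:.3f}  gap {test_risk - train_risk:.3f}")
```

The training risk can only go down as the degree grows (the models are nested), while the test risk does not follow it, so the gap between them widens for the most flexible model.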

Evaluating ML Algorithm Performance: Errors & Overfitting

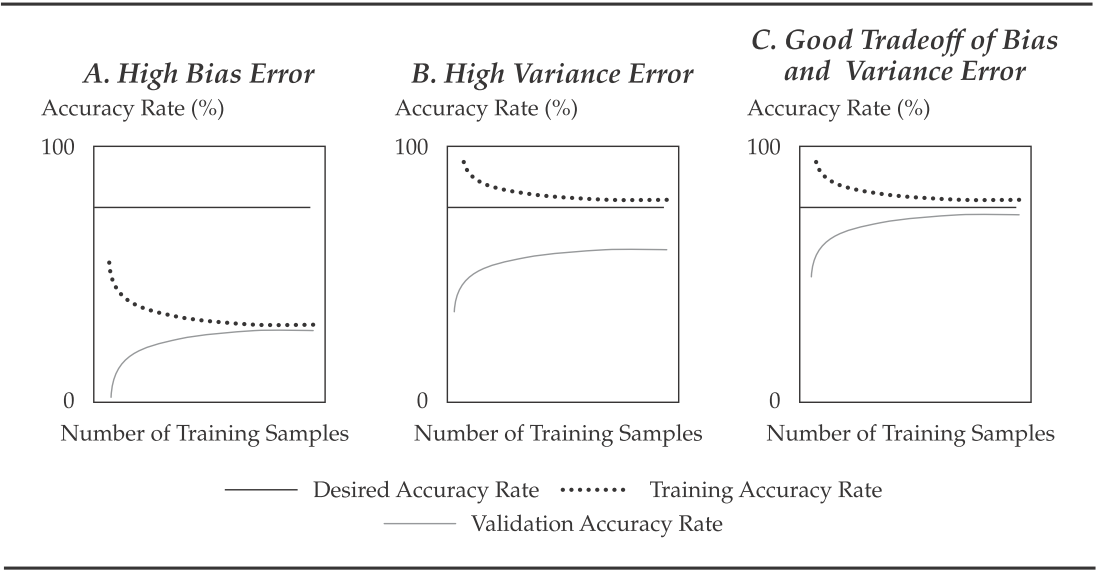

- Data scientists decompose the total out-of-sample error into three sources:

- Bias error, or the degree to which a model fits the training data. Algorithms with erroneous assumptions produce high bias with poor approximation, causing underfitting and high in-sample error.

- Variance error, or how much the model's results change in response to new data from validation and test samples. Unstable models pick up noise and produce high variance, causing overfitting and high out-of-sample error.

- Base error due to randomness in the data.

learning curve

- A learning curve plots the accuracy rate (= 1 – error rate) in the validation or test samples (i.e., out-of-sample) against the amount of data in the training sample

fitting curve

- A fitting curve shows in- and out-of-sample error rates ($E_{\text{in}}$ and $E_{\text{out}}$) plotted against model complexity

Evaluating ML Algorithm Performance: Preventing Overfitting in Supervised ML

Two common guiding principles and two methods are used to reduce overfitting:

- preventing the algorithm from getting too complex during selection and training (regularization)

- proper data sampling achieved by using cross-validation

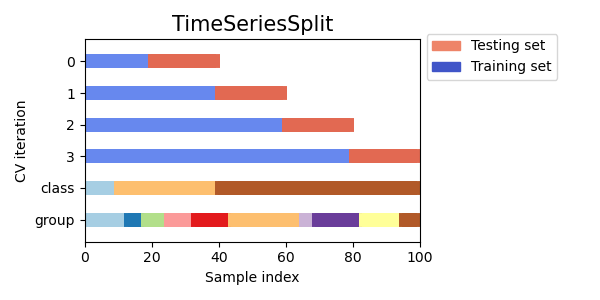

K-fold cross-validation

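- data (excluding the test sample and fresh data) are shuffled randomly and then divided into $k$ equal sub-samples

- This process is repeated $k$ times: each sub-sample is used exactly once as the validation sample, while the remaining $k - 1$ sub-samples form the training sample, and the $k$ validation errors are averaged

- Leave-one-out cross-validation: the special case $k = N$, in which each validation sample contains a single observation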
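The k-fold procedure can be sketched from scratch in NumPy. The `fit_least_squares` and `mse_loss` helpers and the synthetic linear data are hypothetical stand-ins for whatever model is being validated; setting `k` to the number of samples gives leave-one-out cross-validation.

```python
import numpy as np

def k_fold_indices(n, k, seed=0):
    """Shuffle the indices 0..n-1 at random and split them into k (nearly) equal folds."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(n), k)

def cross_validate(X, y, fit, loss, k=5):
    """Return the k validation losses; each fold is the validation sample exactly once."""
    folds = k_fold_indices(len(y), k)
    scores = []
    for i, val_idx in enumerate(folds):
        train_idx = np.concatenate([fold for j, fold in enumerate(folds) if j != i])
        model = fit(X[train_idx], y[train_idx])
        scores.append(loss(model, X[val_idx], y[val_idx]))
    return np.array(scores)

def design(X):
    """Prepend a column of ones for the intercept."""
    return np.column_stack([np.ones(len(X)), X])

def fit_least_squares(X, y):
    return np.linalg.lstsq(design(X), y, rcond=None)[0]

def mse_loss(w, X, y):
    return np.mean((design(X) @ w - y) ** 2)

rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(100, 1))
y = 3.0 * X[:, 0] + rng.normal(size=100)   # synthetic data with unit noise variance

scores = cross_validate(X, y, fit_least_squares, mse_loss, k=5)
loo_scores = cross_validate(X, y, fit_least_squares, mse_loss, k=len(y))  # leave-one-out
print(scores.round(3), scores.mean(), loo_scores.mean())
```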

Cross-validation using scikit-learn: train_test_split

Output (shapes of the full data, the training split, and the test split): `((150, 4), (150,))`, `((90, 4), (90,))`, `((60, 4), (60,))`
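The code that produced these shapes is not included in the notes. The sketch below is modeled on the cross-validation example in the scikit-learn user guide and yields the same shapes: the Iris data (150 samples, 4 features) split with `test_size=0.4`. The linear `SVC` and `random_state=0` are choices taken from that guide, not necessarily the original listing.

```python
from sklearn import datasets, svm
from sklearn.model_selection import train_test_split

X, y = datasets.load_iris(return_X_y=True)
print((X.shape, y.shape))

# Hold out 40% of the samples; random_state fixes the shuffle so the split is reproducible.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.4, random_state=0)
print((X_train.shape, y_train.shape), (X_test.shape, y_test.shape))

# Fit on the training split only, then score (accuracy) on the untouched test split.
clf = svm.SVC(kernel="linear", C=1).fit(X_train, y_train)
print(clf.score(X_test, y_test))
```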

Cross-validation using scikit-learn: computing cross-validated metrics

Output: per-fold scores `array([0.96..., 1. , 0.96..., 0.96..., 1. ])`, the summary `0.98 accuracy with a standard deviation of 0.02`, and a second set of per-fold scores `array([0.96..., 1. ..., 0.96..., 0.96..., 1. ])`
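Again the original listing is missing; this sketch follows the scikit-learn user guide pattern for `cross_val_score`, which prints per-fold scores and a summary line in the same format as above. Using `scoring="f1_macro"` for the second set of scores is an assumption about what the second array in the notes shows.

```python
from sklearn import datasets, svm
from sklearn.model_selection import cross_val_score

X, y = datasets.load_iris(return_X_y=True)
clf = svm.SVC(kernel="linear", C=1, random_state=42)

# 5-fold CV; for a classifier, an integer cv uses stratified folds.
scores = cross_val_score(clf, X, y, cv=5)
print(scores)
print("%0.2f accuracy with a standard deviation of %0.2f" % (scores.mean(), scores.std()))

# Any scorer name accepted by `scoring` can replace accuracy, e.g. macro-averaged F1.
f1_scores = cross_val_score(clf, X, y, cv=5, scoring="f1_macro")
print(f1_scores)
```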

Time Series Split

Output: `TimeSeriesSplit(gap=0, max_train_size=None, n_splits=3, test_size=None)`, followed by the training indices `[0 1 2]` and test indices `[3]` of the first split
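A sketch that reproduces this output, modeled on the `TimeSeriesSplit` example in the scikit-learn documentation; the six-sample toy arrays are that example's data, not data from the notes. Unlike shuffled k-fold, every training window ends before its test index, so the model never sees the future.

```python
import numpy as np
from sklearn.model_selection import TimeSeriesSplit

X = np.array([[1, 2], [3, 4], [1, 2], [3, 4], [1, 2], [3, 4]])
y = np.array([1, 2, 3, 4, 5, 6])

tscv = TimeSeriesSplit(n_splits=3)
print(tscv)
splits = list(tscv.split(X))
for train_idx, test_idx in splits:
    # Training indices always precede the test indices: no look-ahead into the future.
    print(train_idx, test_idx)
```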

No free lunch theorem

All models are wrong, but some models are useful.

--- George Box

- No free lunch theorem: There is no single best model that works optimally for all kinds of problems

- pick a suitable model

- based on domain knowledge

- trial and error

- cross-validation

- Bayesian model selection techniques

Unsupervised learning

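- unsupervised learning: we only observe “inputs” $\mathcal{D} = \{\boldsymbol{x}_n : n = 1, \ldots, N\}$, without any corresponding “outputs” $y_n$

- the task: fitting an unconditional model of the form $p(\boldsymbol{x})$, which can generate new data $\boldsymbol{x}$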

When we’re learning to see, nobody’s telling us what the right answers are — we just look. Every so often, your mother says “that’s a dog”, but that’s very little information. You’d be lucky if you got a few bits of information — even one bit per second — that way. The brain’s visual system has $10^{14}$ neural connections. And you only live for $10^9$ seconds. So it’s no use learning one bit per second. You need more like $10^5$ bits per second. And there’s only one place you can get that much information: from the input itself.

--- Geoffrey Hinton, 1996

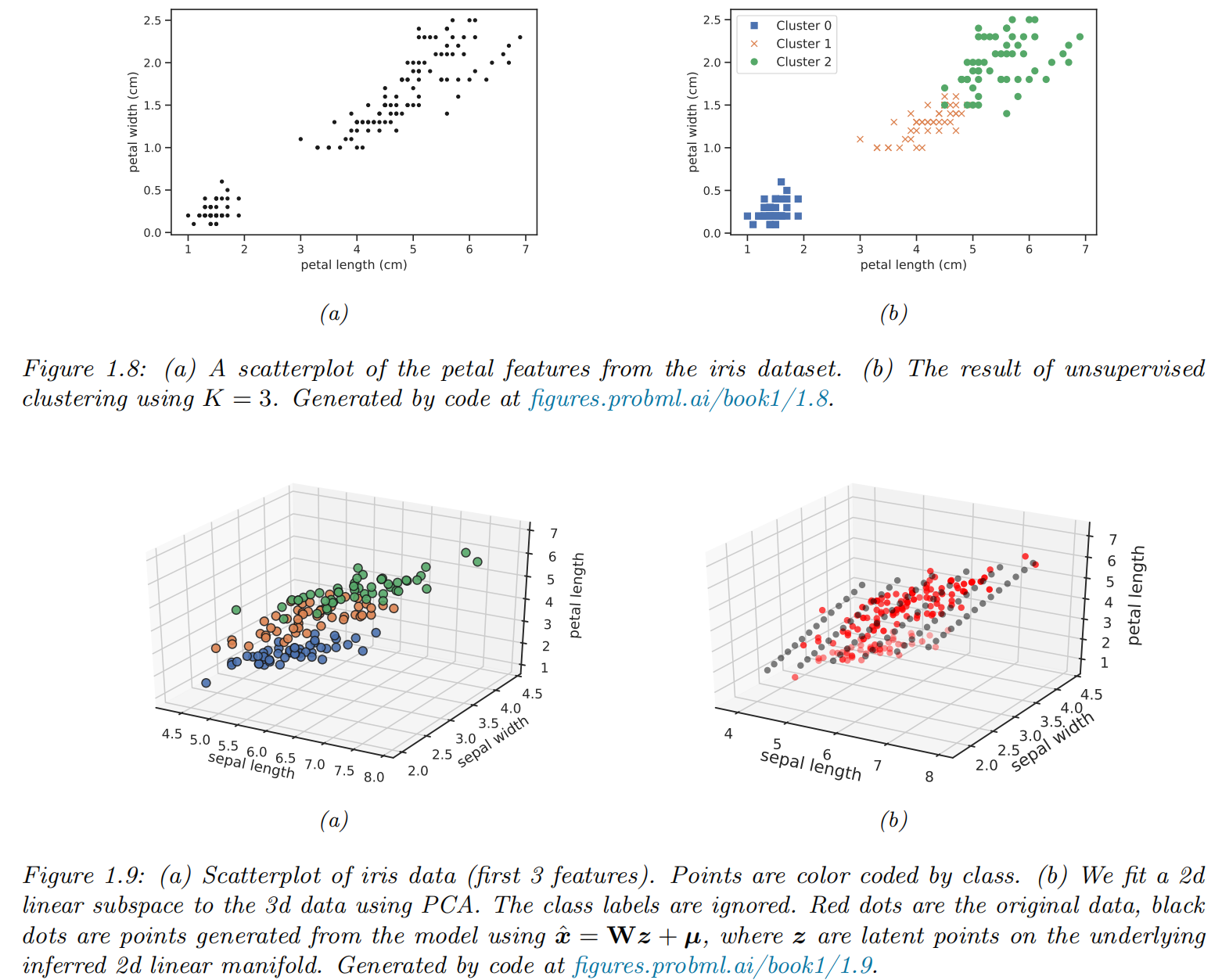

Clustering

- finding clusters in data: partition the input into regions that contain “similar” points.
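The notes do not name a clustering method; k-means is used below as one common example. This minimal sketch runs scikit-learn's `KMeans` on the Iris measurements with the labels discarded, so only the inputs drive the partition. `n_clusters=3` and `random_state=0` are illustrative choices.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import load_iris

X, _ = load_iris(return_X_y=True)   # discard the labels: only the inputs are used
km = KMeans(n_clusters=3, n_init=10, random_state=0).fit(X)
labels = km.labels_                  # cluster index assigned to each flower
print(np.bincount(labels), round(km.inertia_, 2))   # cluster sizes, within-cluster sum of squares
```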

Discovering latent “factors of variation”

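- Assume that each observed high-dimensional output $\boldsymbol{x}_n \in \mathbb{R}^D$ was generated by a set of hidden or unobserved low-dimensional latent factors $\boldsymbol{z}_n \in \mathbb{R}^K$, with prior $p(\boldsymbol{z}_n) = \mathcal{N}(\boldsymbol{z}_n \mid \boldsymbol{0}, \mathbf{I})$

- factor analysis (FA): a linear model $p(\boldsymbol{x}_n \mid \boldsymbol{z}_n; \boldsymbol{\theta}) = \mathcal{N}(\boldsymbol{x}_n \mid \mathbf{W}\boldsymbol{z}_n + \boldsymbol{\mu}, \boldsymbol{\Sigma})$, where $\boldsymbol{\Sigma}$ is diagonal

- principal components analysis (PCA): the special case $\boldsymbol{\Sigma} = \sigma^2\mathbf{I}$ (probabilistic PCA)

- nonlinear models: neural networks, e.g. $p(\boldsymbol{x}_n \mid \boldsymbol{z}_n; \boldsymbol{\theta}) = \mathcal{N}(\boldsymbol{x}_n \mid f(\boldsymbol{z}_n; \boldsymbol{\theta}), \sigma^2\mathbf{I})$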
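A minimal sketch of linear latent factors with scikit-learn's `PCA`, assuming the Iris measurements ($D = 4$) and $K = 2$ latent dimensions. Note that scikit-learn computes classical PCA via an SVD rather than fitting the probabilistic model by maximum likelihood; the projection and linear reconstruction illustrate the same idea.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

X, _ = load_iris(return_X_y=True)
pca = PCA(n_components=2).fit(X)          # K = 2 latent factors for D = 4 observed features
Z = pca.transform(X)                      # low-dimensional coordinates z_n
X_rec = pca.inverse_transform(Z)          # linear map back to the data space
print(pca.explained_variance_ratio_, np.mean((X - X_rec) ** 2))
```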

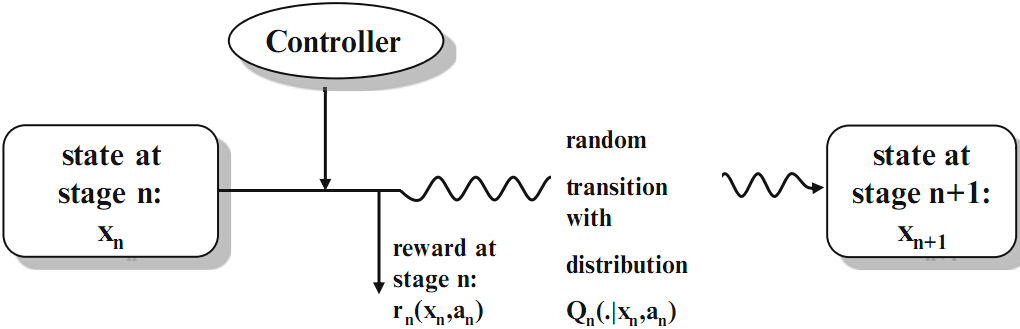

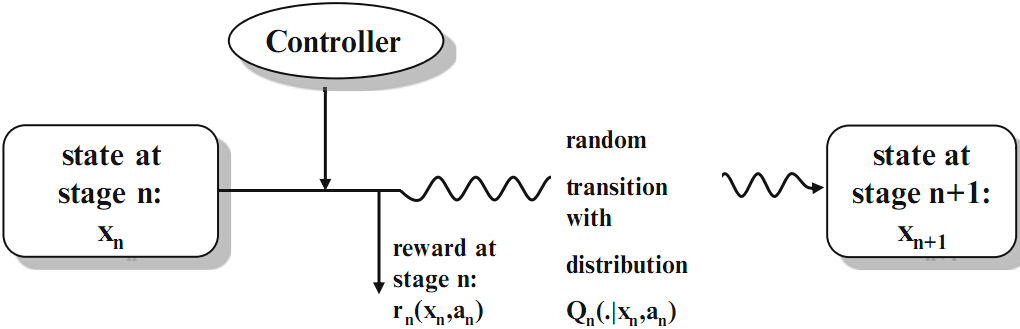

Reinforcement learning

- online / dynamic version of machine learning

- the system or agent has to learn how to interact with its environment

- RL is closely related to the Markov Decision Process (MDP)

Markov Decision Process

The MDP is the sequence of random variables (

A control

Types of MDP problems:

- finite horizon ($N < \infty$) vs. infinite horizon ($N = \infty$)

- complete state observation vs. partial state observation

- problems with constraints vs. without constraints

- total (discounted) cost criterion vs. average cost criterion

Research questions:

- Does an optimal policy exist?

- Does it have a particular form?

- Can an optimal policy be computed efficiently?

- Is it possible to derive properties of the optimal value function analytically?
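For finite state and action spaces with a discounted reward criterion, these questions have a standard answer: an optimal stationary policy exists and can be computed by value iteration. The sketch below runs value iteration on a hypothetical two-state, two-action MDP; the transition probabilities, rewards, and discount factor are invented for illustration and do not come from the notes.

```python
import numpy as np

# Hypothetical 2-state, 2-action MDP; all numbers are made up for illustration.
# P[a, s, t] = probability of moving from state s to state t under action a.
P = np.array([[[0.9, 0.1], [0.4, 0.6]],    # action 0
              [[0.2, 0.8], [0.1, 0.9]]])   # action 1
# R[s, a] = expected one-stage reward for taking action a in state s.
R = np.array([[1.0, 0.0],
              [2.0, -1.0]])
gamma = 0.9                                # discount factor (infinite horizon)

def value_iteration(P, R, gamma, tol=1e-10):
    """Iterate the Bellman optimality operator until the value function stops changing."""
    V = np.zeros(R.shape[0])
    while True:
        # Q(s, a) = R(s, a) + gamma * sum_t P(t | s, a) V(t)
        Q = R + gamma * np.einsum("ast,t->sa", P, V)
        V_new = Q.max(axis=1)
        if np.max(np.abs(V_new - V)) < tol:
            return V_new, Q.argmax(axis=1)   # optimal values and a greedy stationary policy
        V = V_new

V_star, policy = value_iteration(P, R, gamma)
print(V_star, policy)
```

Because the Bellman operator is a contraction with modulus $\gamma < 1$, the iteration converges from any starting point; the greedy policy with respect to the limit is optimal.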

Applications of MDP: Consumption Problem

Suppose there is an investor with given initial capital. At the beginning of each of

Applications of MDP: Cash Balance or Inventory Problem

Imagine a company which tries to find the optimal level of cash over a finite number of

Applications of MDP: Mean-Variance Problem

Consider a small investor who acts on a given financial market. Her aim is to choose among all portfolios which yield at least a certain expected return (benchmark) after

Applications of MDP: Dividend Problem in Risk Theory

Imagine we consider the risk reserve of an insurance company, which earns premiums on the one hand but has to pay out possible claims on the other hand. At the beginning of each period the insurer can decide upon paying a dividend. A dividend can only be paid when the risk reserve at that time point is positive. Once the risk reserve becomes negative, we say that the company is ruined and has to stop its business. Which dividend pay-out policy maximizes the expected discounted dividends until ruin?

Applications of MDP: Bandit Problem

Suppose we have two slot machines with unknown success probability

Applications of MDP: Pricing of American Options

In order to find the fair price of an American option and its optimal exercise time, one has to solve an optimal stopping problem. In contrast to a European option, the buyer of an American option can choose to exercise at any time up to and including the expiration time. Such an optimal stopping problem can be solved in the framework of Markov Decision Processes.
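As one concrete instance of this optimal stopping problem, the sketch below prices an American put on a Cox-Ross-Rubinstein binomial tree. The binomial price model is an assumption made here (the notes do not fix a model), and the parameters (spot 100, strike 100, 5% rate, 20% volatility, one year, 500 steps) are hypothetical. Backward induction compares, at every node, the value of exercising now with the discounted expected value of continuing, which is the Bellman equation of the stopping problem.

```python
import numpy as np

def american_put_crr(S0, K, r, sigma, T, steps):
    """Return (American put, European put) prices on a Cox-Ross-Rubinstein tree."""
    dt = T / steps
    u = np.exp(sigma * np.sqrt(dt))
    d = 1 / u
    q = (np.exp(r * dt) - d) / (u - d)          # risk-neutral probability of an up move
    disc = np.exp(-r * dt)
    # Terminal stock prices S0 * u^j * d^(steps - j), j = number of up moves.
    j = np.arange(steps + 1)
    S = S0 * u**j * d**(steps - j)
    american = np.maximum(K - S, 0.0)
    european = american.copy()
    for n in range(steps - 1, -1, -1):
        j = np.arange(n + 1)
        S = S0 * u**j * d**(n - j)
        # Node (n, j) moves up to (n + 1, j + 1) or down to (n + 1, j).
        continuation = disc * (q * american[1:] + (1 - q) * american[:-1])
        american = np.maximum(K - S, continuation)   # stop (exercise) vs. continue
        european = disc * (q * european[1:] + (1 - q) * european[:-1])
    return american[0], european[0]

american, european = american_put_crr(S0=100, K=100, r=0.05, sigma=0.2, T=1.0, steps=500)
print(f"American put = {american:.4f}, European put = {european:.4f}")
```

The European price on the same tree is a lower bound, since the American holder can always decline to exercise early; the difference is the early exercise premium.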

Discussion

Statistical inference vs. Supervised machine learning

| Property | Statistical inference | Supervised machine learning |
|---|---|---|
| Goal | Causal models with explanatory power | Prediction performance, often with limited explanatory power |
| Data | The data is generated by a model | The data generation process is unknown |
| Framework | Probabilistic | Algorithmic and Probabilistic |
| Expressibility | Typically linear | Non-linear |
| Model selection | Based on information criteria | Numerical optimization |
| Scalability | Limited to lower-dimensional data | Scales to high-dimensional input data |
| Robustness | Prone to over-fitting | Designed for out-of-sample performance |
| Diagnostics | Extensive | Limited |

Financial Econometrics and Machine Learning

Dixon M F, Halperin I, Bilokon P. Machine learning in Finance[M]. Springer International Publishing, 2020.

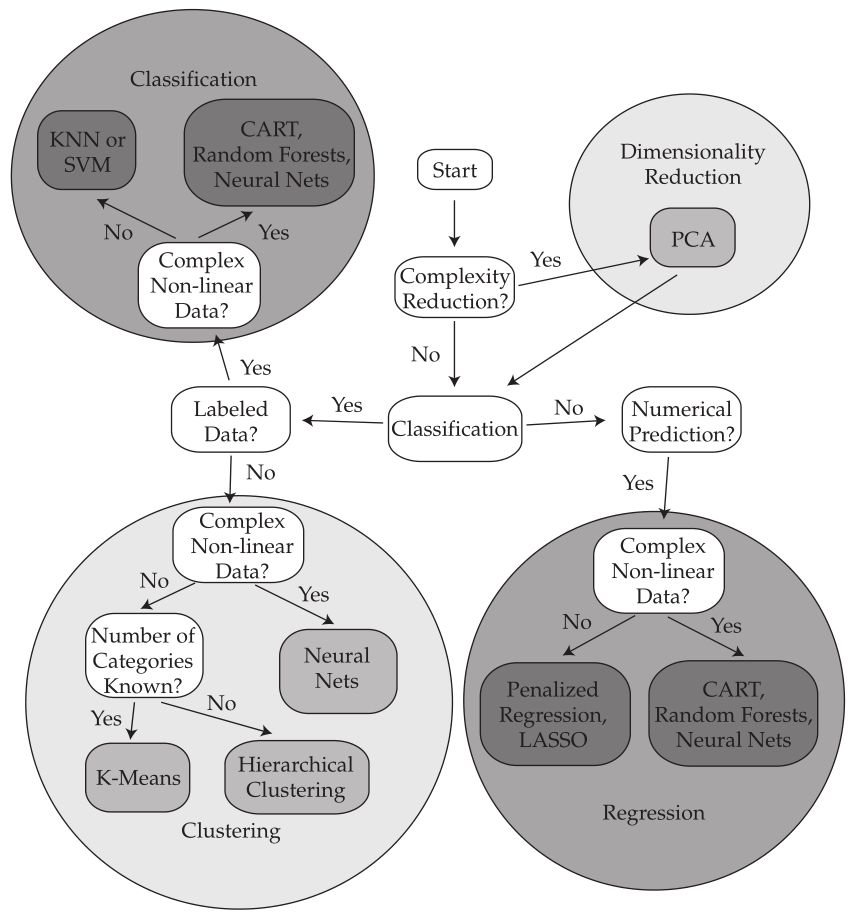

ML Algorithm Types

Selecting ML Algorithms

Useful Python Libraries

Popular Data Science Libraries in Python

Math Libraries

Statistical Libraries

ML and Deep Learning

Decision Theory

Bayesian decision theory

Basics

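The decision maker, or agent, has a set of possible actions, $\mathcal{A}$, to choose from. The costs and benefits of each action depend on the unknown state of nature $h \in \mathcal{H}$, which is encoded in a loss function $\ell(h, a)$.

- The posterior expected loss or risk for each possible action: $\rho(a \mid \boldsymbol{x}) = \mathbb{E}_{p(h \mid \boldsymbol{x})}\left[\ell(h, a)\right] = \sum_{h \in \mathcal{H}} \ell(h, a)\, p(h \mid \boldsymbol{x})$

- The optimal policy (also called the Bayes estimator) specifies what action to take for each possible observation so as to minimize the risk: $\pi^*(\boldsymbol{x}) = \operatorname{argmin}_{a \in \mathcal{A}} \rho(a \mid \boldsymbol{x})$

- Let $U(h, a) = -\ell(h, a)$ be the utility of taking action $a$ in state $h$; then equivalently $\pi^*(\boldsymbol{x}) = \operatorname{argmax}_{a \in \mathcal{A}} \mathbb{E}_{p(h \mid \boldsymbol{x})}\left[U(h, a)\right]$ (the maximum expected utility principle)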
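For a finite set of states and actions, the posterior expected loss is just a vector-matrix product, and the Bayes action is its argmin. The loss matrix and posterior below are hypothetical numbers chosen for illustration; the last line checks that with the zero-one loss the Bayes action coincides with the most probable state (the MAP label).

```python
import numpy as np

# Hypothetical loss matrix l(h, a): rows are states of nature h, columns are actions a.
loss = np.array([[0.0, 10.0, 3.0],
                 [50.0, 0.0, 3.0]])
posterior = np.array([0.8, 0.2])     # hypothetical p(h | x) for one observed x

def bayes_action(loss, posterior):
    """Return argmin_a rho(a|x) and the vector rho(a|x) = sum_h l(h, a) p(h|x)."""
    rho = posterior @ loss
    return int(np.argmin(rho)), rho

a_star, rho = bayes_action(loss, posterior)
print(rho, a_star)                   # the hedging action 2 has the lowest expected loss

# With the zero-one loss (states = actions = class labels), the Bayes action is the MAP label.
zero_one = 1.0 - np.eye(2)
print(bayes_action(zero_one, posterior)[0], int(np.argmax(posterior)))
```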

Classification problems

We use Bayesian decision theory to decide the optimal class label to predict given an observed input $\boldsymbol{x} \in \mathcal{X}$.

Zero-one loss

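Suppose the states of nature correspond to class labels, so $\mathcal{H} = \mathcal{Y} = \{1, \ldots, C\}$, and the actions also correspond to class labels, $\mathcal{A} = \mathcal{Y}$.

- the zero-one loss: $\ell_{01}(y^*, \hat{y}) = \mathbb{I}(y^* \neq \hat{y})$, where $y^*$ is the true label and $\hat{y}$ the prediction

- the posterior expected loss: $\rho(\hat{y} \mid \boldsymbol{x}) = p(\hat{y} \neq y^* \mid \boldsymbol{x}) = 1 - p(y^* = \hat{y} \mid \boldsymbol{x})$

- the optimal policy: $\pi(\boldsymbol{x}) = \operatorname{argmax}_{y \in \mathcal{Y}} p(y \mid \boldsymbol{x})$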

It corresponds to the mode of the posterior distribution, also known as the maximum a posteriori or MAP estimate

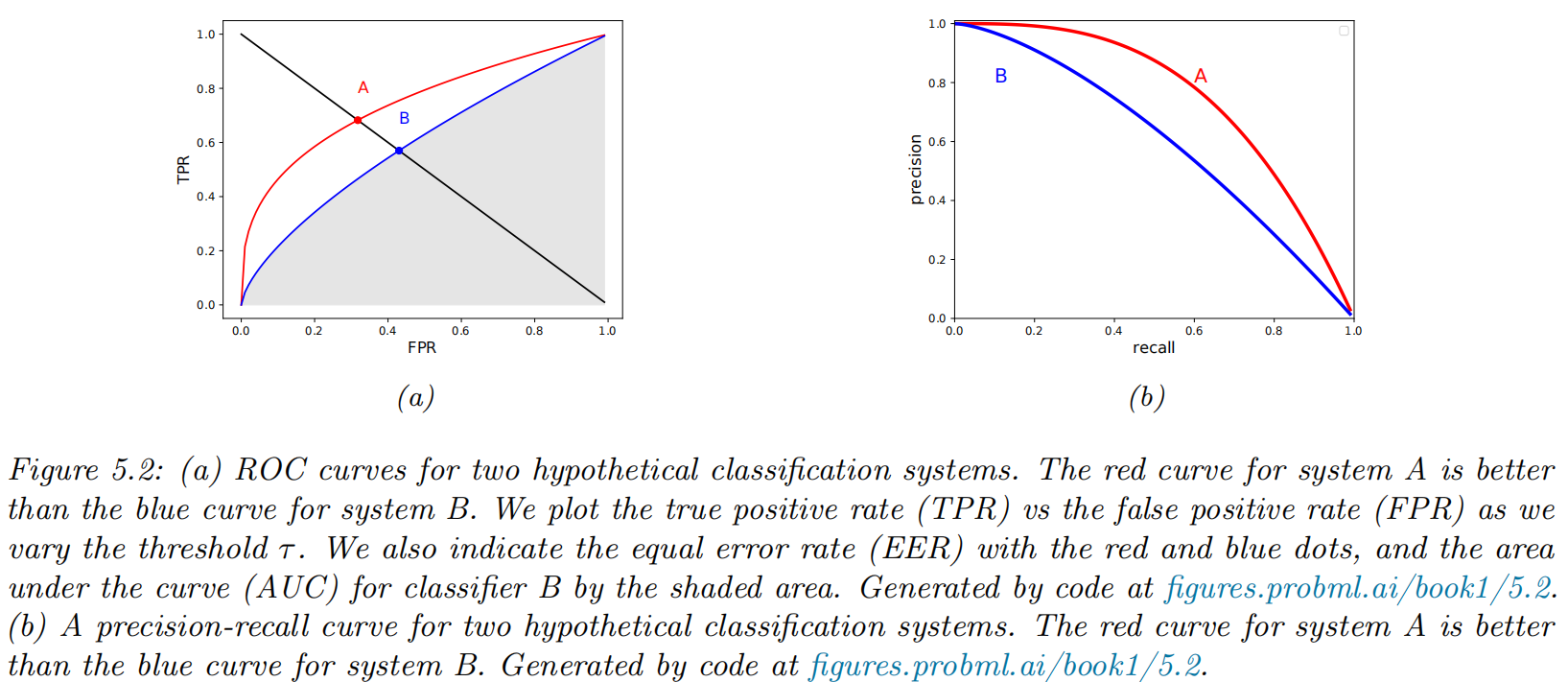

ROC curves

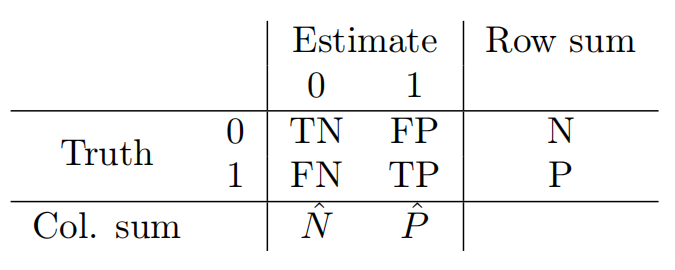

Class confusion matrices

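For any fixed threshold $\tau$, consider the decision rule $\hat{y}_\tau(\boldsymbol{x}) = \mathbb{I}\left(p(y = 1 \mid \boldsymbol{x}) \geq 1 - \tau\right)$.

- The empirical number of false positives (FP) that arise from using this policy on a set of N labeled examples: $FP_\tau = \sum_{n=1}^{N} \mathbb{I}(\hat{y}_\tau(\boldsymbol{x}_n) = 1, y_n = 0)$

- The empirical number of false negatives (FN): $FN_\tau = \sum_{n=1}^{N} \mathbb{I}(\hat{y}_\tau(\boldsymbol{x}_n) = 0, y_n = 1)$

- The empirical number of true positives (TP): $TP_\tau = \sum_{n=1}^{N} \mathbb{I}(\hat{y}_\tau(\boldsymbol{x}_n) = 1, y_n = 1)$

- The empirical number of true negatives (TN): $TN_\tau = \sum_{n=1}^{N} \mathbb{I}(\hat{y}_\tau(\boldsymbol{x}_n) = 0, y_n = 0)$

- the true positive rate (TPR), also known as the sensitivity, recall or hit rate: $TPR_\tau = \frac{TP_\tau}{TP_\tau + FN_\tau}$

- the false positive rate (FPR), also called the false alarm rate, or the type I error rate: $FPR_\tau = \frac{FP_\tau}{FP_\tau + TN_\tau}$

- We can now plot the TPR vs FPR as an implicit function of $\tau$; this plot is the ROC (receiver operating characteristic) curve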

Summarizing ROC curves as a scalar

- Area Under the Curve (AUC)

- higher AUC scores are better

- the maximum is 1

- Equal Error Rate (EER) or cross-over rate

- defined as the value which satisfies FPR = FNR

- lower EER scores are better

- the minimum is 0
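A minimal sketch of building an ROC curve by sweeping the threshold and summarizing it by the AUC, on hypothetical labels and scores. It thresholds the score directly (predict 1 when $p \geq t$), which matches the rule above with $t = 1 - \tau$, integrates with the trapezoidal rule, and cross-checks against scikit-learn's `roc_auc_score`.

```python
import numpy as np
from sklearn.metrics import roc_auc_score

# Hypothetical labels and predicted probabilities p(y = 1 | x_n).
y = np.array([0, 0, 1, 1, 0, 1, 0, 1, 1, 0])
p = np.array([0.1, 0.4, 0.35, 0.8, 0.2, 0.9, 0.6, 0.7, 0.55, 0.3])

def roc_points(y, p):
    """Sweep a threshold t from +inf down through every score; predict 1 when p >= t."""
    thresholds = np.concatenate([[np.inf], np.unique(p)[::-1]])
    fpr, tpr = [], []
    for t in thresholds:
        y_hat = (p >= t).astype(int)
        tp = np.sum((y_hat == 1) & (y == 1))
        fp = np.sum((y_hat == 1) & (y == 0))
        fn = np.sum((y_hat == 0) & (y == 1))
        tn = np.sum((y_hat == 0) & (y == 0))
        tpr.append(tp / (tp + fn))
        fpr.append(fp / (fp + tn))
    return np.array(fpr), np.array(tpr)

fpr, tpr = roc_points(y, p)
# Trapezoidal rule for the area under the ROC curve.
auc = np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2)
print(auc, roc_auc_score(y, p))
```

Here the AUC equals the fraction of (positive, negative) pairs in which the positive example gets the higher score: 22 of the 25 pairs, i.e. 0.88.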

Precision-recall curves

Computing precision and recall

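- the precision: $\mathcal{P}(\tau) = \frac{TP_\tau}{TP_\tau + FP_\tau}$ (the fraction of our detections that are actually positive)

- the recall: $\mathcal{R}(\tau) = \frac{TP_\tau}{TP_\tau + FN_\tau}$ (the fraction of positives that we actually detected)

- If $\hat{y}_n \in \{0, 1\}$ is the predicted label and $y_n \in \{0, 1\}$ is the true label, we can estimate precision and recall as $\mathcal{P} = \frac{\sum_n y_n \hat{y}_n}{\sum_n \hat{y}_n}$ and $\mathcal{R} = \frac{\sum_n y_n \hat{y}_n}{\sum_n y_n}$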

Summarizing PR curves as a scalar

- the precision at K score: quote the precision for a fixed recall level, such as the precision of the first K = 10 entities recalled

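- the interpolated precision: for each recall level, the maximum precision achieved at that recall or at any higher recall (the raw precision need not decrease monotonically as recall increases)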

- the average precision: the average of the interpolated precision, which is equal to the area under the interpolated PR curve

- the mean average precision or mAP: the mean of the AP over a set of different PR curves

F-scores

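- Definition: for a fixed threshold, the $F_\beta$ score is the weighted harmonic mean of precision and recall, $\frac{1}{F_\beta} = \frac{1}{1 + \beta^2}\frac{1}{\mathcal{P}} + \frac{\beta^2}{1 + \beta^2}\frac{1}{\mathcal{R}}$, or equivalently $F_\beta = \frac{(1 + \beta^2)\,\mathcal{P}\mathcal{R}}{\beta^2\mathcal{P} + \mathcal{R}}$

- A special case: $\beta = 1$ gives the $F_1$ score, $F_1 = \frac{2\mathcal{P}\mathcal{R}}{\mathcal{P} + \mathcal{R}}$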
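A short sketch computing precision, recall, and $F_\beta$ from the sum formulas above, on hypothetical binary labels and predictions, with scikit-learn's metric functions printed alongside as a cross-check.

```python
import numpy as np
from sklearn.metrics import f1_score, fbeta_score, precision_score, recall_score

y_true = np.array([1, 1, 1, 1, 0, 0, 0, 0, 0, 0])   # hypothetical labels
y_pred = np.array([1, 1, 1, 0, 1, 1, 0, 0, 0, 0])   # hypothetical predictions

tp = np.sum(y_true * y_pred)          # sum_n y_n * yhat_n
precision = tp / np.sum(y_pred)       # TP / (TP + FP)
recall = tp / np.sum(y_true)          # TP / (TP + FN)

def f_beta(p, r, beta=1.0):
    """Weighted harmonic mean of precision p and recall r."""
    return (1 + beta**2) * p * r / (beta**2 * p + r)

print(precision, recall, f_beta(precision, recall), f_beta(precision, recall, beta=2.0))
# Cross-check against scikit-learn's implementations.
print(precision_score(y_true, y_pred), recall_score(y_true, y_pred),
      f1_score(y_true, y_pred), fbeta_score(y_true, y_pred, beta=2.0))
```

With 3 true positives, 5 predicted positives, and 4 actual positives, precision is 0.6 and recall is 0.75; $\beta > 1$ weights recall more heavily, so $F_2$ lands closer to the recall than $F_1$ does.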

Regression problems

We assume the set of actions and states are both equal to the real line, $\mathcal{A} = \mathcal{H} = \mathbb{R}$.

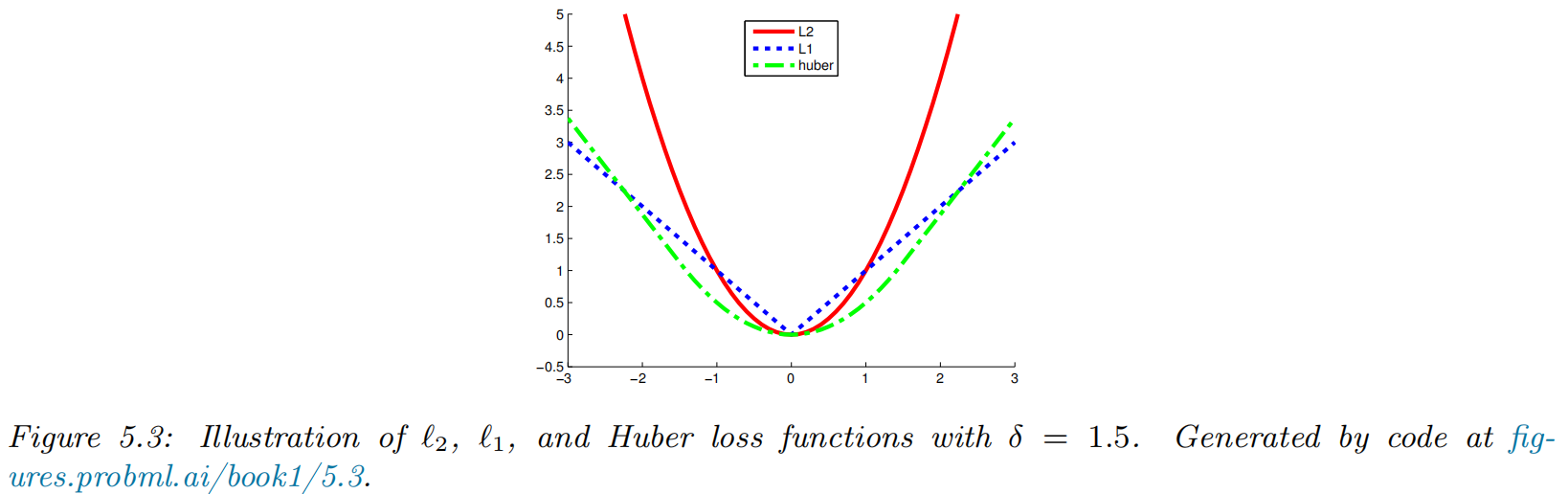

L2 loss

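- the $\ell_2$ loss: $\ell_2(h, a) = (h - a)^2$

- the risk function: $\rho(a \mid \boldsymbol{x}) = \mathbb{E}\left[(h - a)^2 \mid \boldsymbol{x}\right] = \mathbb{E}\left[h^2 \mid \boldsymbol{x}\right] - 2a\,\mathbb{E}\left[h \mid \boldsymbol{x}\right] + a^2$

- the minimum mean squared error estimate or MMSE estimate: $\pi(\boldsymbol{x}) = \mathbb{E}\left[h \mid \boldsymbol{x}\right] = \int h\, p(h \mid \boldsymbol{x})\, dh$, the posterior mean

- The optimal action follows from setting the derivative of the risk to zero: $\frac{\partial}{\partial a}\rho(a \mid \boldsymbol{x}) = -2\,\mathbb{E}\left[h \mid \boldsymbol{x}\right] + 2a = 0$

L1 loss

- the $\ell_1$ loss: $\ell_1(h, a) = |h - a|$, which is more robust to outliers than the $\ell_2$ loss

- the optimal estimate is the posterior median

Huber loss

Let $\delta > 0$ be a tunable parameter and $r = h - a$. The Huber loss is quadratic for small errors and linear for large ones: $\ell_\delta(h, a) = \frac{r^2}{2}$ if $|r| \leq \delta$, and $\ell_\delta(h, a) = \delta|r| - \frac{\delta^2}{2}$ if $|r| > \delta$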
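A Monte Carlo sketch of these results. Samples from an exponential distribution stand in for a skewed posterior $p(h \mid \boldsymbol{x})$ (an assumption for illustration); the expected loss of each candidate action is estimated on a grid, and the minimizers are compared with the sample mean and median. The Huber parameter $\delta = 1$ is an arbitrary choice.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical samples standing in for a skewed posterior p(h | x).
h = rng.exponential(scale=1.0, size=20_000)

def l2(h, a):
    return (h - a) ** 2

def l1(h, a):
    return np.abs(h - a)

def huber(h, a, delta=1.0):
    r = np.abs(h - a)
    return np.where(r <= delta, r**2 / 2, delta * r - delta**2 / 2)

actions = np.linspace(0.0, 3.0, 601)
best = {}
for name, loss in [("l2", l2), ("l1", l1), ("huber", huber)]:
    # Monte Carlo estimate of the posterior expected loss rho(a | x) for each candidate action.
    risk = np.array([loss(h, a).mean() for a in actions])
    best[name] = actions[np.argmin(risk)]

print(best, "mean:", h.mean(), "median:", np.median(h))
```

The $\ell_2$ minimizer lands on the mean and the $\ell_1$ minimizer on the median, up to the grid spacing; for this right-skewed distribution the Huber minimizer typically falls between them.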

Probabilistic prediction problems

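We assume the true “state of nature” is a distribution, $h = p(Y \mid \boldsymbol{x})$, the action is another distribution, $a = q(Y \mid \boldsymbol{x})$, and we want to pick $q$ to minimize $\mathbb{E}\left[\ell(p, q)\right]$ for a given $\boldsymbol{x}$.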

KL, cross-entropy and log-loss

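A common form of loss functions for comparing two distributions is the Kullback Leibler divergence, or KL divergence, which is defined as follows:

$$D_{\mathrm{KL}}(p \,\|\, q) = \sum_{y \in \mathcal{Y}} p(y)\log\frac{p(y)}{q(y)} = \underbrace{\sum_{y} p(y)\log p(y)}_{-\mathbb{H}(p)} \underbrace{- \sum_{y} p(y)\log q(y)}_{\mathbb{H}_{ce}(p, q)}$$

- The KL is the extra number of bits we need to use to compress the data due to using the incorrect distribution $q$ instead of the true $p$ (with log base 2); it satisfies $D_{\mathrm{KL}}(p \,\|\, q) \geq 0$, with equality iff $p = q$

- Since $\mathbb{H}(p)$ does not depend on $q$, minimizing the KL with respect to $q$ is equivalent to minimizing the cross-entropy $\mathbb{H}_{ce}(p, q) = -\sum_{y} p(y)\log q(y)$; if $p$ puts all its mass on the observed label $y$, this reduces to the log-loss $-\log q(y)$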
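A numerical check of the decomposition $D_{\mathrm{KL}}(p \,\|\, q) = \mathbb{H}_{ce}(p, q) - \mathbb{H}(p)$ for two hypothetical discrete distributions, measured in bits, with SciPy's `scipy.stats.entropy` (which returns the KL divergence when given two distributions) as a cross-check.

```python
import numpy as np
from scipy.stats import entropy

p = np.array([0.5, 0.25, 0.25])   # hypothetical "true" distribution
q = np.array([0.4, 0.4, 0.2])     # hypothetical model distribution

H_p = -np.sum(p * np.log2(p))         # entropy H(p), in bits
H_pq = -np.sum(p * np.log2(q))        # cross-entropy H_ce(p, q)
KL = np.sum(p * np.log2(p / q))       # D_KL(p || q)

print(H_p, H_pq, KL)
print(np.isclose(KL, H_pq - H_p), np.isclose(KL, entropy(p, q, base=2)))
```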

Proper scoring rules

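- proper scoring rule: a loss function $\ell$ such that $\ell(p, p) \leq \ell(p, q)$, with equality iff $p = q$; it is minimized only when the predicted distribution $q$ matches the true distribution $p$ (the cross-entropy is one example)

- Brier score: $\ell(p, q) = \frac{1}{C}\sum_{c=1}^{C}\left(q(y = c \mid \boldsymbol{x}) - p(y = c \mid \boldsymbol{x})\right)^2$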

Choosing the "right" model

Bayesian hypothesis testing

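- two hypotheses / models

- null hypothesis: $M_0$

- alternative hypothesis: $M_1$

- 0-1 loss: the optimal decision is to pick $M_1$ iff $\frac{p(M_1 \mid \mathcal{D})}{p(M_0 \mid \mathcal{D})} > 1$; with a uniform prior $p(M_0) = p(M_1) = 0.5$, this becomes: select $M_1$ iff $\frac{p(\mathcal{D} \mid M_1)}{p(\mathcal{D} \mid M_0)} > 1$

- Bayes factor: the ratio of marginal likelihoods of the two models, $B_{1,0} = \frac{p(\mathcal{D} \mid M_1)}{p(\mathcal{D} \mid M_0)}$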

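| Bayes factor $B_{1,0}$ | Interpretation |
|---|---|
| $B < \frac{1}{100}$ | Decisive evidence for $M_0$ |
| $B < \frac{1}{10}$ | Strong evidence for $M_0$ |
| $\frac{1}{10} < B < \frac{1}{3}$ | Moderate evidence for $M_0$ |
| $\frac{1}{3} < B < 1$ | Weak evidence for $M_0$ |
| $1 < B < 3$ | Weak evidence for $M_1$ |
| $3 < B < 10$ | Moderate evidence for $M_1$ |
| $B > 10$ | Strong evidence for $M_1$ |
| $B > 100$ | Decisive evidence for $M_1$ |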

Example: Testing if a coin is fair

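- test if a coin is fair: $M_0$: $\theta = 0.5$ vs. $M_1$: $\theta \in [0, 1]$ with prior $\theta \sim \mathrm{Beta}(\alpha_1, \alpha_0)$; the data contain $N_1$ heads and $N_0$ tails, $N = N_1 + N_0$

- marginal likelihood under $M_0$: $p(\mathcal{D} \mid M_0) = \left(\frac{1}{2}\right)^{N}$; under $M_1$: $p(\mathcal{D} \mid M_1) = \int p(\mathcal{D} \mid \theta)\, p(\theta)\, d\theta = \frac{B(\alpha_1 + N_1, \alpha_0 + N_0)}{B(\alpha_1, \alpha_0)}$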
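The two marginal likelihoods can be computed exactly with the standard library's `math.lgamma`, working in log space to avoid underflow for long sequences. This sketch uses a uniform prior ($\alpha_1 = \alpha_0 = 1$) by default and evaluates the Bayes factor for a few hypothetical outcomes of 10 tosses.

```python
from math import exp, lgamma, log

def log_beta(a, b):
    """log B(a, b) computed via log-gamma for numerical stability."""
    return lgamma(a) + lgamma(b) - lgamma(a + b)

def bayes_factor_coin(n_heads, n_tails, alpha1=1.0, alpha0=1.0):
    """B_{1,0} = p(D|M1) / p(D|M0) for one observed sequence of tosses.

    M0: theta = 0.5.  M1: theta ~ Beta(alpha1, alpha0); the defaults give a uniform prior.
    """
    n = n_heads + n_tails
    log_m0 = n * log(0.5)
    log_m1 = log_beta(alpha1 + n_heads, alpha0 + n_tails) - log_beta(alpha1, alpha0)
    return exp(log_m1 - log_m0)

for heads in [5, 8, 10]:
    print(heads, "heads out of 10:", round(bayes_factor_coin(heads, 10 - heads), 3))
```

With 5 heads the factor is $1024/2772 \approx 0.37$ (weak evidence for the fair coin), with 8 heads it is $1024/495 \approx 2.07$ (weak evidence for $M_1$), and with 10 heads it is $1024/11 \approx 93$ (strong evidence for $M_1$).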

Bayesian model selection

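- Model Selection: pick the most appropriate model from a set $\mathcal{M}$ of candidate models

- posterior: $p(m \mid \mathcal{D}) = \frac{p(\mathcal{D} \mid m)\, p(m)}{\sum_{m' \in \mathcal{M}} p(\mathcal{D} \mid m')\, p(m')}$, and we pick the MAP model $\hat{m} = \operatorname{argmax}_{m \in \mathcal{M}} p(m \mid \mathcal{D})$

- uniform prior: with $p(m) = 1/|\mathcal{M}|$, the MAP model is the one that maximizes $p(\mathcal{D} \mid m)$

- marginal likelihood: $p(\mathcal{D} \mid m) = \int p(\mathcal{D} \mid \boldsymbol{\theta}, m)\, p(\boldsymbol{\theta} \mid m)\, d\boldsymbol{\theta}$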

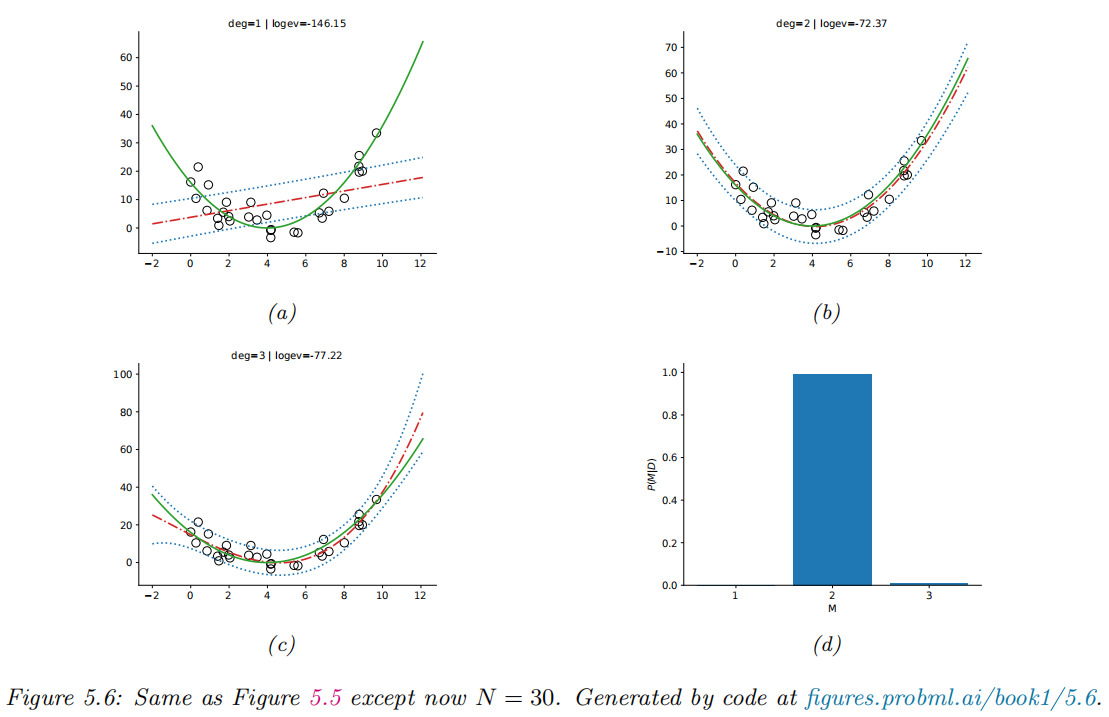

Example: polynomial regression

Occam's razor

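- Occam’s razor: if two models explain the data equally well, pick the simpler one

- Bayesian Occam’s razor effect: the marginal likelihood automatically prefers the simpler model, because a complex model spreads its prior probability over many more possible datasets and so assigns less probability to the observed one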

Connection between cross validation and marginal likelihood

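Marginal likelihood is closely related to the leave-one-out cross-validation (LOO-CV) estimate. By the chain rule of probability, the marginal likelihood can be written sequentially:

$$p(\mathcal{D} \mid m) = \prod_{n=1}^{N} p(y_n \mid \boldsymbol{x}_n, \mathcal{D}_{1:n-1}, m)$$

where

$$p(y \mid \boldsymbol{x}, \mathcal{D}_{1:n-1}, m) = \int p(y \mid \boldsymbol{x}, \boldsymbol{\theta})\, p(\boldsymbol{\theta} \mid \mathcal{D}_{1:n-1}, m)\, d\boldsymbol{\theta}$$

Suppose we use a plugin approximation to the above distribution to get

$$p(y \mid \boldsymbol{x}, \mathcal{D}_{1:n-1}, m) \approx p(y \mid \boldsymbol{x}, \hat{\boldsymbol{\theta}}_m(\mathcal{D}_{1:n-1}))$$

Then we get

$$\log p(\mathcal{D} \mid m) \approx \sum_{n=1}^{N} \log p(y_n \mid \boldsymbol{x}_n, \hat{\boldsymbol{\theta}}_m(\mathcal{D}_{1:n-1}))$$

which resembles a LOO-CV estimate of the log likelihood, except that it is evaluated sequentially: each point is predicted from the points before it.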

Information criteria

- the marginal likelihood can be difficult to compute

- the result can be quite sensitive to the choice of prior

The Bayesian information criterion (BIC)

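- The Bayesian information criterion or BIC can be thought of as a simple approximation to the log marginal likelihood. Starting from the Laplace approximation,

$$\log p(\mathcal{D} \mid m) \approx \log p(\mathcal{D} \mid \hat{\boldsymbol{\theta}}_{\text{map}}) + \log p(\hat{\boldsymbol{\theta}}_{\text{map}}) - \frac{1}{2}\log|\mathbf{H}|$$

where $\mathbf{H}$ is the Hessian of the negative log joint $-\log p(\mathcal{D}, \boldsymbol{\theta})$ evaluated at $\hat{\boldsymbol{\theta}}_{\text{map}}$ (constant terms are dropped).

- Assuming a uniform prior, $p(\boldsymbol{\theta}) \propto 1$, the prior term drops out and $\hat{\boldsymbol{\theta}}_{\text{map}}$ becomes the MLE $\hat{\boldsymbol{\theta}}$; approximating $\log|\mathbf{H}| \approx D_m \log N + \text{const}$, where $D_m = \dim(\boldsymbol{\theta})$ for model $m$ and $N$ is the number of samples, gives

- the BIC score: $J_{\text{bic}}(m) = \log p(\mathcal{D} \mid \hat{\boldsymbol{\theta}}, m) - \frac{D_m}{2}\log N$

- the BIC loss: $\mathcal{L}_{\text{bic}}(m) = -2\log p(\mathcal{D} \mid \hat{\boldsymbol{\theta}}, m) + D_m \log N$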

Akaike information criterion

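- the AIC loss: $\mathcal{L}_{\text{aic}}(m) = -2\log p(\mathcal{D} \mid \hat{\boldsymbol{\theta}}, m) + 2D_m$

- This penalizes complex models less heavily than BIC, since the regularization term is independent of $N$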
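A minimal sketch comparing the BIC and AIC losses for polynomial regression of increasing degree, on synthetic quadratic data (an assumption). For a Gaussian noise model, plugging the MLE variance $\hat{\sigma}^2$ (the mean squared residual) into the log likelihood gives $\log p(\mathcal{D} \mid \hat{\boldsymbol{\theta}}) = -\frac{N}{2}\left(\log(2\pi\hat{\sigma}^2) + 1\right)$; the parameter count $D_m$ here includes the noise variance.

```python
import numpy as np

rng = np.random.default_rng(3)
# Synthetic data (assumption): quadratic trend plus Gaussian noise.
N = 50
x = np.linspace(-2, 2, N)
y = 1.0 + 0.5 * x - 0.8 * x**2 + rng.normal(scale=0.5, size=N)

def gaussian_loglik_mle(y, y_hat):
    """Maximized Gaussian log likelihood, with sigma^2 set to the mean squared residual."""
    sigma2 = np.mean((y - y_hat) ** 2)
    return -0.5 * len(y) * (np.log(2 * np.pi * sigma2) + 1)

scores = {}
for degree in range(1, 7):
    coefs = np.polyfit(x, y, degree)
    ll = gaussian_loglik_mle(y, np.polyval(coefs, x))
    D = degree + 2                       # polynomial coefficients plus the noise variance
    bic = -2 * ll + D * np.log(N)        # BIC loss
    aic = -2 * ll + 2 * D                # AIC loss
    scores[degree] = (bic, aic)
    print(degree, round(bic, 1), round(aic, 1))

best_bic = min(scores, key=lambda d: scores[d][0])
best_aic = min(scores, key=lambda d: scores[d][1])
print("BIC picks degree", best_bic, "AIC picks degree", best_aic)
```

Since $\log 50 \approx 3.9 > 2$, each extra parameter costs more under BIC than under AIC here, so BIC never picks a higher degree than AIC on the same data.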

- This estimator can be derived from a frequentist perspective.

Minimum description length (MDL)

Frequentist decision theory

Computing the risk of an estimator

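We define the frequentist risk of an estimator $\pi$ given an unknown state of nature $\theta$ to be the expected loss when applying that estimator to data $\boldsymbol{x}$ sampled from the likelihood $p(\boldsymbol{x} \mid \theta)$: $R(\theta, \pi) = \mathbb{E}_{p(\boldsymbol{x} \mid \theta)}\left[\ell(\theta, \pi(\boldsymbol{x}))\right]$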

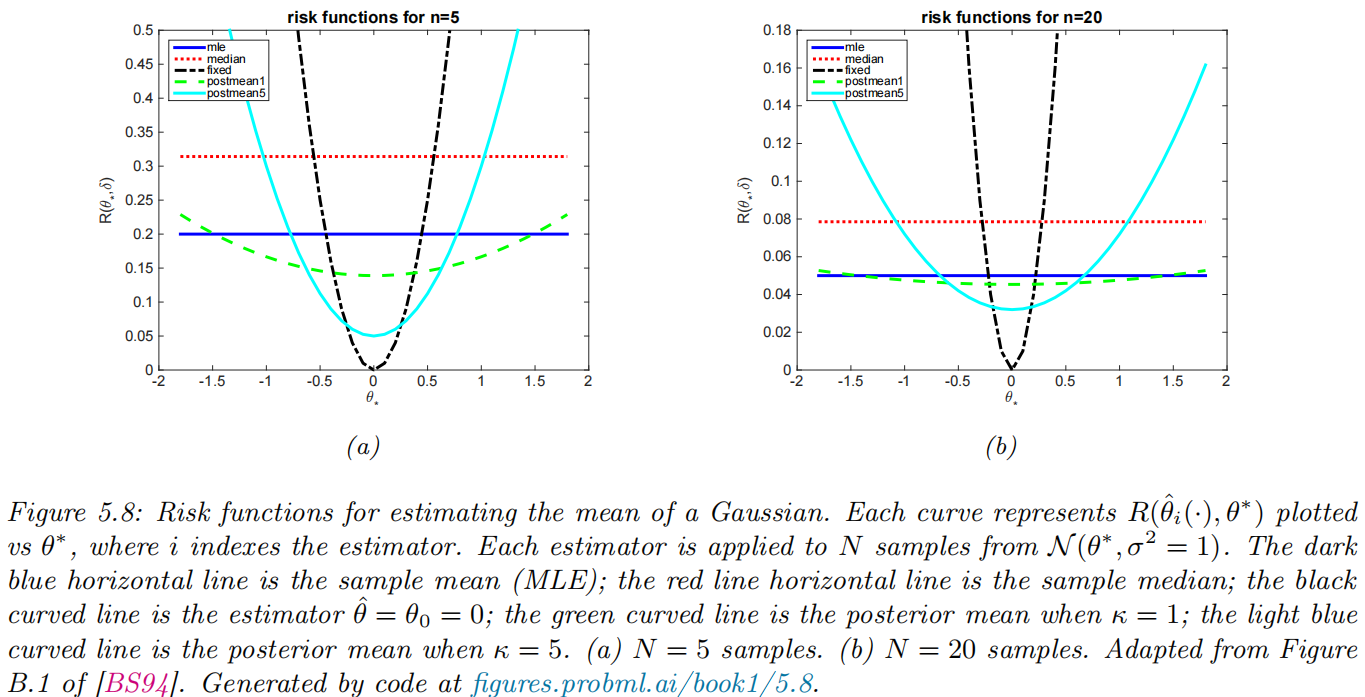

Example: estimate a Gaussian mean

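- assume the data is sampled from $\mathcal{N}(\theta^*, \sigma^2 = 1)$, and $\hat{\theta} = \pi(\mathcal{D})$ is an estimate of $\theta^*$ computed from $N$ samples

- quadratic loss: $\ell_2(\theta^*, \hat{\theta}) = (\theta^* - \hat{\theta})^2$

- risk function: MSE, $R(\theta^*, \pi) = \mathbb{E}\left[(\theta^* - \hat{\theta})^2\right]$

- 5 different estimators for computing $\theta$:

- sample mean: $\pi_1(\mathcal{D}) = \bar{x}$

- sample median: $\pi_2(\mathcal{D}) = \operatorname{median}(\mathcal{D})$

- a fixed value: $\pi_3(\mathcal{D}) = \theta_0$

- posterior mean under a $\mathcal{N}(\theta \mid \theta_0, \sigma^2/\kappa)$ prior: $\pi_\kappa(\mathcal{D}) = \frac{N\bar{x} + \kappa\theta_0}{N + \kappa}$

- weak case: $\kappa = 1$

- strong case: $\kappa = 5$

- MSE:

- sample mean: $\frac{\sigma^2}{N}$

- sample median: approximately $\frac{\pi}{2}\frac{\sigma^2}{N}$ for large $N$

- fixed value: $(\theta^* - \theta_0)^2$

- posterior: $\frac{N\sigma^2 + \kappa^2(\theta_0 - \theta^*)^2}{(N + \kappa)^2}$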
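These risk formulas can be checked by simulation. The sketch below estimates the MSE of the five estimators by Monte Carlo for a few values of $\theta^*$, assuming $N = 5$, $\sigma = 1$, and $\theta_0 = 0$ (illustrative choices). No estimator has the lowest risk for every $\theta^*$: the fixed value wins when $\theta^* = \theta_0$ and loses badly otherwise.

```python
import numpy as np

def mc_risk(theta_star, N=5, theta0=0.0, sigma=1.0, trials=100_000, seed=0):
    """Monte Carlo estimate of the frequentist risk (MSE) of each estimator."""
    rng = np.random.default_rng(seed)
    X = rng.normal(theta_star, sigma, size=(trials, N))   # one dataset D per row
    xbar = X.mean(axis=1)
    estimates = {
        "mean": xbar,
        "median": np.median(X, axis=1),
        "fixed": np.full(trials, theta0),
        "post(kappa=1)": (N * xbar + 1 * theta0) / (N + 1),
        "post(kappa=5)": (N * xbar + 5 * theta0) / (N + 5),
    }
    return {name: np.mean((est - theta_star) ** 2) for name, est in estimates.items()}

for theta_star in [0.0, 0.5, 1.0, 2.0]:
    print(theta_star, {k: round(v, 3) for k, v in mc_risk(theta_star).items()})
```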

Bayes risk

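- Bayes risk (integrated risk): $R_{\pi_0}(\pi) = \mathbb{E}_{\pi_0(\theta)}\left[R(\theta, \pi)\right] = \int\!\!\int \pi_0(\theta)\, p(\boldsymbol{x} \mid \theta)\, \ell(\theta, \pi(\boldsymbol{x}))\, d\theta\, d\boldsymbol{x}$, where $\pi_0(\theta)$ is a prior over the unknown state of nature

- Bayes estimator: $\pi_B = \operatorname{argmin}_{\pi} R_{\pi_0}(\pi)$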

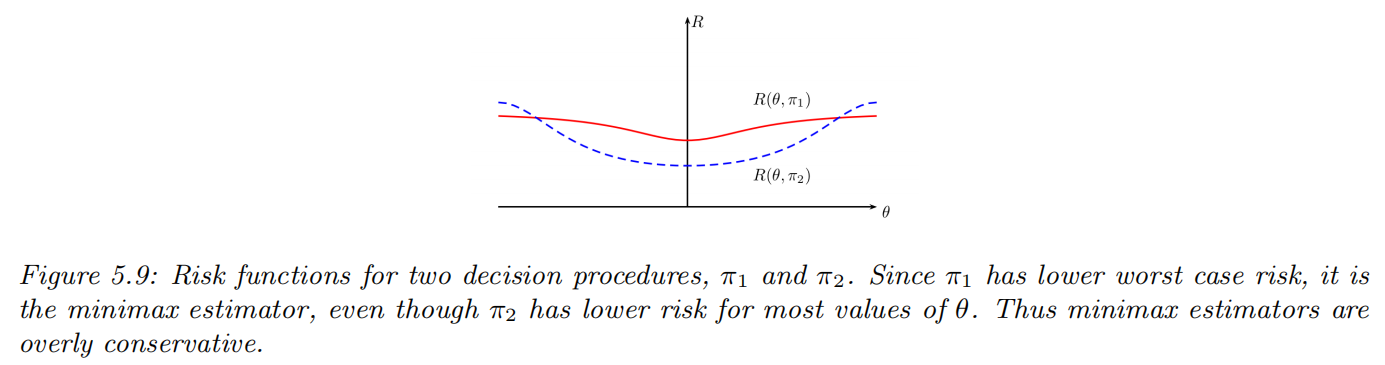

Maximum risk

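- Maximum risk: $R_{\max}(\pi) = \sup_{\theta} R(\theta, \pi)$; the minimax estimator minimizes it, $\pi_{MM} = \operatorname{argmin}_{\pi} R_{\max}(\pi)$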

Empirical risk minimization

Empirical risk

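- Population risk: $R(f, p^*) = \mathbb{E}_{p^*(\boldsymbol{x}, y)}\left[\ell(y, f(\boldsymbol{x}))\right]$

- Empirical distribution: $p_{\mathcal{D}}(\boldsymbol{x}, y) = \frac{1}{N}\sum_{n=1}^{N} \delta(\boldsymbol{x} - \boldsymbol{x}_n)\,\delta(y - y_n)$, which replaces the unknown $p^*$

- Empirical risk: $R(f, \mathcal{D}) = \mathbb{E}_{p_{\mathcal{D}}(\boldsymbol{x}, y)}\left[\ell(y, f(\boldsymbol{x}))\right] = \frac{1}{N}\sum_{n=1}^{N} \ell(y_n, f(\boldsymbol{x}_n))$

- Empirical risk minimization (ERM): $f^*_{\text{erm}} = \operatorname{argmin}_{f \in \mathcal{H}} R(f, \mathcal{D})$, where $\mathcal{H}$ is the hypothesis space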

Approximation error vs estimation error

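- Notations: $f^{**} = \operatorname{argmin}_f R(f)$ is the best possible function; $f^* = \operatorname{argmin}_{f \in \mathcal{H}} R(f)$ is the best function in the hypothesis space $\mathcal{H}$; $f^*_N = \operatorname{argmin}_{f \in \mathcal{H}} R(f, \mathcal{D})$ is the ERM solution on a training set of size $N$ (here $R(f)$ is shorthand for the population risk $R(f, p^*)$)

- Error decomposition: approximation error ($\mathcal{E}_{\text{app}}$) plus estimation error ($\mathcal{E}_{\text{est}}$),

$$\mathbb{E}_{p^*}\left[R(f^*_N) - R(f^{**})\right] = \underbrace{R(f^*) - R(f^{**})}_{\mathcal{E}_{\text{app}}(\mathcal{H})} + \underbrace{\mathbb{E}_{p^*}\left[R(f^*_N) - R(f^*)\right]}_{\mathcal{E}_{\text{est}}(\mathcal{H}, N)}$$

- generalization gap: the estimation error can be approximated by the difference between the test and training errors, $\mathbb{E}_{p^*}\left[R(f^*_N) - R(f^*)\right] \approx R(f^*_N, \mathcal{D}_{\text{test}}) - R(f^*_N, \mathcal{D}_{\text{train}})$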

Regularized risk

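- regularized empirical risk: $R_\lambda(f, \mathcal{D}) = R(f, \mathcal{D}) + \lambda C(f)$, where $C(f)$ measures the complexity of $f$ and $\lambda \geq 0$ controls the strength of the penalty

- parametric function form: $R_\lambda(\boldsymbol{\theta}, \mathcal{D}) = R(\boldsymbol{\theta}, \mathcal{D}) + \lambda C(\boldsymbol{\theta})$

- log loss, negative log prior regularizer: $R_\lambda(\boldsymbol{\theta}, \mathcal{D}) = -\frac{1}{N}\sum_{n=1}^{N} \log p(y_n \mid \boldsymbol{x}_n, \boldsymbol{\theta}) - \lambda \log p(\boldsymbol{\theta})$

Minimizing this is equivalent to MAP estimation (when $\lambda = 1/N$).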
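A standard instance: with a Gaussian likelihood $\mathcal{N}(y \mid \boldsymbol{w}^\top\boldsymbol{x}, \sigma^2)$ and a Gaussian prior $\mathcal{N}(\boldsymbol{w} \mid \boldsymbol{0}, \tau^2\mathbf{I})$, the regularized risk becomes, up to positive scaling, $\sum_n (y_n - \boldsymbol{w}^\top\boldsymbol{x}_n)^2 + \lambda\|\boldsymbol{w}\|^2$ with $\lambda = \sigma^2/\tau^2$, i.e. ridge regression. The sketch below, on synthetic data and with an arbitrary $\lambda = 2$, computes this MAP estimate in closed form and cross-checks it against scikit-learn's `Ridge` with `fit_intercept=False`.

```python
import numpy as np
from sklearn.linear_model import Ridge

rng = np.random.default_rng(0)
# Synthetic data (assumption): sparse true weights plus Gaussian noise.
X = rng.normal(size=(40, 5))
w_true = np.array([1.0, -2.0, 0.0, 0.5, 0.0])
y = X @ w_true + rng.normal(scale=0.3, size=40)

lam = 2.0
# Closed-form minimizer of sum_n (y_n - w^T x_n)^2 + lam * ||w||^2 (the MAP estimate).
w_map = np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

# scikit-learn's Ridge minimizes the same objective when fit_intercept=False.
w_sklearn = Ridge(alpha=lam, fit_intercept=False).fit(X, y).coef_
print(np.round(w_map, 3), np.allclose(w_map, w_sklearn))
```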

Structural risk

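- how to pick the regularization strength $\lambda$? A natural idea is to minimize the lowest achievable empirical risk: $\hat{\lambda} = \operatorname{argmin}_{\lambda} \min_{\boldsymbol{\theta}} R_\lambda(\boldsymbol{\theta}, \mathcal{D})$

- It does not work: assuming $C(\boldsymbol{\theta}) \geq 0$, this always picks the least amount of regularization, $\lambda = 0$. The underlying problem is that the empirical risk underestimates the population risk (optimism of the training error)

- structural risk minimization: if we knew the regularized population risk $R_\lambda(\boldsymbol{\theta})$ instead of the regularized empirical risk $R_\lambda(\boldsymbol{\theta}, \mathcal{D})$, we could use it to pick a model of the right complexity

- two methods to estimate the population risk for a given model (value of $\lambda$):

- cross-validation

- statistical learning theory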

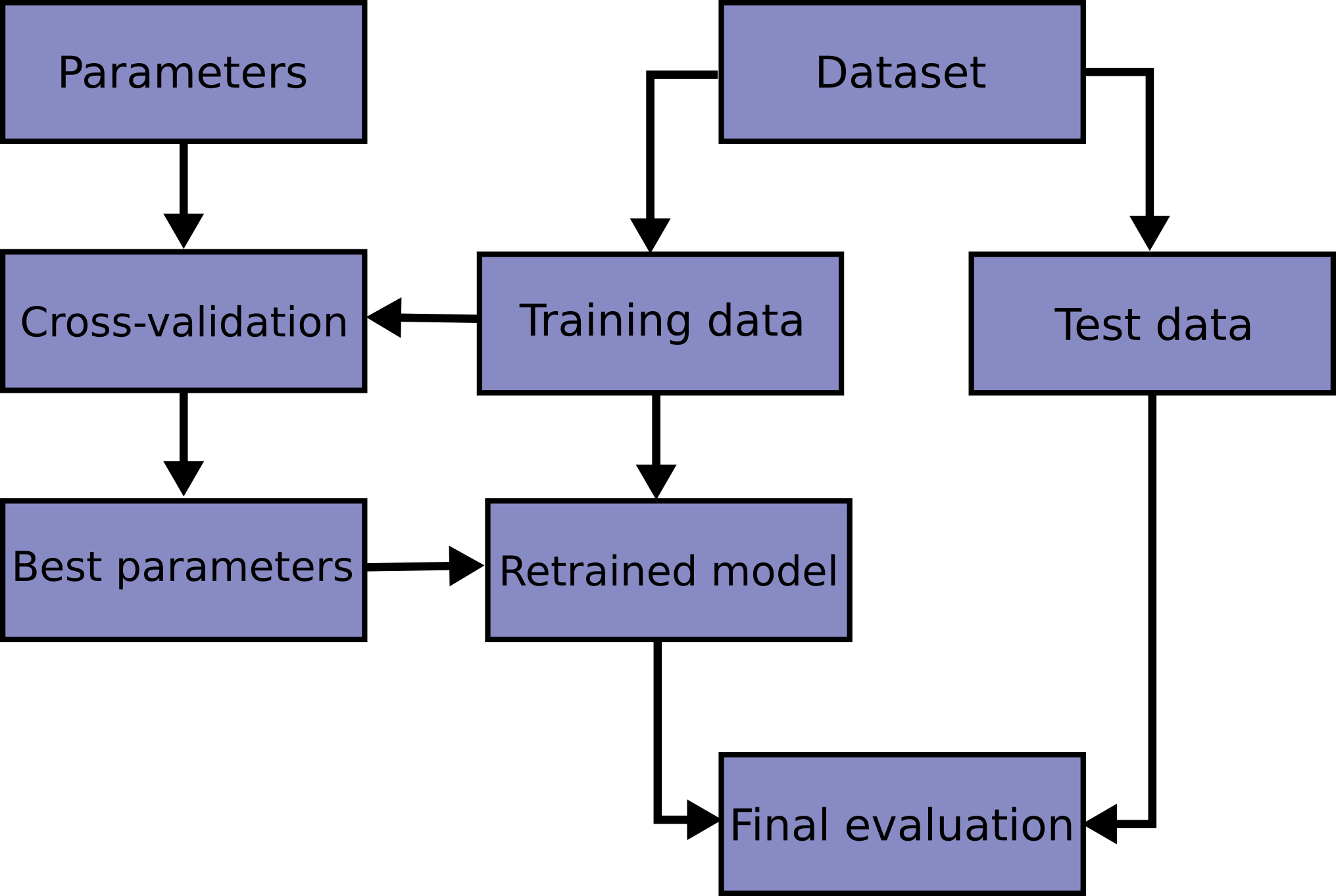

Cross-validation

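- partition the dataset into two parts:

- training set $\mathcal{D}_{\text{train}}$

- validation set $\mathcal{D}_{\text{valid}}$

- the empirical risk on a dataset $\mathcal{D}$ is $R_\lambda(\boldsymbol{\theta}, \mathcal{D})$; for each $\lambda$, fit the model on the training set: $\hat{\boldsymbol{\theta}}_\lambda(\mathcal{D}_{\text{train}}) = \operatorname{argmin}_{\boldsymbol{\theta}} R_\lambda(\boldsymbol{\theta}, \mathcal{D}_{\text{train}})$

- validation risk: use the unregularized empirical risk on the validation set as an estimate of the population risk, $R^{\text{val}}_\lambda = R_0(\hat{\boldsymbol{\theta}}_\lambda(\mathcal{D}_{\text{train}}), \mathcal{D}_{\text{valid}})$

- K-folds cross validation (CV):

- split the training data into $K$ folds $\mathcal{D}_1, \ldots, \mathcal{D}_K$

- for each fold $k \in \{1, \ldots, K\}$, train on all folds except the $k$'th, denoted $\mathcal{D}_{-k}$

- test on the $k$'th fold

- cross-validated risk: $R^{\text{cv}}_\lambda = \frac{1}{K}\sum_{k=1}^{K} R_0(\hat{\boldsymbol{\theta}}_\lambda(\mathcal{D}_{-k}), \mathcal{D}_k)$

- optimal parameters:

- optimal hyperparameters: $\hat{\lambda} = \operatorname{argmin}_{\lambda} R^{\text{cv}}_\lambda$

- optimal model parameters: refit on all the data, $\hat{\boldsymbol{\theta}} = \operatorname{argmin}_{\boldsymbol{\theta}} R_{\hat{\lambda}}(\boldsymbol{\theta}, \mathcal{D})$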

Information Theory

Entropy

The entropy of a probability distribution can be interpreted as a measure of uncertainty, or lack of predictability, associated with a random variable drawn from a given distribution. We can also use entropy to define the information content of a data source.

| Entropy of $X$ | Hard/easy to predict | Information content of $X$ |
|---|---|---|
| high | hard | high |
| low | easy | low |

Entropy for discrete random variables

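The entropy of a discrete random variable $X$ with distribution $p$ over $K$ states is defined by $\mathbb{H}(X) = -\sum_{k=1}^{K} p(X = k)\log p(X = k)$

- When we use log base 2, the units are called bits (short for binary digits).

- When we use log base $e$, the units are called nats.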

Cross entropy

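The cross entropy between distribution $p$ and $q$ is defined by $\mathbb{H}_{ce}(p, q) = -\sum_{k=1}^{K} p_k \log q_k$

The cross entropy is the expected number of bits needed to compress some data samples drawn from distribution $p$ using a code based on distribution $q$ (with log base 2).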

Joint entropy

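The joint entropy of two random variables $X$ and $Y$ is defined as $\mathbb{H}(X, Y) = -\sum_{x, y} p(x, y)\log_2 p(x, y)$

For example, consider choosing an integer from 1 to 8, $n \in \{1, \ldots, 8\}$, uniformly at random. Let $X(n) = 1$ if $n$ is even, and $Y(n) = 1$ if $n$ is prime:

| $n$ | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| $X$ | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 1 |
| $Y$ | 0 | 1 | 1 | 0 | 1 | 0 | 1 | 0 |

The joint distribution is

| $p(X, Y)$ | $Y = 0$ | $Y = 1$ |
|---|---|---|
| $X = 0$ | $\frac{1}{8}$ | $\frac{3}{8}$ |
| $X = 1$ | $\frac{3}{8}$ | $\frac{1}{8}$ |

so the joint entropy is given by

$$\mathbb{H}(X, Y) = -\left[\frac{1}{8}\log_2\frac{1}{8} + \frac{3}{8}\log_2\frac{3}{8} + \frac{3}{8}\log_2\frac{3}{8} + \frac{1}{8}\log_2\frac{1}{8}\right] \approx 1.81 \text{ bits}$$

Consider the marginal entropies: $p(X = 1) = p(X = 0) = 0.5$ and $p(Y = 1) = p(Y = 0) = 0.5$, so $\mathbb{H}(X) = \mathbb{H}(Y) = 1$ bit.

We observe that $\mathbb{H}(X, Y) \approx 1.81 < \mathbb{H}(X) + \mathbb{H}(Y) = 2$ bits.

In general, the above inequality is always valid: $\mathbb{H}(X, Y) \leq \mathbb{H}(X) + \mathbb{H}(Y)$. If X and Y are independent, then $\mathbb{H}(X, Y) = \mathbb{H}(X) + \mathbb{H}(Y)$.

Another observation is that $\mathbb{H}(X, Y) \geq \max\{\mathbb{H}(X), \mathbb{H}(Y)\} \geq 0$;

this says combining variables together does not make the entropy go down: you cannot reduce uncertainty merely by adding more unknowns to the problem, you need to observe some data.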
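The numbers in this example can be checked with a few lines of NumPy: the sketch rebuilds the joint table from the definitions of $X$ and $Y$, computes the joint and marginal entropies in bits, and also reports the conditional entropy via $\mathbb{H}(Y \mid X) = \mathbb{H}(X, Y) - \mathbb{H}(X)$.

```python
import numpy as np

n = np.arange(1, 9)
X = (n % 2 == 0).astype(int)                  # 1 if n is even
Y = np.isin(n, [2, 3, 5, 7]).astype(int)      # 1 if n is prime

def H(probs):
    """Entropy in bits, skipping zero-probability cells."""
    probs = probs[probs > 0]
    return -np.sum(probs * np.log2(probs))

# Joint distribution p(X, Y) with n uniform on {1, ..., 8}.
joint = np.zeros((2, 2))
for x_val, y_val in zip(X, Y):
    joint[x_val, y_val] += 1 / 8

H_XY = H(joint.ravel())
H_X, H_Y = H(joint.sum(axis=1)), H(joint.sum(axis=0))
print(joint)
print(H_XY, H_X, H_Y, H_XY - H_X)   # the last value is H(Y | X)
```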

Conditional entropy

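The conditional entropy of $Y$ given $X$ is the uncertainty we have in $Y$ after seeing $X$, averaged over possible values for $X$: $\mathbb{H}(Y \mid X) = \mathbb{E}_{p(X)}\left[\mathbb{H}(p(Y \mid X))\right] = -\sum_{x, y} p(x, y)\log p(y \mid x)$

It is straightforward to verify that: $\mathbb{H}(Y \mid X) = \mathbb{H}(X, Y) - \mathbb{H}(X)$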

Perplexity

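The perplexity of a discrete probability distribution $p$ is defined as $\mathrm{perplexity}(p) = 2^{\mathbb{H}(p)}$, where $\mathbb{H}(p)$ is measured in bits.

It is often interpreted as a measure of predictability: for a uniform distribution over $K$ states, the perplexity is $K$. Suppose we have an empirical distribution based on data $\mathcal{D} = \{x_1, \ldots, x_N\}$: $p_{\mathcal{D}}(x) = \frac{1}{N}\sum_{n=1}^{N} \delta_{x_n}(x)$

We can measure how well $p$ predicts $\mathcal{D}$ by computing $\mathrm{perplexity}(p_{\mathcal{D}}, p) = 2^{\mathbb{H}_{ce}(p_{\mathcal{D}}, p)}$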
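A short sketch of both uses of perplexity: for a single distribution it behaves like an "effective number of equally likely outcomes", and for a model $q$ evaluated on data it is $2$ raised to the average negative $\log_2$ probability of the observations, which is the cross-entropy with the empirical distribution. The model distribution and observations are hypothetical.

```python
import numpy as np

def perplexity(p):
    """2 ** H(p), with the entropy H measured in bits."""
    p = p[p > 0]
    return 2 ** (-np.sum(p * np.log2(p)))

print(perplexity(np.full(6, 1 / 6)))            # fair die: 6 equally likely outcomes
print(perplexity(np.array([0.9, 0.05, 0.05])))  # peaked: fewer "effective" choices

# Perplexity of a model q on data D: 2 ** cross-entropy(p_D, q).
data = np.array([0, 0, 1, 0, 2, 0, 0, 1])       # hypothetical observations in {0, 1, 2}
q = np.array([0.6, 0.25, 0.15])                 # hypothetical model distribution
ppl_model = 2 ** (-np.mean(np.log2(q[data])))
print(ppl_model)
```

By Gibbs' inequality, no model can achieve a lower perplexity on $\mathcal{D}$ than the empirical distribution $p_{\mathcal{D}}$ itself.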

Differential entropy for continuous random variables *

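If $X$ is a continuous random variable with pdf $p(x)$, we define the differential entropy as $h(X) = -\int_{\mathcal{X}} p(x)\log p(x)\, dx$, assuming this integral exists.

Differential entropy can be negative since pdfs can be bigger than 1.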

Example: Entropy of a Gaussian

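The entropy of a $d$-dimensional Gaussian is $h(\mathcal{N}(\boldsymbol{\mu}, \boldsymbol{\Sigma})) = \frac{1}{2}\log\left|2\pi e\boldsymbol{\Sigma}\right| = \frac{d}{2} + \frac{d}{2}\log(2\pi) + \frac{1}{2}\log|\boldsymbol{\Sigma}|$

In the 1d case, this becomes $h(\mathcal{N}(\mu, \sigma^2)) = \frac{1}{2}\log\left[2\pi e\sigma^2\right]$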
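A quick numerical check of these formulas (in nats) against SciPy's built-in `entropy()` methods for frozen normal distributions. The covariance matrix and the standard deviations are arbitrary illustrative values; the $\sigma = 0.1$ case shows a negative differential entropy.

```python
import numpy as np
from scipy.stats import multivariate_normal, norm

def gaussian_entropy(Sigma):
    """h = 0.5 * log det(2 pi e Sigma), in nats; accepts a scalar variance or a covariance matrix."""
    Sigma = np.atleast_2d(Sigma)
    _, logdet = np.linalg.slogdet(2 * np.pi * np.e * Sigma)
    return 0.5 * logdet

Sigma = np.array([[2.0, 0.3], [0.3, 1.0]])
print(gaussian_entropy(Sigma), multivariate_normal(mean=[0, 0], cov=Sigma).entropy())
for sigma in [0.1, 1.0, 2.0]:
    # Negative for small sigma, since the density then exceeds 1 near the mean.
    print(sigma, gaussian_entropy(sigma**2), norm(scale=sigma).entropy())
```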

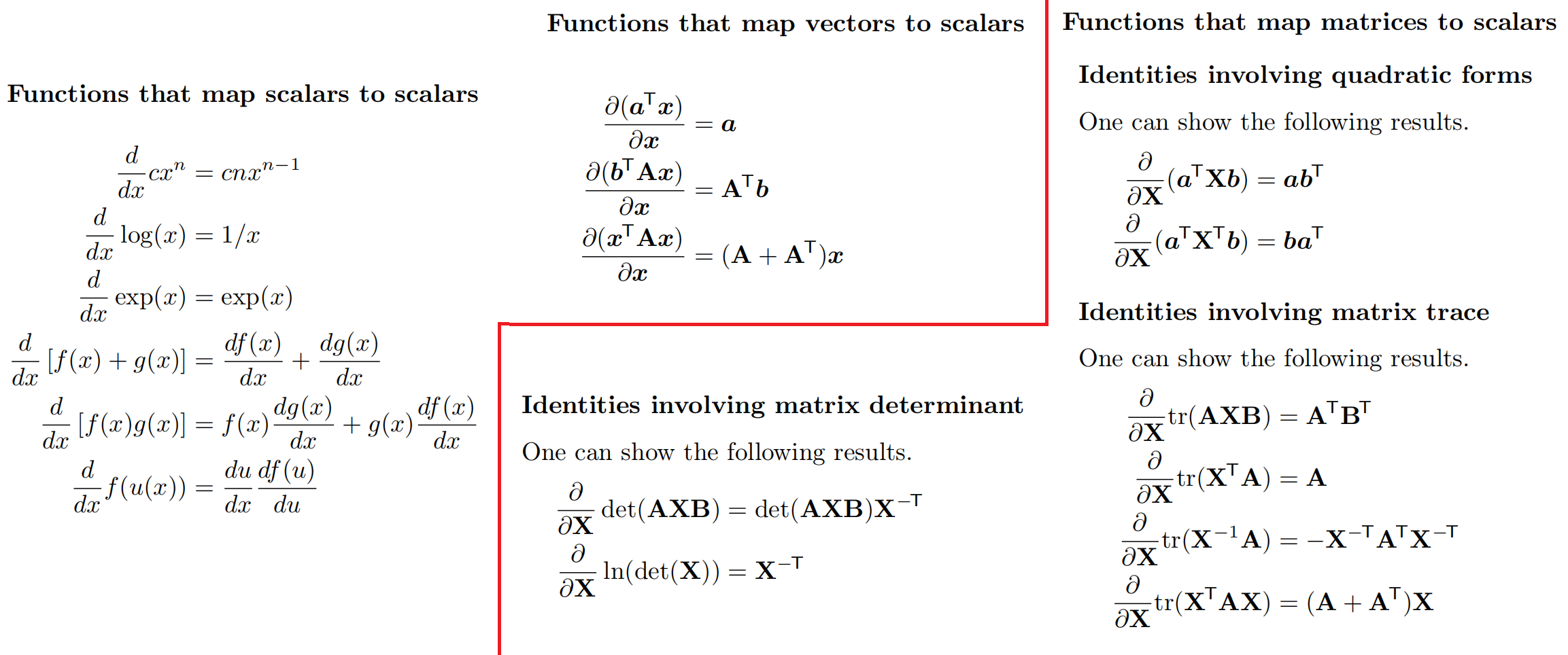

Linear Algebra

Matrix calculus

Derivatives

Gradients

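- Partial derivative: $\frac{\partial f}{\partial x_i} = \lim_{h \to 0}\frac{f(\boldsymbol{x} + h\boldsymbol{e}_i) - f(\boldsymbol{x})}{h}$, where $\boldsymbol{e}_i$ is the $i$'th unit vector

- Gradient: $\nabla f(\boldsymbol{x}) = \frac{\partial f}{\partial \boldsymbol{x}} = \left(\frac{\partial f}{\partial x_1}, \ldots, \frac{\partial f}{\partial x_n}\right)^\top$

- Gradient evaluated at point $\boldsymbol{x}^*$: $\boldsymbol{g}(\boldsymbol{x}^*) = \nabla f(\boldsymbol{x})\big|_{\boldsymbol{x}^*}$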

Directional derivative

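The directional derivative measures how much the function $f: \mathbb{R}^n \to \mathbb{R}$ changes along a direction $\boldsymbol{v}$ in space: $D_{\boldsymbol{v}} f(\boldsymbol{x}) = \lim_{h \to 0}\frac{f(\boldsymbol{x} + h\boldsymbol{v}) - f(\boldsymbol{x})}{h}$

Note that the directional derivative along $\boldsymbol{v}$ is the scalar product of the gradient and $\boldsymbol{v}$: $D_{\boldsymbol{v}} f(\boldsymbol{x}) = \nabla f(\boldsymbol{x}) \cdot \boldsymbol{v}$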

Total derivative

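Suppose that some of the arguments to the function depend on each other. Concretely, suppose the function has the form $f(t, x(t), y(t))$. We define the total derivative of $f$ with respect to $t$ as

$$\frac{df}{dt} = \frac{\partial f}{\partial t} + \frac{\partial f}{\partial x}\frac{dx}{dt} + \frac{\partial f}{\partial y}\frac{dy}{dt}$$

If we multiply both sides by the differential $dt$, we get the total differential

$$df = \frac{\partial f}{\partial t}dt + \frac{\partial f}{\partial x}dx + \frac{\partial f}{\partial y}dy$$

This measures how much $f$ changes when we change $t$, both via the direct effect of $t$ on $f$ and via the indirect effects of $t$ on $x$ and $y$.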

Jacobian

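Consider a function that maps a vector to another vector, $\boldsymbol{f}: \mathbb{R}^n \to \mathbb{R}^m$. The Jacobian of this function is the $m \times n$ matrix of partial derivatives $\mathbf{J}_{\boldsymbol{f}}(\boldsymbol{x}) = \frac{\partial \boldsymbol{f}}{\partial \boldsymbol{x}^\top}$, with entries $\left[\mathbf{J}_{\boldsymbol{f}}(\boldsymbol{x})\right]_{ij} = \frac{\partial f_i}{\partial x_j}$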

Multiplying Jacobians and vectors

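The Jacobian vector product or JVP is defined to be the operation that corresponds to right-multiplying the Jacobian matrix $\mathbf{J} \in \mathbb{R}^{m \times n}$ by a vector $\boldsymbol{v} \in \mathbb{R}^n$:

$$\mathbf{J}_{\boldsymbol{f}}(\boldsymbol{x})\,\boldsymbol{v} = \lim_{h \to 0}\frac{\boldsymbol{f}(\boldsymbol{x} + h\boldsymbol{v}) - \boldsymbol{f}(\boldsymbol{x})}{h}$$

So we can see that we can approximate this numerically using just 2 calls to $\boldsymbol{f}$.

The vector Jacobian product or VJP is defined to be the operation that corresponds to left-multiplying the Jacobian matrix $\mathbf{J} \in \mathbb{R}^{m \times n}$ by a vector $\boldsymbol{u} \in \mathbb{R}^m$: $\boldsymbol{u}^\top \mathbf{J}_{\boldsymbol{f}}(\boldsymbol{x})$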
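A small sketch of the JVP approximation: a forward difference using two evaluations of a hypothetical function $\boldsymbol{f}: \mathbb{R}^3 \to \mathbb{R}^2$ is compared with the exact product $\mathbf{J}\boldsymbol{v}$, using a Jacobian written out by hand. The VJP $\boldsymbol{u}^\top\mathbf{J}$ is also formed. The step size $h = 10^{-6}$ is an arbitrary choice that balances truncation error against floating-point cancellation.

```python
import numpy as np

def f(x):
    """Example map f: R^3 -> R^2 (chosen for illustration)."""
    return np.array([x[0] * x[1], np.sin(x[2]) + x[0] ** 2])

def jacobian(x):
    """Analytic m x n Jacobian of f, with entries J_ij = d f_i / d x_j."""
    return np.array([[x[1], x[0], 0.0],
                     [2 * x[0], 0.0, np.cos(x[2])]])

x = np.array([1.0, 2.0, 0.5])
v = np.array([0.3, -1.0, 2.0])   # tangent vector in R^n (n = 3)
u = np.array([1.0, 0.5])         # cotangent vector in R^m (m = 2)

h = 1e-6
jvp_fd = (f(x + h * v) - f(x)) / h        # two calls to f, no Jacobian formed
jvp_exact = jacobian(x) @ v               # J v, a vector in R^m
vjp_exact = u @ jacobian(x)               # u^T J, a vector in R^n
print(jvp_fd, jvp_exact, vjp_exact)
```

The JVP lives in the output space $\mathbb{R}^m$ and the VJP in the input space $\mathbb{R}^n$; forward-mode and reverse-mode automatic differentiation compute these two products, respectively, without forming $\mathbf{J}$.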

Jacobian of a composition

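Let $h(\boldsymbol{x}) = \boldsymbol{g}(\boldsymbol{f}(\boldsymbol{x}))$. By the chain rule, the Jacobian of the composition is the product of the Jacobians: $\mathbf{J}_h(\boldsymbol{x}) = \mathbf{J}_{\boldsymbol{g}}(\boldsymbol{f}(\boldsymbol{x}))\,\mathbf{J}_{\boldsymbol{f}}(\boldsymbol{x})$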

Hessian

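For a function $f: \mathbb{R}^n \to \mathbb{R}$ that is twice differentiable, we define the Hessian matrix as the (symmetric) $n \times n$ matrix of second partial derivatives: $\left[\mathbf{H}_f\right]_{ij} = \frac{\partial^2 f}{\partial x_i \partial x_j}$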

The Hessian is the Jacobian of the gradient.

Gradients of commonly used functions